Taking measurements for Tier 3 certification at a data center in Istanbul. And no, that's not me in the photo.

I lead data center construction projects in Russia and abroad, and I want to talk about how Russian data centers have acquired a design style of their own in recent years.

And I don't mean stories like this one: if the project has a containerized diesel generator set (DGU), you must design a smoking area close to the building exit right away. If you don't, people will smoke next to the DGU and throw cigarette butts on the ground or straight into the ventilation opening. When the generator has to start, the air stream will suck them all in from a radius of a couple of meters and immediately clog the filter.

Rather, I mean more global things: general ideas and design style. This style comes from the fact that the Russian customer usually knows he needs a data center but does not know what IT equipment he will put in it in a year or two, let alone in ten years. So the data center is designed to be as versatile as possible. If you describe the brief as "host any equipment that comes onto the market over the next ten years", you will not be far off. As a result, the design aims to make the facility easy to upgrade later without remodeling the building, major renovations, and so on.

Example: nobody knows up front how wide the cold aisle should be. There are minimum standards, but in recent years we have tried to make it at least 2.4 meters wide, because our designs include oversized equipment 1,500 mm deep, and its turning radius has to be taken into account when setting the aisle width.

Then a universal air conditioning system is designed for the halls. A few years ago the classic hot aisle / cold aisle scheme was quite enough; now the load per rack is growing, and highly loaded racks need various kinds of supplemental cooling. The average used to be 4–5 kW per rack. Now customers require at least 7–10 kW, and in the middle of the hall there may be individual racks with a load of up to 20 kW. This calls for new approaches to placing both IT equipment and air conditioning equipment in the machine room. There are now air conditioners mounted on top of the cabinets to save floor space, but they need a room with higher ceilings. Active raised floor tiles have appeared as well; they did not exist before either.

It often happens that during a modernization the air conditioners can no longer cope: they were once designed for 3 kW per rack, and now 7 kW has to be removed from the same racks. The customer says: replace everything. Sometimes that works out, sometimes it doesn't. In some cases we do replace everything, but sometimes we replace only the indoor units and keep the old chillers, provided they can handle the new loads and we have checked their remaining service life. It is often easier to add new air conditioners and distribute the flows with tiles.

I would like to single out projects where such modernization is done "hot", that is, when business processes cannot be interrupted even for a second, so the IT equipment cannot be stopped. This is a real challenge for the whole project team: tight deadlines, the highest level of responsibility, work seven days a week (and sometimes 24/7). And if it is also a small room where two riggers end up shoulder to shoulder...

Modernizing uninterruptible power supply systems also deserves a special mention. There are projects where the engineers seem to have deliberately done everything to keep their brainchild in its original form until old age. We go through the single-line diagram with the customer and together run into all sorts of "obstacles". There are so many bottlenecks, and the circuit is so complicated, that it is hard to believe it works at all.

The KISS principle (Keep it simple, stupid!) was clearly not applied.

Or take busbars inside the hall: fast installation, freed-up space under the raised floor, flexibility and ease of use. Power fed from above makes it easier to add or move racks.

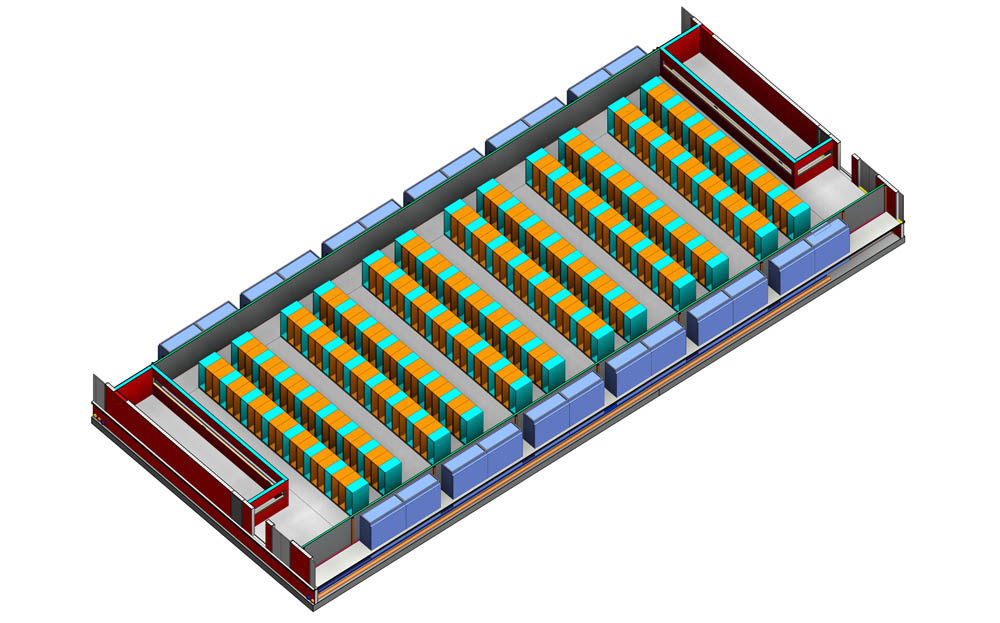

Since the customer cannot predict how the "heavy" racks will be distributed, we first sketch the general layout of the rooms, then build a model in which we consider different configurations of the hall and calculate the air flows for each.

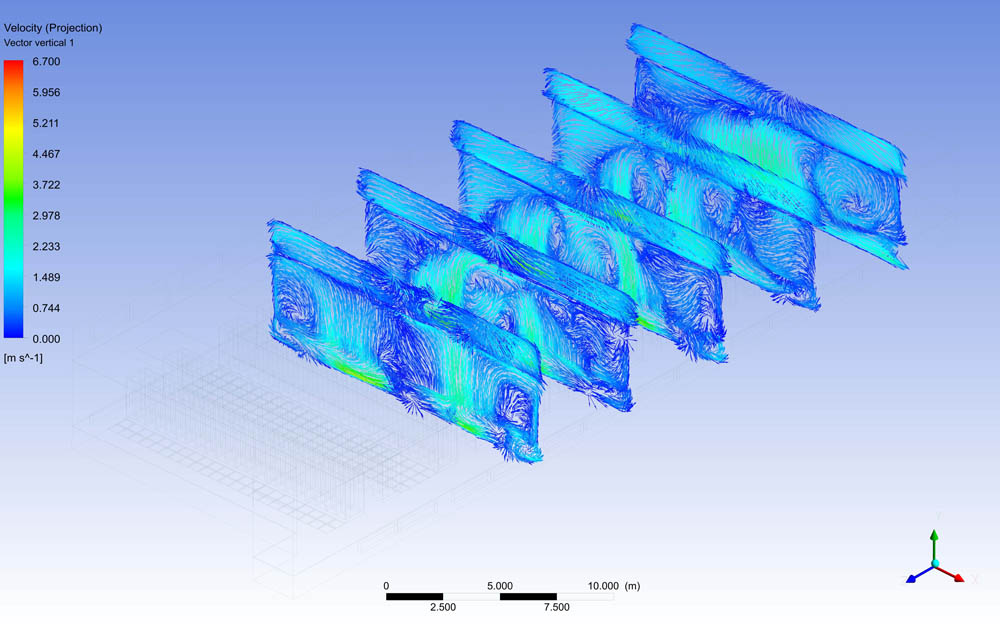

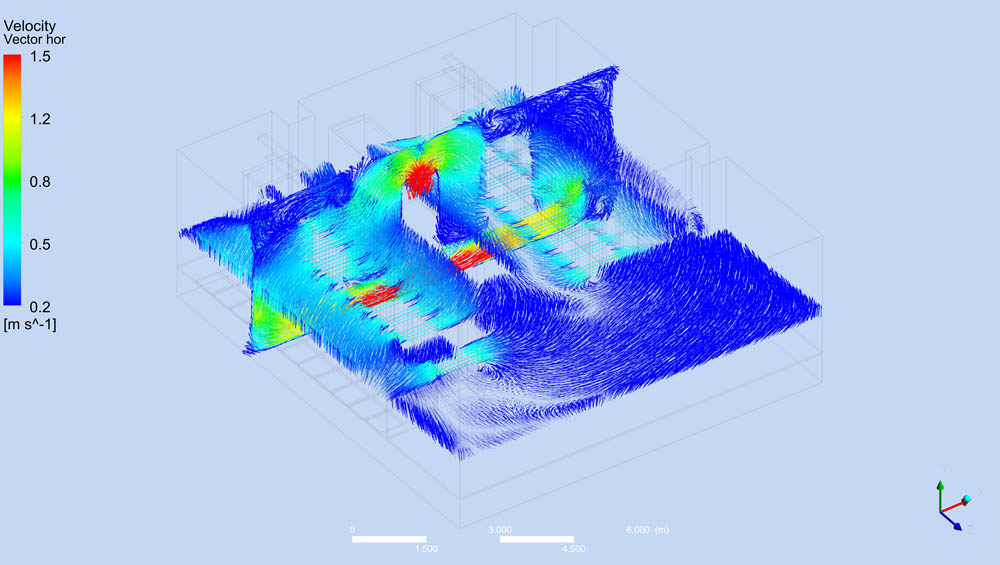

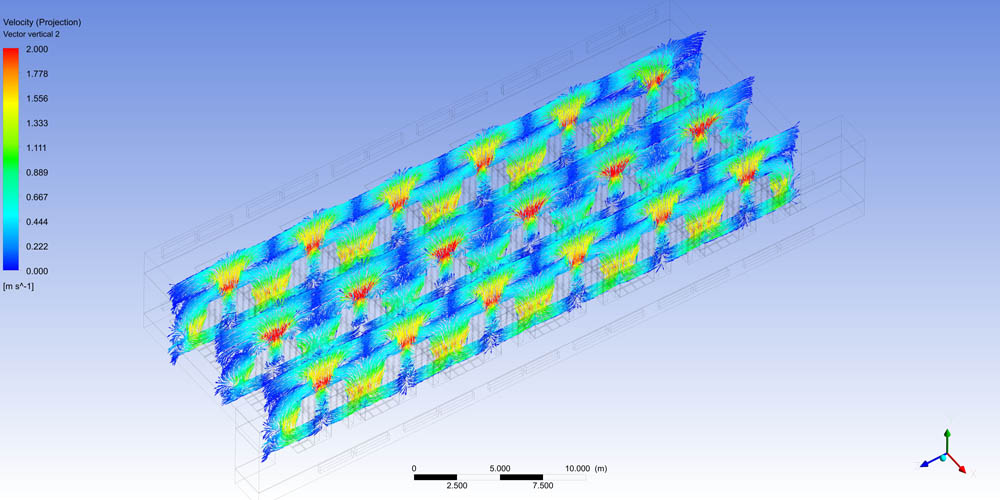

Then we examine the flows themselves. This is how it looks:

This matters because two adjacent rows can stand side by side with 14 kW per rack in one and 2 kW in the other. The average is eight, but one hot aisle will be very hot and its air conditioners will run themselves into the ground, while in the other they will trip on a fault because there is nothing to cool. In other words, the plan for deploying IT equipment needs to be thought through, at least roughly, already at the stage of designing the engineering systems and utilities.

There are dozens of nuances like this in a project.
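The averaging trap is easy to show with numbers. Below is a minimal sketch, assuming ten racks per row and a cooling design of 8 kW per rack; both figures, and the names `rows` and `row_summary`, are illustrative assumptions rather than data from a real project. Only the 14 kW and 2 kW rack loads come from the example above.

```python
from statistics import mean

# Per-rack loads in kW for two adjacent rows.
# 14 kW and 2 kW come from the text; ten racks per row is an assumption.
rows = {
    "row_a": [14.0] * 10,
    "row_b": [2.0] * 10,
}


def row_summary(rows, design_kw_per_rack):
    """Return the hall-wide average load and a per-row utilization report."""
    all_racks = [kw for racks in rows.values() for kw in racks]
    hall_avg = mean(all_racks)
    report = {}
    for name, racks in rows.items():
        total_kw = sum(racks)
        capacity_kw = design_kw_per_rack * len(racks)
        report[name] = {
            "total_kw": total_kw,
            "capacity_kw": capacity_kw,
            "utilization": total_kw / capacity_kw,
        }
    return hall_avg, report


# Assumed design point: cooling sized for the 8 kW hall average.
hall_avg, report = row_summary(rows, design_kw_per_rack=8.0)
for name, stats in report.items():
    print(name, stats)
print("hall average, kW per rack:", hall_avg)
```

With these inputs the hall average matches the design point exactly, yet one row asks for far more than its share of cooling and the other for a fraction of it. A real study would use the airflow model, not a per-row sum.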

Here is another source of unpredictability: the approvals process. Any new construction has to be approved many times by different authorities. It is a highly iterative process, and approval times are unpredictable. So in each case you have to find solutions that reach a compromise with the various authorities about what can and cannot be done in a particular building and on a particular site. A whole collection of engineering life hacks builds up: how to satisfy the requirements of several standards at once and still stay efficient.

In some regions it is not always possible to change the designated use of a building quickly. Data centers are often built in office buildings or former utility premises (garages, warehouses), and the designated use has to be changed to industrial. It often turns out that the customer never managed to change "garage" to "office" in the first place, and you have to go through the whole chain. Sometimes this means it is easier to choose a new site than to get the permit.

State-owned companies add another twist to this process. Their contracts are very strict, especially regarding deadlines. Meanwhile, internal approval between the customer's own departments takes an amount of time that nobody regulates.

On top of that comes the fear of new technologies. We see different attitudes to this in different countries: in some places they trust the contractor but check every proposed solution very strictly against the technical requirements, and in others both the customer and the contractor do things "the old-fashioned way". In our case there is usually a gap between what we know as designers, builders and practitioners and what the customer remembers from his previous data center. He built one ten years ago, and everything turned out well. So now he wants exactly the same data center, only bigger. Today that is not always a good idea. New legislation, environmental standards, technologies: the market changes quite quickly. I have often found that the customer simply does not know there is an alternative to the classic combination of static UPS + DGU. For them, a diesel-dynamic uninterruptible power supply (DDIBP) is a black box, and they discard that option immediately. The second phase of denial comes when the two options are compared on approvals from the Ministry of Emergency Situations, additional facilities, sewerage approvals and so on. But once you count all the pros and cons, you have a basis for the choice. As a rule, that basis is the cost over five, ten and 20 years, and everyone understands that language perfectly.
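A comparison "in money over five, ten and 20 years" can be sketched as a cumulative cost table. The example below is only an illustration: the cost model (capital cost plus a flat annual operating cost) is a deliberate simplification, and every number is a made-up placeholder in arbitrary units, chosen so that a crossover is visible. They say nothing about real equipment prices.

```python
def cumulative_cost(capex, annual_opex, years):
    """Simplified total cost: capital cost plus flat yearly operating cost."""
    return capex + annual_opex * years


# Placeholder figures in arbitrary units, NOT real prices for either option.
options = {
    "static_ups_plus_dgu": {"capex": 100.0, "annual_opex": 12.0},
    "diesel_dynamic_ups": {"capex": 130.0, "annual_opex": 8.0},
}

# Horizons named in the text.
horizons = (5, 10, 20)

table = {
    name: {
        years: cumulative_cost(opt["capex"], opt["annual_opex"], years)
        for years in horizons
    }
    for name, opt in options.items()
}

for name, costs in table.items():
    print(name, costs)
```

A real comparison would also need the items mentioned above (approvals, additional facilities, sewerage) plus maintenance, fuel and replacement cycles, all with the customer's own figures.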

This lack of understanding of the technology leads to the next problem: the customer does not always estimate deadlines realistically. This applies not only to data center construction and engineering infrastructure but to the whole IT market. The first reason is that the market is developing. Installation and commissioning now take longer, the work is more complex, and so the personnel involved need higher qualifications. In return, such systems are much easier to operate than those built on older technologies. That is the technology trend.

The second reason: the customer often has a long-term internal development program, and the project does not always start when the program says it should. But it must finish exactly on schedule! If a year was allotted for the server rooms and you started six months late, you have to build them in the remaining six months. Inside the company, deadlines do not move, so you have to come up with something. Sometimes the customer cuts the deadlines on purpose, because his experience shows that things can be done faster than stated. And they really can.

But on the delivery side there are usually customs formalities, seasonal delivery peaks, component supply problems at the factories, and August. For engineering infrastructure, August is simply dead. The major air conditioner manufacturers send entire plants on vacation at once; last year this pushed back the deadlines on one of our projects.

More often, projects have to be finished by January 1, and here Catholic Christmas leaves its mark. The Christmas break starts on December 21–22, exactly when Russia is at its busiest closing out projects. Then come our own holidays. If you are "lucky" and the truck with the cargo reached customs on December 20, it will be released on January 11. Those three weeks can cause problems. Delivery deadlines for state customers do not change: you can easily miss them through no fault of your own, damage your reputation or even end up on a blacklist. That is why we plan for a realistic period, not the minimum one.

Or take the production process: eight weeks for air conditioning and UPS, 12 weeks for a DGU, and that is when units are assembled from parts in stock. Factories do not quite work that way. So at the start of a project we try to agree on the milestones by which the equipment will arrive, so that the customer has a schedule for checking what is happening and how. It is important that the customer's manager understands: if they did not pay today, he himself has shifted the project deadline by that day.
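The milestone logic can be sketched as simple back-scheduling from the date the equipment must be on site. This is a minimal sketch: the lead times are the in-stock figures from the text, while the function names, the optional buffer and the target date are hypothetical.

```python
from datetime import date, timedelta

# Production lead times in weeks, as quoted in the text (parts in stock).
LEAD_TIME_WEEKS = {
    "air_conditioning": 8,
    "ups": 8,
    "diesel_generator": 12,
}


def latest_order_dates(on_site_by, buffer_weeks=0):
    """Latest order date per item so that it arrives by `on_site_by`.

    `buffer_weeks` is an assumed extra margin for customs, holidays
    or factory shutdowns.
    """
    return {
        item: on_site_by - timedelta(weeks=weeks + buffer_weeks)
        for item, weeks in LEAD_TIME_WEEKS.items()
    }


def slipped_delivery(on_site_by, payment_delay_days):
    """Each day of late payment shifts the delivery date by one day."""
    return on_site_by + timedelta(days=payment_delay_days)


on_site = date(2024, 3, 1)  # hypothetical target date
orders = latest_order_dates(on_site)
for item, order_by in sorted(orders.items(), key=lambda kv: kv[1]):
    print(f"{item}: order no later than {order_by.isoformat()}")
```

Passing a non-zero `buffer_weeks` is one way to reflect that factories rarely build from stock; how large that buffer should be is a project decision, not something this sketch can answer.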

Once we had to build a small data center in four months. The foundation was in place; no major renovation had been done. Two halls with 100 racks, 1.2 MW each. Work started in early December and finished on March 13. Not every contractor finds it convenient to begin a job at the start of winter and the end of the year: in Russia everyone is getting ready for the long holidays and vacations, and for builders both are sacred. We work with people who know the specifics of such projects, so they accept that we need three shifts with no breaks for holidays or weekends. You find the people and start. We don't always manage to start immediately; here work began a week later. Personally, on December 31 of that year my working day ended at 18:00. And on January 2 I was standing on site with the customer's project manager, watching the work progress.

No standard designs. In Europe, data centers are typically built from a single design: do it once and you can replicate it across the country. That does not work for us. We cannot apply the same standard design in the south and in the north. Fuel costs differ. Electricity prices differ. Environmental requirements differ. Average annual temperatures differ. Free cooling works well in the north. In the south you can install solar panels to shave the data center's load peaks; in the north they will at best help light the grounds. A wind turbine means a very long and expensive approval process, and it cannot be installed and operated everywhere. The party that initiates the data center project is not always the one that will operate it, so building cheaply now often matters more than lowering operating costs later.

A recent case: a vendor supplied diesel equipment, and the machines arrived built to a specification for hot-climate countries, that is, for Saudi Arabia. The machines need cooling, and here they differ from the "normal" ones in the radius of the fan. Technically this is not a drawback, but for us it is bad, because we have to deliver containers of a standard size. The machines are powerful and fit perfectly into a standard 12-meter container, but this fan sticks out beyond the envelope. I had to ask the contractors to build a non-standard container within standard dimensions: with protrusions, canopies against precipitation and wind, and access openings.

I especially want to mention data centers beyond the Urals. Getting equipment there means covering huge distances on terrible roads, and it does not always arrive intact. Not all vendors are ready to provide support in remote regions. We have the expertise and a good engineering service, so such jobs often come to us. For critical data centers it is important to understand that not everyone will sign up for a four-hour SLA at some mining site. Outside Moscow, St. Petersburg, Yekaterinburg and Kazan, such SLAs generally sound like science fiction. And in the Far North the word SLA takes on a sarcastic ring altogether.

The consequence is that you choose vendors with a wide service network. A cheaper vendor often turns out worse in operation.
