في منشور سابق ، قمنا بتحجيم مجموعة النسخ المتماثلة MongoDB وقدمنا StatefulSet. سنتولى الآن تنسيق مجموعة Elasticsearch عالية التوفر (مع العقد الرئيسية الأخرى ، وعقد البيانات ، وعقد العميل) واستخدام ES-HQ و Kibana.

ستحتاج:

- فهم أساسي لل Elasticsearch ، وأنواع العقد ، وأدوارها.

- كتلة Kubernetes تعمل مع ثلاثة العقد على الأقل (أربعة النوى على الأقل ، 4 جيجابايت).

- القدرة على العمل مع Kibana.

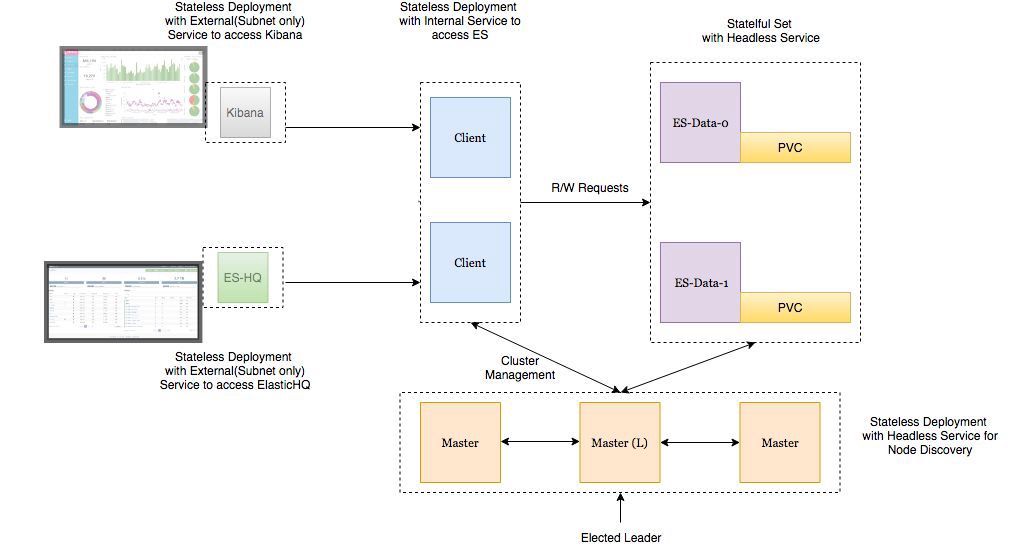

هندسة النشر

- يتم نشر عقد بيانات Elasticsearch كملف StatefulSet مع خدمة مقطوعة الرأس بحيث يكون لدينا معرفات شبكة ثابتة .

- يتم نشر الأشرطة الرئيسية Elasticsearch كـ ReplicaSet مع خدمة مقطوعة الرأس. هذا هو لاكتشاف السيارات .

- يتم نشر Pods على عقد عميل Elasticsearch كـ ReplicaSet مع خدمة داخلية بحيث يمكنك إرسال طلبات القراءة / الكتابة إلى عقد البيانات.

- يتم نشر قرون Kibana و ElasticHQ كـ ReplicaSet مع الخدمات المتوفرة خارج مجموعة Kubernetes ، ولكن توجد داخل الشبكة الفرعية (لا تفتح للخارج دون داع).

- يتم نشر HPA (Horizonal Pod Autoscaler) للعُقد الخاصة بالعميل ، وهي مسؤولة عن الفحص التلقائي الأفقي عند التحميل العالي.

"تذكر تكوين البيئة:

- ES_JAVA_OPTS متغير.

- متغير CLUSTER_NAME .

- المتغير NUMBER_OF_MASTERS لنشر الأساتذة لتجنب وضع انقسام الدماغ. إذا كان لدينا 3 أسياد ، حدد 2.

- قواعد مكافحة التقارب لقلوب مماثلة لضمان موثوقية عالية إذا سقطت عقدة العمل.

"

دعنا نشر هذه الخدمات في مجموعة GKE.

kind: Namespace metadata: name: elasticsearch --- apiVersion: apps/v1beta1 kind: Deployment metadata: name: es-master namespace: elasticsearch labels: component: elasticsearch role: master spec: replicas: 3 template: metadata: labels: component: elasticsearch role: master spec: affinity: podAntiAffinity: preferredDuringSchedulingIgnoredDuringExecution: - weight: 100 podAffinityTerm: labelSelector: matchExpressions: - key: role operator: In values: - master topologyKey: kubernetes.io/hostname initContainers: - name: init-sysctl image: busybox:1.27.2 command: - sysctl - -w - vm.max_map_count=262144 securityContext: privileged: true containers: - name: es-master image: quay.io/pires/docker-elasticsearch-kubernetes:6.2.4 env: - name: NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: NODE_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: CLUSTER_NAME value: my-es - name: NUMBER_OF_MASTERS value: "2" - name: NODE_MASTER value: "true" - name: NODE_INGEST value: "false" - name: NODE_DATA value: "false" - name: HTTP_ENABLE value: "false" - name: ES_JAVA_OPTS value: -Xms256m -Xmx256m - name: PROCESSORS valueFrom: resourceFieldRef: resource: limits.cpu resources: limits: cpu: 2 ports: - containerPort: 9300 name: transport volumeMounts: - name: storage mountPath: /data volumes: - emptyDir: medium: "" name: "storage" --- apiVersion: v1 kind: Service metadata: name: elasticsearch-discovery namespace: elasticsearch labels: component: elasticsearch role: master spec: selector: component: elasticsearch role: master ports: - name: transport port: 9300 protocol: TCP clusterIP: None view rawes-master.yml hosted with love by GitHub

(نشر وخدمة مقطوعة الرأس للعقد الرئيسية)

root$ kubectl -n elasticsearch get all NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE deploy/es-master 3 3 3 3 32s NAME DESIRED CURRENT READY AGE rs/es-master-594b58b86c 3 3 3 31s NAME READY STATUS RESTARTS AGE po/es-master-594b58b86c-9jkj2 1/1 Running 0 31s po/es-master-594b58b86c-bj7g7 1/1 Running 0 31s po/es-master-594b58b86c-lfpps 1/1 Running 0 31s NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE svc/elasticsearch-discovery ClusterIP None <none> 9300/TCP 31s

من المثير للاهتمام دراسة سجلات الموقد على العقد الرئيسية ومعرفة كيفية اختيار المعلم من بينها الآن وكيف سيكون لاحقًا عند إضافة عقد بيانات جديدة وعقد عميل.

root$ kubectl -n elasticsearch logs -f po/es-master-594b58b86c-9jkj2 | grep ClusterApplierService [2018-10-21T07:41:54,958][INFO ][oecsClusterApplierService] [es-master-594b58b86c-9jkj2] detected_master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300}, added {{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300},{es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [3]])

هنا يمكنك أن ترى أنه ضمن es-master يحمل الاسم es-master-594b58b86c-bj7g7 من قبل المعلم ، ويتم إضافة جرابين آخرين إليه وإلى بعضهم البعض.

يتم تثبيت خدمة elasticsearch-discovery بدون رأس افتراضيًا في صورة Docker كمتغير بيئة وتستخدم للكشف في العقد. يمكن استبدال هذا الإعداد إذا كنت ترغب في ذلك.

وبالمثل ، نقوم بنشر عقد البيانات وعقد العميل . انظر التكوينات أدناه.

نشر عقدة البيانات:

kind: Namespace metadata: name: elasticsearch --- apiVersion: storage.k8s.io/v1beta1 kind: StorageClass metadata: name: fast provisioner: kubernetes.io/gce-pd parameters: type: pd-ssd fsType: xfs allowVolumeExpansion: true --- apiVersion: apps/v1beta1 kind: StatefulSet metadata: name: es-data namespace: elasticsearch labels: component: elasticsearch role: data spec: serviceName: elasticsearch-data replicas: 3 template: metadata: labels: component: elasticsearch role: data spec: affinity: podAntiAffinity: preferredDuringSchedulingIgnoredDuringExecution: - weight: 100 podAffinityTerm: labelSelector: matchExpressions: - key: role operator: In values: - data topologyKey: kubernetes.io/hostname initContainers: - name: init-sysctl image: busybox:1.27.2 command: - sysctl - -w - vm.max_map_count=262144 securityContext: privileged: true containers: - name: es-data image: quay.io/pires/docker-elasticsearch-kubernetes:6.2.4 env: - name: NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: NODE_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: CLUSTER_NAME value: my-es - name: NODE_MASTER value: "false" - name: NODE_INGEST value: "false" - name: HTTP_ENABLE value: "false" - name: ES_JAVA_OPTS value: -Xms256m -Xmx256m - name: PROCESSORS valueFrom: resourceFieldRef: resource: limits.cpu resources: limits: cpu: 2 ports: - containerPort: 9300 name: transport volumeMounts: - name: storage mountPath: /data volumeClaimTemplates: - metadata: name: storage annotations: volume.beta.kubernetes.io/storage-class: "fast" spec: accessModes: [ "ReadWriteOnce" ] storageClassName: fast resources: requests: storage: 10Gi --- apiVersion: v1 kind: Service metadata: name: elasticsearch-data namespace: elasticsearch labels: component: elasticsearch role: data spec: ports: - port: 9300 name: transport clusterIP: None selector: component: elasticsearch role: data view rawes-data.yml hosted with love by GitHub

(StatefulSet وخدمة مقطوعة الرأس لعقد البيانات)

تصدر الخدمة مقطوعة الرأس في عقد البيانات معرفات شبكة ثابتة للعقد وتساعد في نقل البيانات بين العقد.

من المهم تنسيق الصوت الدائم قبل إرفاقه بالموقد. فقط حدد نوع وحدة التخزين عند إنشاء فئة التخزين. يمكنك أيضًا تعيين معلمة تسمح بزيادة حجم الصوت تلقائيًا . قراءة التفاصيل هنا .

parameters: type: pd-ssd fsType: xfs allowVolumeExpansion: true ...

نشر عقد العميل:

kind: Namespace metadata: name: elasticsearch --- apiVersion: apps/v1beta1 kind: Deployment metadata: name: es-client namespace: elasticsearch labels: component: elasticsearch role: client spec: replicas: 2 template: metadata: labels: component: elasticsearch role: client spec: affinity: podAntiAffinity: preferredDuringSchedulingIgnoredDuringExecution: - weight: 100 podAffinityTerm: labelSelector: matchExpressions: - key: role operator: In values: - client topologyKey: kubernetes.io/hostname initContainers: - name: init-sysctl image: busybox:1.27.2 command: - sysctl - -w - vm.max_map_count=262144 securityContext: privileged: true containers: - name: es-client image: quay.io/pires/docker-elasticsearch-kubernetes:6.2.4 env: - name: NAMESPACE valueFrom: fieldRef: fieldPath: metadata.namespace - name: NODE_NAME valueFrom: fieldRef: fieldPath: metadata.name - name: CLUSTER_NAME value: my-es - name: NODE_MASTER value: "false" - name: NODE_DATA value: "false" - name: HTTP_ENABLE value: "true" - name: ES_JAVA_OPTS value: -Xms256m -Xmx256m - name: NETWORK_HOST value: _site_,_lo_ - name: PROCESSORS valueFrom: resourceFieldRef: resource: limits.cpu resources: limits: cpu: 1 ports: - containerPort: 9200 name: http - containerPort: 9300 name: transport volumeMounts: - name: storage mountPath: /data volumes: - emptyDir: medium: "" name: storage --- apiVersion: v1 kind: Service metadata: name: elasticsearch namespace: elasticsearch annotations: cloud.google.com/load-balancer-type: Internal labels: component: elasticsearch role: client spec: selector: component: elasticsearch role: client ports: - name: http port: 9200 type: LoadBalancer view rawes-client.yml hosted with love by GitHub

(نشر والخدمة الخارجية لعقد العميل)

توفر الخدمة المنشورة هنا إمكانية الوصول إلى نظام المجموعة ES خارج مجموعة Kubernetes ، ولكنها لا تزال داخل الشبكة الفرعية. التعليق التوضيحي cloud.google.com/load-balancer-type: داخلي مسؤول عن ذلك.

ولكن إذا تم نشر التطبيق الذي يصل إلى مجموعة ES للقراءة والكتابة داخل المجموعة ، فيمكن الحصول على الوصول إلى خدمة ElasticSearch على الموقع http: //elasticsearch.elasticsearch: 9200 .

عند توسيع عقد البيانات وعقد العميل ، ستتم إضافتها إلى نظام المجموعة تلقائيًا. (ابحث عن المعلم تحت السجلات)

root$ kubectl -n elasticsearch get pods -l role=data NAME READY STATUS RESTARTS AGE es-data-0 1/1 Running 0 48s es-data-1 1/1 Running 0 28s -------------------------------------------------------------------- root$ kubectl apply -f es-client.yml root$ kubectl -n elasticsearch get pods -l role=client NAME READY STATUS RESTARTS AGE es-client-69b84b46d8-kr7j4 1/1 Running 0 47s es-client-69b84b46d8-v5pj2 1/1 Running 0 47s -------------------------------------------------------------------- root$ kubectl -n elasticsearch get all NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE deploy/es-client 2 2 2 2 1m deploy/es-master 3 3 3 3 9m NAME DESIRED CURRENT READY AGE rs/es-client-69b84b46d8 2 2 2 1m rs/es-master-594b58b86c 3 3 3 9m NAME DESIRED CURRENT AGE statefulsets/es-data 2 2 3m NAME READY STATUS RESTARTS AGE po/es-client-69b84b46d8-kr7j4 1/1 Running 0 1m po/es-client-69b84b46d8-v5pj2 1/1 Running 0 1m po/es-data-0 1/1 Running 0 3m po/es-data-1 1/1 Running 0 3m po/es-master-594b58b86c-9jkj2 1/1 Running 0 9m po/es-master-594b58b86c-bj7g7 1/1 Running 0 9m po/es-master-594b58b86c-lfpps 1/1 Running 0 9m NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE svc/elasticsearch LoadBalancer 10.9.121.160 10.9.120.8 9200:32310/TCP 1m svc/elasticsearch-data ClusterIP None <none> 9300/TCP 3m svc/elasticsearch-discovery ClusterIP None <none> 9300/TCP 9m -------------------------------------------------------------------- #Check logs of es-master leader pod root$ kubectl -n elasticsearch logs po/es-master-594b58b86c-bj7g7 | grep ClusterApplierService [2018-10-21T07:41:53,731][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] new_master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300}, added {{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [1] source [zen-disco-elected-as-master ([1] nodes joined)[{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300}]]]) [2018-10-21T07:41:55,162][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [3] source [zen-disco-node-join[{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300}]]]) [2018-10-21T07:48:02,485][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [4] source [zen-disco-node-join[{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300}]]]) [2018-10-21T07:48:21,984][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [5] source [zen-disco-node-join[{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300}]]]) [2018-10-21T07:50:51,245][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [6] source [zen-disco-node-join[{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300}]]]) [2018-10-21T07:50:58,964][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [7] source [zen-disco-node-join[{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300}]]]) ] [ES-الماجستير-594b58b86c-bj7g7] وأضاف {{ES-الماجستير-594b58b86c-9jkj2} {} {x9Prp1VbTq6_kALQVNwIWg 7NHUSVpuS0mFDTXzAeKRcg} {} {10.9.125.81 10.9 root$ kubectl -n elasticsearch get pods -l role=data NAME READY STATUS RESTARTS AGE es-data-0 1/1 Running 0 48s es-data-1 1/1 Running 0 28s -------------------------------------------------------------------- root$ kubectl apply -f es-client.yml root$ kubectl -n elasticsearch get pods -l role=client NAME READY STATUS RESTARTS AGE es-client-69b84b46d8-kr7j4 1/1 Running 0 47s es-client-69b84b46d8-v5pj2 1/1 Running 0 47s -------------------------------------------------------------------- root$ kubectl -n elasticsearch get all NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE deploy/es-client 2 2 2 2 1m deploy/es-master 3 3 3 3 9m NAME DESIRED CURRENT READY AGE rs/es-client-69b84b46d8 2 2 2 1m rs/es-master-594b58b86c 3 3 3 9m NAME DESIRED CURRENT AGE statefulsets/es-data 2 2 3m NAME READY STATUS RESTARTS AGE po/es-client-69b84b46d8-kr7j4 1/1 Running 0 1m po/es-client-69b84b46d8-v5pj2 1/1 Running 0 1m po/es-data-0 1/1 Running 0 3m po/es-data-1 1/1 Running 0 3m po/es-master-594b58b86c-9jkj2 1/1 Running 0 9m po/es-master-594b58b86c-bj7g7 1/1 Running 0 9m po/es-master-594b58b86c-lfpps 1/1 Running 0 9m NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE svc/elasticsearch LoadBalancer 10.9.121.160 10.9.120.8 9200:32310/TCP 1m svc/elasticsearch-data ClusterIP None <none> 9300/TCP 3m svc/elasticsearch-discovery ClusterIP None <none> 9300/TCP 9m -------------------------------------------------------------------- #Check logs of es-master leader pod root$ kubectl -n elasticsearch logs po/es-master-594b58b86c-bj7g7 | grep ClusterApplierService [2018-10-21T07:41:53,731][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] new_master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300}, added {{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [1] source [zen-disco-elected-as-master ([1] nodes joined)[{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300}]]]) [2018-10-21T07:41:55,162][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [3] source [zen-disco-node-join[{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300}]]]) [2018-10-21T07:48:02,485][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [4] source [zen-disco-node-join[{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300}]]]) [2018-10-21T07:48:21,984][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [5] source [zen-disco-node-join[{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300}]]]) [2018-10-21T07:50:51,245][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [6] source [zen-disco-node-join[{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300}]]]) [2018-10-21T07:50:58,964][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [7] source [zen-disco-node-join[{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300}]]]) ] [ES-الماجستير-594b58b86c-bj7g7] وأضاف {{ES-data- root$ kubectl -n elasticsearch get pods -l role=data NAME READY STATUS RESTARTS AGE es-data-0 1/1 Running 0 48s es-data-1 1/1 Running 0 28s -------------------------------------------------------------------- root$ kubectl apply -f es-client.yml root$ kubectl -n elasticsearch get pods -l role=client NAME READY STATUS RESTARTS AGE es-client-69b84b46d8-kr7j4 1/1 Running 0 47s es-client-69b84b46d8-v5pj2 1/1 Running 0 47s -------------------------------------------------------------------- root$ kubectl -n elasticsearch get all NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE deploy/es-client 2 2 2 2 1m deploy/es-master 3 3 3 3 9m NAME DESIRED CURRENT READY AGE rs/es-client-69b84b46d8 2 2 2 1m rs/es-master-594b58b86c 3 3 3 9m NAME DESIRED CURRENT AGE statefulsets/es-data 2 2 3m NAME READY STATUS RESTARTS AGE po/es-client-69b84b46d8-kr7j4 1/1 Running 0 1m po/es-client-69b84b46d8-v5pj2 1/1 Running 0 1m po/es-data-0 1/1 Running 0 3m po/es-data-1 1/1 Running 0 3m po/es-master-594b58b86c-9jkj2 1/1 Running 0 9m po/es-master-594b58b86c-bj7g7 1/1 Running 0 9m po/es-master-594b58b86c-lfpps 1/1 Running 0 9m NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE svc/elasticsearch LoadBalancer 10.9.121.160 10.9.120.8 9200:32310/TCP 1m svc/elasticsearch-data ClusterIP None <none> 9300/TCP 3m svc/elasticsearch-discovery ClusterIP None <none> 9300/TCP 9m -------------------------------------------------------------------- #Check logs of es-master leader pod root$ kubectl -n elasticsearch logs po/es-master-594b58b86c-bj7g7 | grep ClusterApplierService [2018-10-21T07:41:53,731][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] new_master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300}, added {{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [1] source [zen-disco-elected-as-master ([1] nodes joined)[{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300}]]]) [2018-10-21T07:41:55,162][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [3] source [zen-disco-node-join[{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300}]]]) [2018-10-21T07:48:02,485][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [4] source [zen-disco-node-join[{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300}]]]) [2018-10-21T07:48:21,984][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [5] source [zen-disco-node-join[{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300}]]]) [2018-10-21T07:50:51,245][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [6] source [zen-disco-node-join[{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300}]]]) [2018-10-21T07:50:58,964][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [7] source [zen-disco-node-join[{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300}]]]) 10.9.125.82} {10.9.125.82 root$ kubectl -n elasticsearch get pods -l role=data NAME READY STATUS RESTARTS AGE es-data-0 1/1 Running 0 48s es-data-1 1/1 Running 0 28s -------------------------------------------------------------------- root$ kubectl apply -f es-client.yml root$ kubectl -n elasticsearch get pods -l role=client NAME READY STATUS RESTARTS AGE es-client-69b84b46d8-kr7j4 1/1 Running 0 47s es-client-69b84b46d8-v5pj2 1/1 Running 0 47s -------------------------------------------------------------------- root$ kubectl -n elasticsearch get all NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE deploy/es-client 2 2 2 2 1m deploy/es-master 3 3 3 3 9m NAME DESIRED CURRENT READY AGE rs/es-client-69b84b46d8 2 2 2 1m rs/es-master-594b58b86c 3 3 3 9m NAME DESIRED CURRENT AGE statefulsets/es-data 2 2 3m NAME READY STATUS RESTARTS AGE po/es-client-69b84b46d8-kr7j4 1/1 Running 0 1m po/es-client-69b84b46d8-v5pj2 1/1 Running 0 1m po/es-data-0 1/1 Running 0 3m po/es-data-1 1/1 Running 0 3m po/es-master-594b58b86c-9jkj2 1/1 Running 0 9m po/es-master-594b58b86c-bj7g7 1/1 Running 0 9m po/es-master-594b58b86c-lfpps 1/1 Running 0 9m NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE svc/elasticsearch LoadBalancer 10.9.121.160 10.9.120.8 9200:32310/TCP 1m svc/elasticsearch-data ClusterIP None <none> 9300/TCP 3m svc/elasticsearch-discovery ClusterIP None <none> 9300/TCP 9m -------------------------------------------------------------------- #Check logs of es-master leader pod root$ kubectl -n elasticsearch logs po/es-master-594b58b86c-bj7g7 | grep ClusterApplierService [2018-10-21T07:41:53,731][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] new_master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300}, added {{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [1] source [zen-disco-elected-as-master ([1] nodes joined)[{es-master-594b58b86c-lfpps}{wZQmXr5fSfWisCpOHBhaMg}{50jGPeKLSpO9RU_HhnVJCA}{10.9.124.81}{10.9.124.81:9300}]]]) [2018-10-21T07:41:55,162][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [3] source [zen-disco-node-join[{es-master-594b58b86c-9jkj2}{x9Prp1VbTq6_kALQVNwIWg}{7NHUSVpuS0mFDTXzAeKRcg}{10.9.125.81}{10.9.125.81:9300}]]]) [2018-10-21T07:48:02,485][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [4] source [zen-disco-node-join[{es-data-0}{SAOhUiLiRkazskZ_TC6EBQ}{qirmfVJBTjSBQtHZnz-QZw}{10.9.126.88}{10.9.126.88:9300}]]]) [2018-10-21T07:48:21,984][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [5] source [zen-disco-node-join[{es-data-1}{fiv5Wh29TRWGPumm5ypJfA}{EXqKGSzIQquRyWRzxIOWhQ}{10.9.125.82}{10.9.125.82:9300}]]]) [2018-10-21T07:50:51,245][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [6] source [zen-disco-node-join[{es-client-69b84b46d8-v5pj2}{MMjA_tlTS7ux-UW44i0osg}{rOE4nB_jSmaIQVDZCjP8Rg}{10.9.125.83}{10.9.125.83:9300}]]]) [2018-10-21T07:50:58,964][INFO ][oecsClusterApplierService] [es-master-594b58b86c-bj7g7] added {{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300},}, reason: apply cluster state (from master [master {es-master-594b58b86c-bj7g7}{1aFT97hQQ7yiaBc2CYShBA}{Q3QzlaG3QGazOwtUl7N75Q}{10.9.126.87}{10.9.126.87:9300} committed version [7] source [zen-disco-node-join[{es-client-69b84b46d8-kr7j4}{gGC7F4diRWy2oM1TLTvNsg}{IgI6g3iZT5Sa0HsFVMpvvw}{10.9.124.82}{10.9.124.82:9300}]]])

إظهار سجلات جراب الرئيسي الرئيسي بوضوح عند إضافة كل عقدة إلى الكتلة. هذا مفيد لمعرفة عند تصحيح الأخطاء.

لقد قمنا بنشر جميع المكونات ، والآن نحن بحاجة إلى التحقق من:

1) نشر Elasticsearch من كتلة Kubernetes باستخدام حاوية أوبونتو.

root$ kubectl run my-shell --rm -i --tty --image ubuntu -- bash root@my-shell-68974bb7f7-pj9x6:/# curl http://elasticsearch.elasticsearch:9200/_cluster/health?pretty { "cluster_name" : "my-es", "status" : "green", "timed_out" : false, "number_of_nodes" : 7, "number_of_data_nodes" : 2, "active_primary_shards" : 0, "active_shards" : 0, "relocating_shards" : 0, "initializing_shards" : 0, "unassigned_shards" : 0, "delayed_unassigned_shards" : 0, "number_of_pending_tasks" : 0, "number_of_in_flight_fetch" : 0, "task_max_waiting_in_queue_millis" : 0, "active_shards_percent_as_number" : 100.0 }

2) نشر Elasticsearch من خارج المجموعة عبر IP 10.9.120.8 الداخلي GCP (في حالتنا ، 10.9.120.8 ).

root$ curl http://10.9.120.8:9200/_cluster/health?pretty { "cluster_name" : "my-es", "status" : "green", "timed_out" : false, "number_of_nodes" : 7, "number_of_data_nodes" : 2, "active_primary_shards" : 0, "active_shards" : 0, "relocating_shards" : 0, "initializing_shards" : 0, "unassigned_shards" : 0, "delayed_unassigned_shards" : 0, "number_of_pending_tasks" : 0, "number_of_in_flight_fetch" : 0, "task_max_waiting_in_queue_millis" : 0, "active_shards_percent_as_number" : 100.0 }

3) قواعد مكافحة التقارب ل ES الموقد.

root$ kubectl -n elasticsearch get pods -o wide NAME READY STATUS RESTARTS AGE IP NODE es-client-69b84b46d8-kr7j4 1/1 Running 0 10m 10.8.14.52 gke-cluster1-pool1-d2ef2b34-t6h9 es-client-69b84b46d8-v5pj2 1/1 Running 0 10m 10.8.15.53 gke-cluster1-pool1-42b4fbc4-cncn es-data-0 1/1 Running 0 12m 10.8.16.58 gke-cluster1-pool1-4cfd808c-kpx1 es-data-1 1/1 Running 0 12m 10.8.15.52 gke-cluster1-pool1-42b4fbc4-cncn es-master-594b58b86c-9jkj2 1/1 Running 0 18m 10.8.15.51 gke-cluster1-pool1-42b4fbc4-cncn es-master-594b58b86c-bj7g7 1/1 Running 0 18m 10.8.16.57 gke-cluster1-pool1-4cfd808c-kpx1 es-master-594b58b86c-lfpps 1/1 Running 0 18m 10.8.14.51 gke-cluster1-pool1-d2ef2b34-t6h9

لاحظ أننا لا نملك اثنين من الموقد مماثلة على العقدة نفسها ، لذلك نحن ضمان موثوقية عالية في حالة فشل العقدة.

التحجيم

يمكننا نشر خدمات autoscaling لعقد العميل اعتمادًا على حد وحدة المعالجة المركزية. مثال HPA لعقدة العميل:

apiVersion: autoscaling/v1 kind: HorizontalPodAutoscaler metadata: name: es-client namespace: elasticsearch spec: maxReplicas: 5 minReplicas: 2 scaleTargetRef: apiVersion: extensions/v1beta1 kind: Deployment name: es-client targetCPUUtilizationPercentage: 80

يضيف القياس التلقائي قرونًا على عقدة العميل إلى الكتلة ، ويمكن ملاحظة ذلك في سجلات أي قرنة على العقدة الرئيسية.

بالنسبة للقرون على عقد البيانات ، تحتاج فقط إلى زيادة عدد النسخ المتماثلة في لوحة تحكم Kubernetes أو في وحدة التحكم GKE. ستتم إضافة عقدة البيانات التي تم إنشاؤها بنفسها إلى الكتلة وستبدأ في نسخ البيانات من العقد الأخرى.

لا أحتاج إلى التحجيم التلقائي على العقد الرئيسية - فهي تخزن فقط البيانات حول حالة الكتلة. ولكن إذا كنت ستقوم بإضافة عقد بيانات ، فتأكد من أن عدد العقد الرئيسية في الكتلة أمر غريب ، ولا تنسَ تغيير متغير NUMBER_OF_MASTERS للبيئة.

نشر Kibana و ES-HQ

كيبانا و ES-HQ

Kibana هي أداة بسيطة لتصور بيانات ES ، ويساعد ES-HQ في إدارة ومراقبة مجموعة Elasticsearch. عند نشر Kibana و ES-HQ ، تذكر ما يلي:

- نقوم بتمرير اسم كتلة ES إلى صورة Docker كمتغير بيئة.

- تظل خدمة الوصول إلى نشر Kibana / ES-HQ داخل الشركة ، أي أنه لم يتم إنشاء عنوان IP عام. نحن نستخدم موازن تحميل GCP الداخلي.

نشر كيبانا

kind: Namespace metadata: name: elasticsearch --- apiVersion: apps/v1beta1 kind: Deployment metadata: name: es-kibana namespace: elasticsearch labels: component: elasticsearch role: kibana spec: replicas: 1 template: metadata: labels: component: elasticsearch role: kibana spec: containers: - name: es-kibana image: docker.elastic.co/kibana/kibana-oss:6.2.2 env: - name: CLUSTER_NAME value: my-es - name: ELASTICSEARCH_URL value: http://elasticsearch:9200 resources: limits: cpu: 0.5 ports: - containerPort: 5601 name: http --- apiVersion: v1 kind: Service metadata: name: kibana annotations: cloud.google.com/load-balancer-type: "Internal" namespace: elasticsearch labels: component: elasticsearch role: kibana spec: selector: component: elasticsearch role: kibana ports: - name: http port: 80 targetPort: 5601 protocol: TCP type: LoadBalancer view rawes-kibana.yml hosted with love by GitHub

(خدمة النشر و Kibana)

نشر ES-HQ

kind: Namespace metadata: name: elasticsearch --- apiVersion: apps/v1beta1 kind: Deployment metadata: name: es-hq namespace: elasticsearch labels: component: elasticsearch role: hq spec: replicas: 1 template: metadata: labels: component: elasticsearch role: hq spec: containers: - name: es-hq image: elastichq/elasticsearch-hq:release-v3.4.0 env: - name: HQ_DEFAULT_URL value: http://elasticsearch:9200 resources: limits: cpu: 0.5 ports: - containerPort: 5000 name: http --- apiVersion: v1 kind: Service metadata: name: hq annotations: cloud.google.com/load-balancer-type: "Internal" namespace: elasticsearch labels: component: elasticsearch role: hq spec: selector: component: elasticsearch role: hq ports: - name: http port: 80 targetPort: 5000 protocol: TCP type: LoadBalancer view rawes-hq.yml hosted with love by GitHub

(نشر وخدمة ES-HQ)

نحن الوصول إلى كل الخدمات من خلال الموازن الداخلي الذي تم إنشاؤه.

root$ kubectl -n elasticsearch get svc -l role=kibana NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE kibana LoadBalancer 10.9.121.246 10.9.120.10 80:31400/TCP 1m root$ kubectl -n elasticsearch get svc -l role=hq NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE hq LoadBalancer 10.9.121.150 10.9.120.9 80:31499/TCP 1m

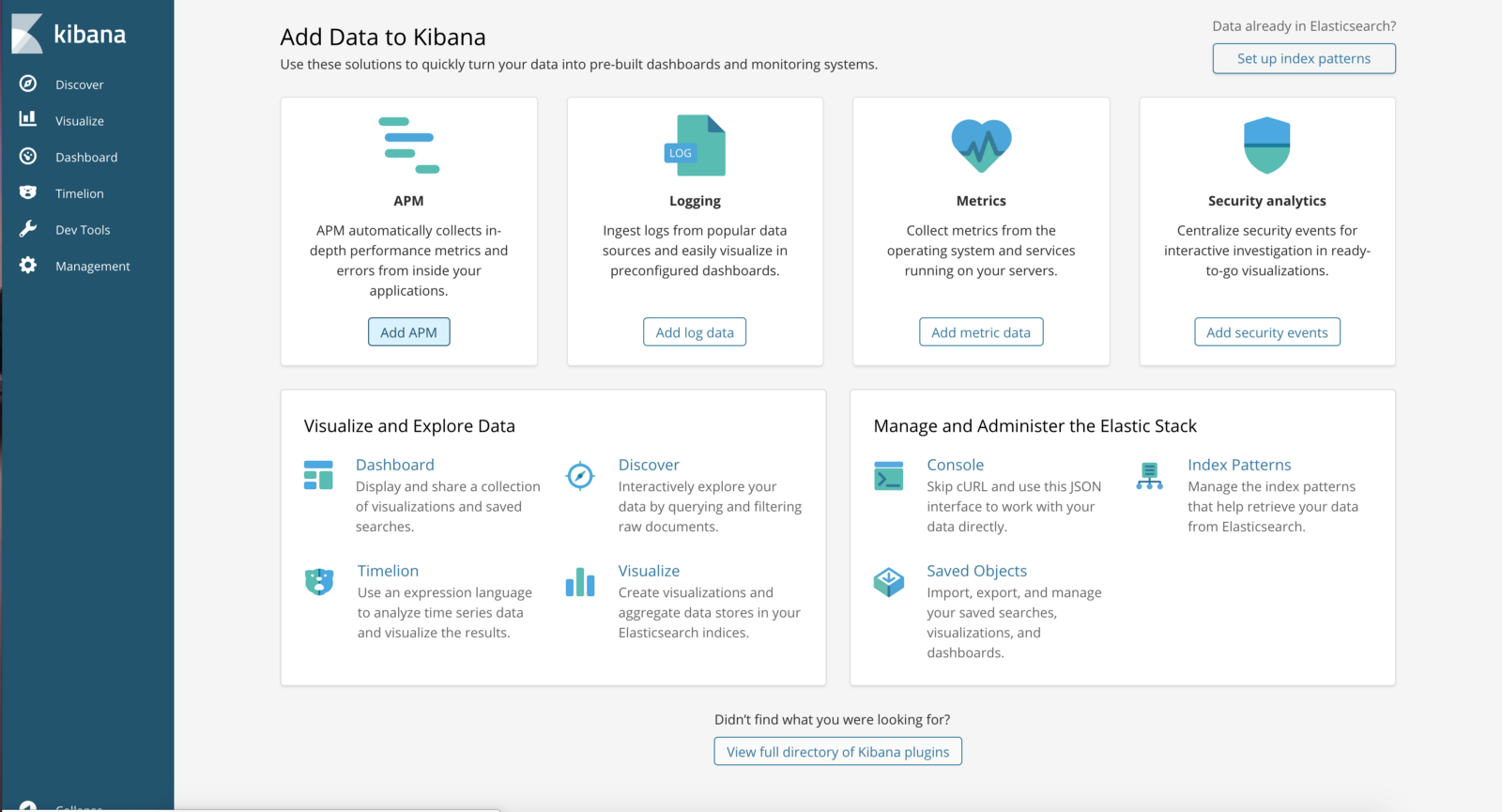

http: // <External-Ip-Kibana-Service> / app / kibana # / home؟ _g = ()

(لوحة التحكم Kibana)

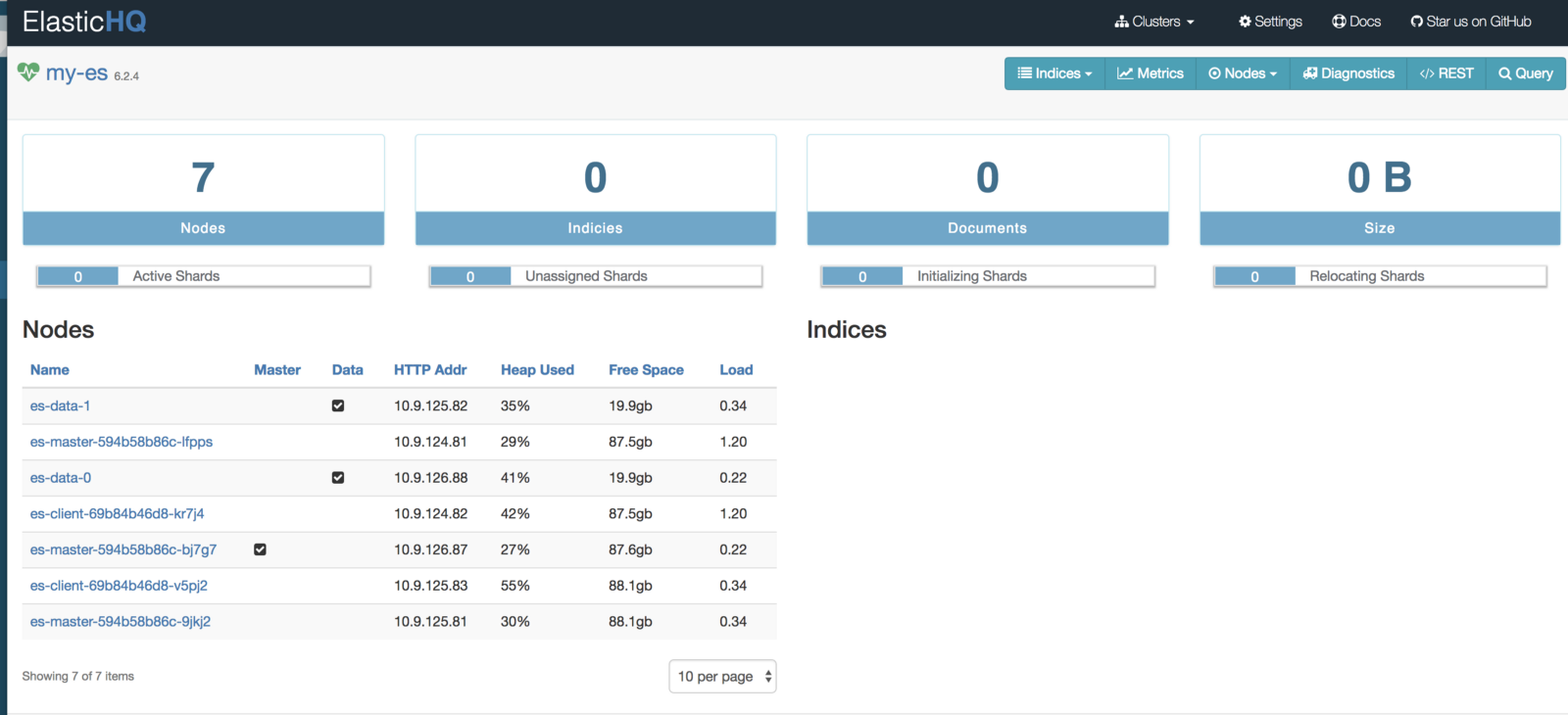

http: // <External-Ip-ES-Hq-Service> / #! / clusters / my-es

(لوحة التحكم ElasticHQ لرصد وإدارة الكتلة)

يعد ES أحد أكثر أنظمة البحث والتحليل الموزعة شيوعًا ، وفي Kubernetes يحل المشكلات الرئيسية المتمثلة في التوسع والإتاحة العالية. بالإضافة إلى ذلك ، يتم نشر مجموعات ES جديدة في Kubernetes على الفور.