Translator's note: The author of this article, Erkan Erol, an engineer at SAP, shares his study of how the kubectl exec command works, a command familiar to everyone who works with Kubernetes. He accompanies the whole walkthrough with listings from the source code of Kubernetes (and related projects), so you can dig into the topic as deeply as you need.

One Friday, a colleague came up to me and asked how to run a command in a pod using client-go. I could not answer, and suddenly realized that I knew nothing about how kubectl exec works. Yes, I had a rough idea of how it was put together, but I was not 100% sure it was correct, so I decided to look into it. After studying blogs, documentation, and source code, I learned a lot of new things, and in this article I want to share my findings and understanding. If anything is wrong, please contact me on Twitter.

Preparation

To create a cluster on my MacBook, I cloned ecomm-integration-ballerina/kubernetes-cluster. I then corrected the node IP addresses in the kubelet configuration, because the default settings did not allow kubectl exec to work. You can read more about the main reason for this here.

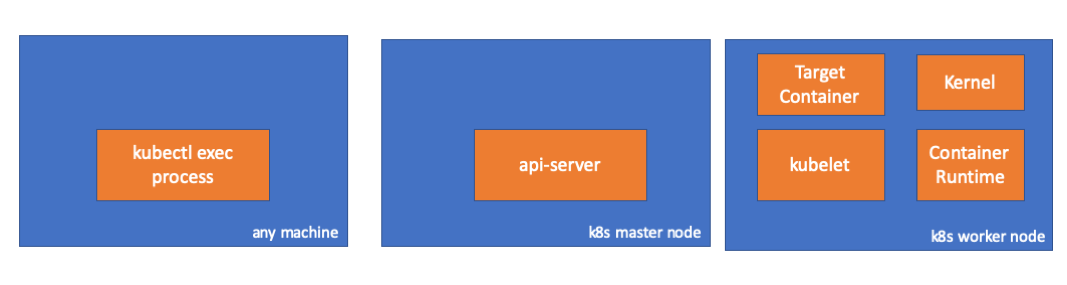

- Any machine = my MacBook

- Master IP = 192.168.205.10

- Worker node IP = 192.168.205.11

- API server port = 6443

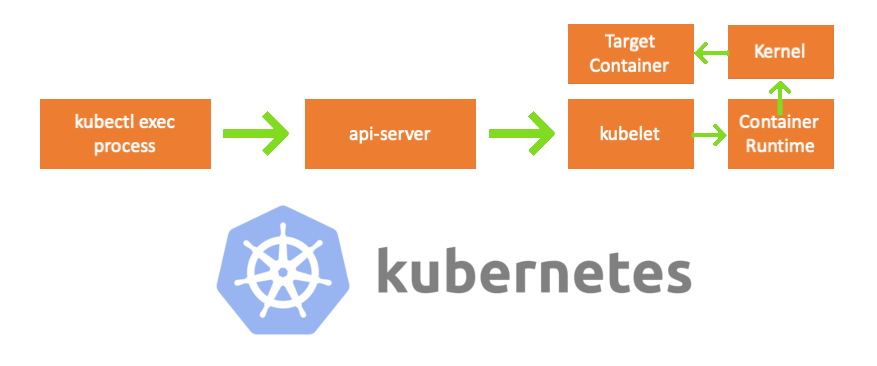

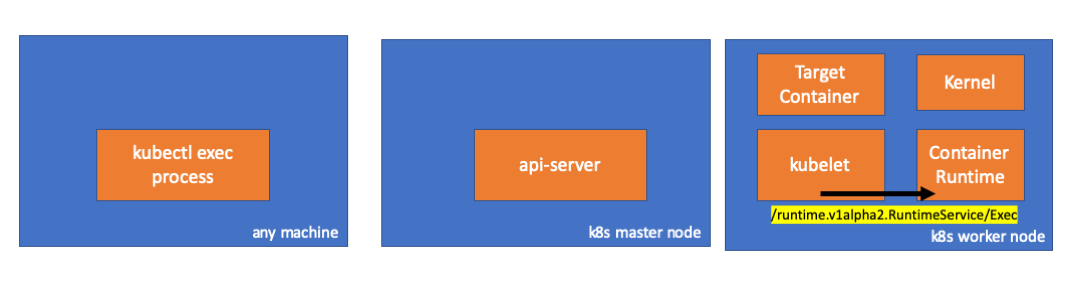

Components

- kubectl exec process: when we run "kubectl exec ...", a process is started. You can do this on any machine that has access to the K8s API server. Translator's note: in the console listings below, the author uses the comment "any machine" to indicate that the commands that follow can be run on any such machine with access to Kubernetes.

- api server: the component on the master that exposes the Kubernetes API. It is the front end of the Kubernetes control plane.

- kubelet: an agent that runs on every node in the cluster. It makes sure that containers are running in a pod.

- container runtime: the software responsible for running containers. Examples: Docker, CRI-O, containerd...

- kernel: the OS kernel on the worker node; it is responsible for process management.

- target container: a container that is part of a pod and runs on one of the worker nodes.

What I found

1. Client-side activity

Create a pod in the default namespace:

    // any machine
    $ kubectl run exec-test-nginx --image=nginx

Then we run the exec command and, inside the shell it opens, start sleep 5000 so that the session stays open for further observation:

    // any machine
    $ kubectl exec -it exec-test-nginx-6558988d5-fgxgg -- sh

A kubectl process appears (with pid=8507 in our case):

    // any machine
    $ ps -ef |grep kubectl
    501 8507 8409 0 7:19PM ttys000 0:00.13 kubectl exec -it exec-test-nginx-6558988d5-fgxgg -- sh

If we check the network activity of this process, we find that it has connections to the api-server (192.168.205.10.6443):

    // any machine
    $ netstat -atnv |grep 8507
    tcp4 0 0 192.168.205.1.51673 192.168.205.10.6443 ESTABLISHED 131072 131768 8507 0 0x0102 0x00000020
    tcp4 0 0 192.168.205.1.51672 192.168.205.10.6443 ESTABLISHED 131072 131768 8507 0 0x0102 0x00000028

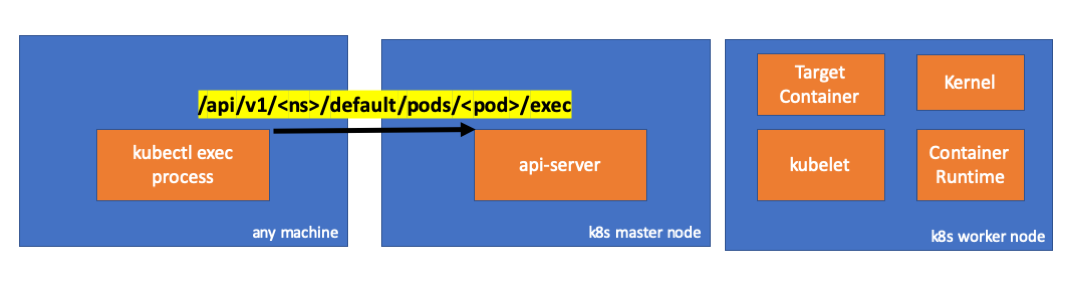

Let's look at the code. kubectl builds a POST request for the exec subresource and sends it as a REST request:

    req := restClient.Post().
        Resource("pods").
        Name(pod.Name).
        Namespace(pod.Namespace).
        SubResource("exec")
    req.VersionedParams(&corev1.PodExecOptions{
        Container: containerName,
        Command:   p.Command,
        Stdin:     p.Stdin,
        Stdout:    p.Out != nil,
        Stderr:    p.ErrOut != nil,
        TTY:       t.Raw,
    }, scheme.ParameterCodec)

    return p.Executor.Execute("POST", req.URL(), p.Config, p.In, p.Out, p.ErrOut, t.Raw, sizeQueue)

(kubectl/pkg/cmd/exec/exec.go)
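To make the shape of that request more tangible, here is a minimal sketch that assembles a comparable URL with nothing but the Go standard library. It is not how client-go does it internally: the helper name execURL is hypothetical, and the query keys are assumed to follow the JSON field names of PodExecOptions (container, command, stdin, stdout, tty).

```go
package main

import (
	"fmt"
	"net/url"
)

// execURL is a hypothetical helper that builds the URL of the pod "exec"
// subresource. The query keys are assumed to mirror the PodExecOptions fields.
func execURL(apiServer, namespace, pod, container string, command []string, tty bool) string {
	q := url.Values{}
	q.Set("container", container)
	for _, arg := range command {
		q.Add("command", arg) // one "command" key per argument
	}
	q.Set("stdin", "true")
	q.Set("stdout", "true")
	if tty {
		q.Set("tty", "true")
	}
	u := url.URL{
		Scheme:   "https",
		Host:     apiServer,
		Path:     fmt.Sprintf("/api/v1/namespaces/%s/pods/%s/exec", namespace, pod),
		RawQuery: q.Encode(), // Encode sorts the keys alphabetically
	}
	return u.String()
}

func main() {
	// Values taken from the session shown in this article.
	fmt.Println(execURL("192.168.205.10:6443", "default",
		"exec-test-nginx-6558988d5-fgxgg", "exec-test-nginx", []string{"sh"}, true))
}
```

The path printed by this sketch matches the one that shows up in the api-server log in the next section; the actual streaming is then negotiated on top of this request.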

2. Activity on the master node

We can also observe the request on the api-server side:

    handler.go:143] kube-apiserver: POST "/api/v1/namespaces/default/pods/exec-test-nginx-6558988d5-fgxgg/exec" satisfied by gorestful with webservice /api/v1
    upgradeaware.go:261] Connecting to backend proxy (intercepting redirects) https://192.168.205.11:10250/exec/default/exec-test-nginx-6558988d5-fgxgg/exec-test-nginx?command=sh&input=1&output=1&tty=1
    Headers: map[Connection:[Upgrade] Content-Length:[0] Upgrade:[SPDY/3.1] User-Agent:[kubectl/v1.12.10 (darwin/amd64) kubernetes/e3c1340] X-Forwarded-For:[192.168.205.1] X-Stream-Protocol-Version:[v4.channel.k8s.io v3.channel.k8s.io v2.channel.k8s.io channel.k8s.io]]
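The headers in this log are what turn an ordinary POST into a request to switch protocols. As a rough sketch, the same headers can be set on a plain net/http request. The helper name newUpgradeRequest is hypothetical, the request is only built and never sent, and the real client relies on a dedicated SPDY round tripper rather than on code like this.

```go
package main

import (
	"fmt"
	"net/http"
)

// newUpgradeRequest is a hypothetical helper that prepares (but does not send)
// a POST asking the server to switch protocols, mirroring the logged headers.
func newUpgradeRequest(target string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodPost, target, nil)
	if err != nil {
		return nil, err
	}
	req.Header.Set("Connection", "Upgrade")
	req.Header.Set("Upgrade", "SPDY/3.1")
	// Offered stream protocol versions, in the order the log shows them.
	for _, v := range []string{"v4.channel.k8s.io", "v3.channel.k8s.io", "v2.channel.k8s.io", "channel.k8s.io"} {
		req.Header.Add("X-Stream-Protocol-Version", v)
	}
	return req, nil
}

func main() {
	req, err := newUpgradeRequest("https://192.168.205.10:6443/api/v1/namespaces/default/pods/exec-test-nginx-6558988d5-fgxgg/exec?command=sh")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.Path)
	for _, name := range []string{"Connection", "Upgrade", "X-Stream-Protocol-Version"} {
		fmt.Println(name+":", req.Header.Values(name))
	}
}
```

A server that accepts such a request answers with HTTP status 101 (Switching Protocols), after which the same TCP connection carries the multiplexed streams instead of ordinary HTTP traffic.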

Note that the HTTP request includes a protocol upgrade request. SPDY makes it possible to multiplex the individual stdin/stdout/stderr/spdy-error streams over a single TCP connection.

The API server receives the request and converts it into PodExecOptions:

(pkg/apis/core/types.go)

To perform the required actions, the api-server needs to know which pod it has to contact:

(pkg/registry/core/pod/strategy.go)

Of course, the endpoint data is taken from the node information:

nodeName := types.NodeName(pod.Spec.NodeName) if len(nodeName) == 0 {

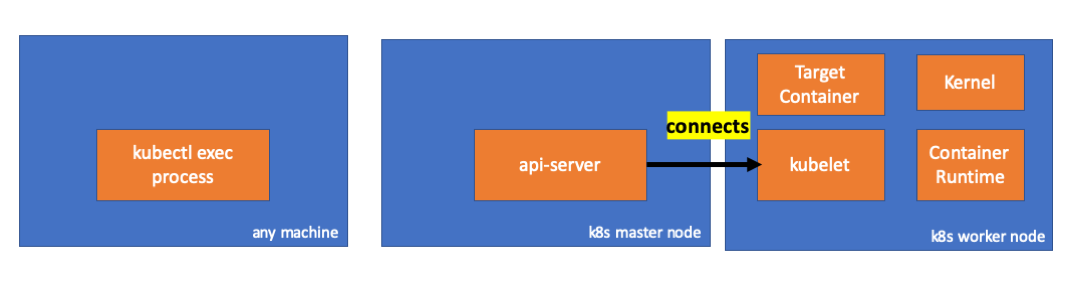

(pkg/registry/core/pod/strategy.go)

Hooray! The kubelet has a port (node.Status.DaemonEndpoints.KubeletEndpoint.Port) that the API server can connect to:

(pkg/kubelet/client/kubelet_client.go)

From the documentation, Master-Node Communication > Master to Cluster > apiserver to kubelet:

These connections terminate at the kubelet's HTTPS endpoint. By default, the apiserver does not verify the kubelet's serving certificate, which makes the connection subject to man-in-the-middle (MITM) attacks, and unsafe to run over untrusted and/or public networks.

Now the API server knows the endpoint and establishes the connection:

(pkg/registry/core/pod/rest/subresources.go)

Let's see what happens on the master node.

First, we find out the IP of the worker node. In our case it is 192.168.205.11:

    // any machine
    $ kubectl get nodes k8s-node-1 -o wide
    NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
    k8s-node-1 Ready <none> 9h v1.15.3 192.168.205.11 <none> Ubuntu 16.04.6 LTS 4.4.0-159-generic docker://17.3.3

Then we determine the kubelet port (10250 in our case):

    // any machine
    $ kubectl get nodes k8s-node-1 -o jsonpath='{.status.daemonEndpoints.kubeletEndpoint}'
    map[Port:10250]
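With the node IP and the kubelet port in hand, the backend URL from the api-server log can be reassembled. The sketch below only illustrates how the pieces fit together: kubeletExecURL is a hypothetical helper, and the path layout and query keys (command, input, output, tty) are copied from the logged URL rather than from the Kubernetes source.

```go
package main

import (
	"fmt"
	"net"
	"net/url"
	"strconv"
)

// kubeletExecURL is a hypothetical helper that rebuilds the kubelet exec URL
// seen in the api-server log from the node IP, kubelet port and pod details.
func kubeletExecURL(nodeIP string, port int, namespace, pod, container string, command []string) string {
	q := url.Values{}
	for _, arg := range command {
		q.Add("command", arg)
	}
	// Flag names and values as they appear in the logged URL.
	q.Set("input", "1")
	q.Set("output", "1")
	q.Set("tty", "1")
	u := url.URL{
		Scheme:   "https",
		Host:     net.JoinHostPort(nodeIP, strconv.Itoa(port)),
		Path:     "/exec/" + namespace + "/" + pod + "/" + container,
		RawQuery: q.Encode(),
	}
	return u.String()
}

func main() {
	fmt.Println(kubeletExecURL("192.168.205.11", 10250, "default",
		"exec-test-nginx-6558988d5-fgxgg", "exec-test-nginx", []string{"sh"}))
}
```

For the values used in this article, the sketch reproduces the URL that upgradeaware.go logged when it connected to the backend.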

Now it is time to check the network. Is there a connection to the worker node (192.168.205.11)? There is! If you kill the exec process, it disappears, so I know that the connection was established by the api-server as a result of the exec command.

    // master node
    $ netstat -atn |grep 192.168.205.11
    tcp 0 0 192.168.205.10:37870 192.168.205.11:10250 ESTABLISHED
    …

The connection between kubectl and the api-server is still open. In addition, there is another connection, between the api-server and the kubelet.

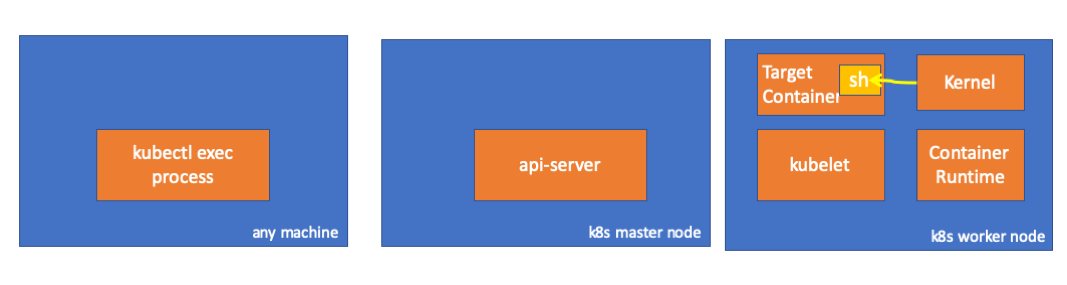
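At this point the api-server sits between two open connections and passes bytes from one to the other. The following is a minimal sketch of that relaying idea, using in-memory pipes instead of real sockets. All names are hypothetical, and the real upgrade-aware proxy in the api-server does considerably more (TLS, header handling, error reporting).

```go
package main

import (
	"fmt"
	"io"
	"net"
	"strings"
	"sync"
)

// relay copies bytes in both directions until either side closes.
func relay(front, back net.Conn) {
	var wg sync.WaitGroup
	wg.Add(2)
	go func() {
		defer wg.Done()
		io.Copy(back, front) // client -> backend
		back.Close()
	}()
	go func() {
		defer wg.Done()
		io.Copy(front, back) // backend -> client
		front.Close()
	}()
	wg.Wait()
}

// roundTrip wires client <-> relay <-> fake backend with in-memory pipes and
// returns what the client reads back after sending msg.
func roundTrip(msg string) string {
	client, front := net.Pipe()
	back, backend := net.Pipe()
	go relay(front, back)

	// Fake backend standing in for the kubelet: upper-cases one message.
	go func() {
		buf := make([]byte, 256)
		n, err := backend.Read(buf)
		if err != nil {
			return
		}
		backend.Write([]byte(strings.ToUpper(string(buf[:n]))))
	}()

	client.Write([]byte(msg))
	buf := make([]byte, 256)
	n, _ := client.Read(buf)
	client.Close()
	return string(buf[:n])
}

func main() {
	fmt.Println(roundTrip("echo hello"))
}
```

The client never talks to the backend directly, yet it sees the backend's reply, which is the same relationship kubectl has with the kubelet during an exec session.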

3. Activity on the worker node

Now let's connect to the worker node and see what is happening there.

First, we see that the connection is established on this side too (second line); 192.168.205.10 is the IP of the master node:

    // worker node
    $ netstat -atn |grep 10250
    tcp6 0 0 :::10250 :::* LISTEN
    tcp6 0 0 192.168.205.11:10250 192.168.205.10:37870 ESTABLISHED

What about our sleep command? Hooray, it is here too!

    // worker node
    $ ps -afx
    ...
    31463 ? Sl 0:00 \_ docker-containerd-shim 7d974065bbb3107074ce31c51f5ef40aea8dcd535ae11a7b8f2dd180b8ed583a /var/run/docker/libcontainerd/7d974065bbb3107074ce31c51
    31478 pts/0 Ss 0:00 \_ sh
    31485 pts/0 S+ 0:00 \_ sleep 5000
    …

But wait: how did the kubelet pull this off? The kubelet has a daemon that serves an API on a port for requests from the api-server:

(pkg/kubelet/server/streaming/server.go)

The kubelet computes the response endpoint for exec requests:

    func (s *server) GetExec(req *runtimeapi.ExecRequest) (*runtimeapi.ExecResponse, error) {
        if err := validateExecRequest(req); err != nil {
            return nil, err
        }
        token, err := s.cache.Insert(req)
        if err != nil {
            return nil, err
        }
        return &runtimeapi.ExecResponse{
            Url: s.buildURL("exec", token),
        }, nil
    }

(pkg/kubelet/server/streaming/server.go)

Don't be confused: it returns not the result of the command, but an endpoint for communication:

    type ExecResponse struct {

(cri-api/pkg/apis/runtime/v1alpha2/api.pb.go)

The kubelet implements the RuntimeServiceClient interface, which is part of the Container Runtime Interface (the long listing from cri-api in kubernetes/kubernetes is omitted here). It simply uses gRPC to call the method through the Container Runtime Interface:

    type runtimeServiceClient struct {
        cc *grpc.ClientConn
    }

(cri-api/pkg/apis/runtime/v1alpha2/api.pb.go)

    func (c *runtimeServiceClient) Exec(ctx context.Context, in *ExecRequest, opts ...grpc.CallOption) (*ExecResponse, error) {
        out := new(ExecResponse)
        err := c.cc.Invoke(ctx, "/runtime.v1alpha2.RuntimeService/Exec", in, out, opts...)
        if err != nil {
            return nil, err
        }
        return out, nil
    }

(cri-api/pkg/apis/runtime/v1alpha2/api.pb.go)

The container runtime is responsible for implementing RuntimeServiceServer (again, the long listing from cri-api in kubernetes/kubernetes is omitted here).
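Going back to GetExec for a moment: the point that it returns an endpoint rather than the command's output is easier to see in a toy version. The sketch below is a simplified illustration under stated assumptions, not the kubelet's request cache. The types execRequest and tokenCache are hypothetical, and expiry of unused tokens is left out entirely.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"errors"
	"fmt"
	"sync"
)

// execRequest is a hypothetical, trimmed-down stand-in for a CRI exec request.
type execRequest struct {
	ContainerID string
	Cmd         []string
}

// tokenCache hands out single-use tokens for pending requests.
type tokenCache struct {
	mu      sync.Mutex
	pending map[string]execRequest
}

func newTokenCache() *tokenCache {
	return &tokenCache{pending: make(map[string]execRequest)}
}

// Insert stores the request and returns a random token that identifies it.
func (c *tokenCache) Insert(req execRequest) (string, error) {
	raw := make([]byte, 8)
	if _, err := rand.Read(raw); err != nil {
		return "", err
	}
	token := hex.EncodeToString(raw)
	c.mu.Lock()
	defer c.mu.Unlock()
	c.pending[token] = req
	return token, nil
}

// Consume returns the stored request and invalidates the token.
func (c *tokenCache) Consume(token string) (execRequest, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	req, ok := c.pending[token]
	if !ok {
		return execRequest{}, errors.New("unknown or already used token")
	}
	delete(c.pending, token)
	return req, nil
}

func main() {
	cache := newTokenCache()
	token, err := cache.Insert(execRequest{ContainerID: "example-container", Cmd: []string{"sh"}})
	if err != nil {
		panic(err)
	}
	fmt.Println("stream at: /exec/" + token) // what the caller gets back: a URL, not output

	req, _ := cache.Consume(token) // later, the streaming connection redeems the token
	fmt.Println("running:", req.Cmd)

	_, err = cache.Consume(token)
	fmt.Println("second use:", err)
}
```

Nothing has been executed by the time the URL is handed back; the command only runs once a streaming connection arrives at that URL and the token is redeemed.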

If so, we should see a connection between the kubelet and the container runtime, right? Let's check.

Run this command before and after the exec command and compare the output. In my case, the difference is this:

    // worker node
    $ ss -a -p |grep kubelet
    ...
    u_str ESTAB 0 0 * 157937 * 157387 users:(("kubelet",pid=5714,fd=33))
    ...

Hmm... A new connection over a unix socket between the kubelet (pid=5714) and something unknown. What could it be? That's right, it's Docker (pid=1186)!

    // worker node
    $ ss -a -p |grep 157387
    ...
    u_str ESTAB 0 0 * 157937 * 157387 users:(("kubelet",pid=5714,fd=33))
    u_str ESTAB 0 0 /var/run/docker.sock 157387 * 157937 users:(("dockerd",pid=1186,fd=14))
    ...

As you remember, it is the docker daemon process (pid=1186) that executes our command:

    // worker node
    $ ps -afx
    ...
    1186 ? Ssl 0:55 /usr/bin/dockerd -H fd://
    17784 ? Sl 0:00 \_ docker-containerd-shim 53a0a08547b2f95986402d7f3b3e78702516244df049ba6c5aa012e81264aa3c /var/run/docker/libcontainerd/53a0a08547b2f95986402d7f3
    17801 pts/2 Ss 0:00 \_ sh
    17827 pts/2 S+ 0:00 \_ sleep 5000
    ...

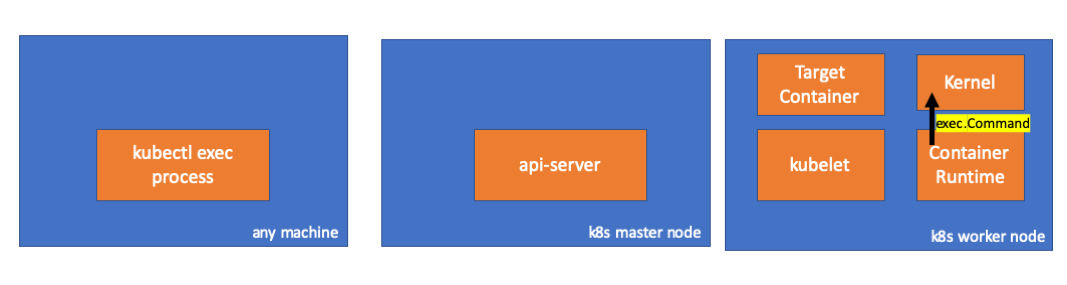

4. Activity in the container runtime

Let's examine the CRI-O source code to understand what is happening. The logic in Docker is similar.

There is a server responsible for implementing RuntimeServiceServer:

(cri-o/server/server.go)

(cri-o/server/container_exec.go)

At the end of the chain, the container runtime executes the command on the worker node:

(cri-o/internal/oci/runtime_oci.go)

Finally, the kernel executes the command.
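As a very rough illustration of this last hop, the sketch below starts a child process from Go and collects its output, which is the point where a user-space program asks the kernel to create and run a process. This is not CRI-O's code: runCommand is a hypothetical helper, it assumes a POSIX sh is available, and a real runtime additionally places the process inside the container's namespaces and cgroups, which this sketch does not attempt.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
	"os/exec"
)

// runCommand starts argv as a child process, waits for it to finish and
// returns whatever it wrote to standard output.
func runCommand(argv ...string) (string, error) {
	if len(argv) == 0 {
		return "", errors.New("no command given")
	}
	cmd := exec.Command(argv[0], argv[1:]...)
	var stdout bytes.Buffer
	cmd.Stdout = &stdout
	err := cmd.Run() // the kernel creates the process and runs the program
	return stdout.String(), err
}

func main() {
	out, err := runCommand("sh", "-c", "echo hello from a child process")
	if err != nil {
		panic(err)
	}
	fmt.Print(out)
}
```

In the exec session from this article, the equivalent of stdout here is one of the streams that travels back through the container runtime, the kubelet and the api-server to kubectl.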

Reminders

- The API server can also initiate a connection to the kubelet.

- The following connections are kept open until the interactive exec session ends:

  - between kubectl and the api-server;

  - between the api-server and the kubelet;

  - between the kubelet and the container runtime.

- Neither kubectl nor the api-server can run anything on the worker nodes. The kubelet can, but even it has to go through the container runtime to do so.
