在上一篇文章中 ,介绍了NAS软件平台的设计。

现在是实现它的时候了。

检查一下

在开始之前,请确保检查池的运行状况:

zpool status -v

池及其中的所有磁盘必须处于联机状态。

此外,我假设在上一个阶段,所有操作都是根据说明进行的 ,并且可以正常工作,或者您自己很了解自己在做什么。

便利设施

首先,如果您从一开始就没有这样做,那么值得进行便利的管理。

要求:

- SSH服务器:

apt-get install openssh-server 。 如果您不知道如何配置SSH, 在Linux上做NAS还为时过早 您可以在本文中阅读其使用功能,然后使用其中一本手册 。 - tmux或屏幕 :

apt-get install tmux 。 在通过SSH登录并使用多个窗口时保存会话。

安装SSH之后,您需要添加一个用户,以便不以root身份通过SSH登录(默认情况下,输入已禁用,您无需启用它):

zfs create rpool/home/user adduser user cp -a /etc/skel/.[!.]* /home/user chown -R user:user /home/user

对于远程管理,这是足够的最低要求。

不过,尽管您需要保持键盘和显示器的连接, 您还需要在更新内核时重新启动,以确保加载后一切都能正常运行。

一种替代方法是使用提供IME的 Virtual KVM。 那里有一个控制台,尽管就我而言,它是作为Java applet实现的,但不是很方便。

客制化

缓存准备

据您所记得,在我描述的配置中,L2ARC下有一个单独的SSD,尚未使用,但用于“增长”。

可选,但是建议用随机数据填充此SSD(对于Samsung EVO,在blkdiscard之后将用零填充,但无论如何不是在所有SSD上填充):

dd if=/dev/urandom of=/dev/disk/by-id/ata-Samsung_SSD_850_EVO bs=4M && blkdiscard /dev/disk/by-id/ata-Samsung_SSD_850_EVO

禁用日志压缩

在ZFS上,已经使用了压缩,因为通过gzip进行日志压缩显然是多余的。

关闭:

for file in /etc/logrotate.d/* ; do if grep -Eq "(^|[^#y])compress" "$file" ; then sed -i -r "s/(^|[^#y])(compress)/\1#\2/" "$file" fi done

系统更新

这里的一切都很简单:

apt-get dist-upgrade --yes reboot

为新状态创建快照

重新启动后,为了修复新的工作状态,您需要重写第一个快照:

zfs destroy rpool/ROOT/debian@install zfs snapshot rpool/ROOT/debian@install

文件系统组织

为SLOG分区

为了获得正常的ZFS性能,要做的第一件事是将SLOG放在SSD上。

让我提醒您,使用的配置中的SLOG在两个SSD上重复:为此,将在每个SSD的第4部分的顶部创建LUKS-XTS上的设备:

dd if=/dev/urandom of=/etc/keys/slog.key bs=1 count=4096 cryptsetup --verbose --cipher "aes-xts-plain64:sha512" --key-size 512 --key-file /etc/keys/slog.key luksFormat /dev/disk/by-id/ata-Samsung_SSD_850_PRO-part4 cryptsetup --verbose --cipher "aes-xts-plain64:sha512" --key-size 512 --key-file /etc/keys/slog.key luksFormat /dev/disk/by-id/ata-Micron_1100-part4 echo "slog0_crypt1 /dev/disk/by-id/ata-Samsung_SSD_850_PRO-part4 /etc/keys/slog.key luks,discard" >> /etc/crypttab echo "slog0_crypt2 /dev/disk/by-id/ata-Micron_1100-part4 /etc/keys/slog.key luks,discard" >> /etc/crypttab

为L2ARC和交换分区

首先,您需要在swap和l2arc下创建分区:

sgdisk -n1:0:48G -t1:8200 -c1:part_swap -n2::196G -t2:8200 -c2:part_l2arc /dev/disk/by-id/ata-Samsung_SSD_850_EVO

交换分区和L2ARC将使用随机密钥加密,如下所示 重新启动后,不需要它们,并且始终可以重新创建它们。

因此,在crypttab中,编写了一行以纯模式加密/解密分区:

echo swap_crypt /dev/disk/by-id/ata-Samsung_SSD_850_EVO-part1 /dev/urandom swap,cipher=aes-xts-plain64:sha512,size=512 >> /etc/crypttab echo l2arc_crypt /dev/disk/by-id/ata-Samsung_SSD_850_EVO-part2 /dev/urandom cipher=aes-xts-plain64:sha512,size=512 >> /etc/crypttab

然后,您需要重新启动守护程序并启用交换:

echo 'vm.swappiness = 10' >> /etc/sysctl.conf sysctl vm.swappiness=10 systemctl daemon-reload systemctl start systemd-cryptsetup@swap_crypt.service echo /dev/mapper/swap_crypt none swap sw,discard 0 0 >> /etc/fstab swapon -av

因为 swapiness上未积极使用swapiness ; swapiness参数(默认值为60)必须设置为10。

L2ARC在此阶段尚未使用,但是它的部分已经准备就绪:

$ ls /dev/mapper/ control l2arc_crypt root_crypt1 root_crypt2 slog0_crypt1 slog0_crypt2 swap_crypt tank0_crypt0 tank0_crypt1 tank0_crypt2 tank0_crypt3

水池槽N

将描述tank0池的创建,类推地创建tank0 。

为了不手动创建相同的分区并避免错误,我编写了一个脚本来创建池的加密分区:

现在,使用此脚本,您需要创建一个用于存储数据的池:

./create_crypt_pool.sh zpool create -o ashift=12 -O atime=off -O compression=lz4 -O normalization=formD tank0 raidz1 /dev/disk/by-id/dm-name-tank0_crypt*

有关ashift=12参数的注释,请参阅我以前的 文章和有关它们的评论。

创建池后,我将其日志放到SSD上:

zpool add tank0 log mirror /dev/disk/by-id/dm-name-slog0_crypt1 /dev/disk/by-id/dm-name-slog0_crypt2

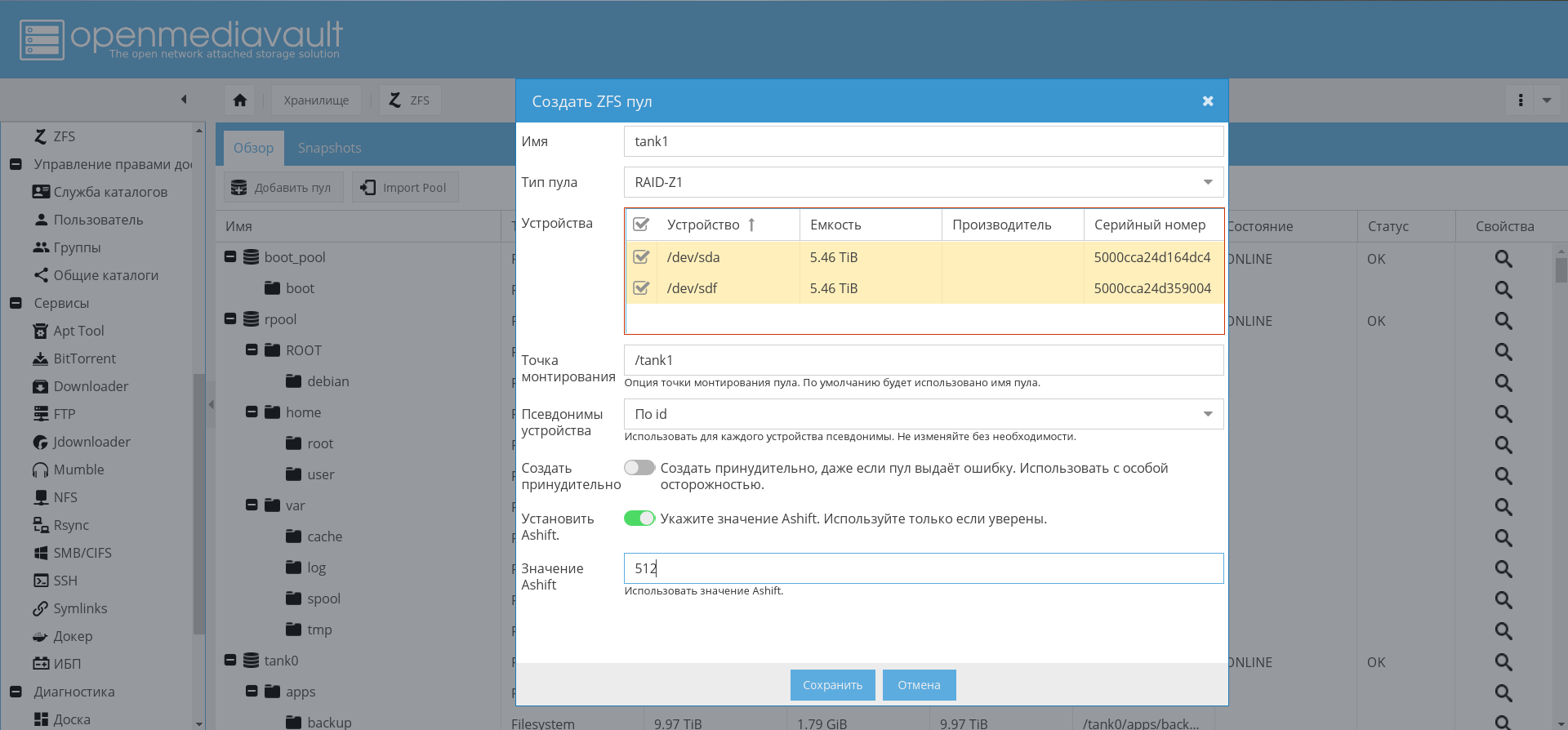

将来,在安装和配置OMV的情况下,可以通过GUI创建池:

在启动时启用池导入和自动挂载卷

为了保证启用自动挂接池,请运行以下命令:

rm /etc/zfs/zpool.cache systemctl enable zfs-import-scan.service systemctl enable zfs-mount.service systemctl enable zfs-import-cache.service

在此阶段,磁盘子系统的配置完成。

作业系统

第一步是安装和配置OMV,以便最终为NAS奠定某种基础。

安装OMV

OMV将作为deb软件包安装。 为此,可以使用官方说明 。

add_repo.sh脚本将OMV Arrakis存储库添加到add_repo.sh以便批处理系统可以看到该存储库。

add_repo.sh cat <<EOF >> /etc/apt/sources.list.d/openmediavault.list deb http://packages.openmediavault.org/public arrakis main

请注意,与原始版本相比,包含了合作伙伴存储库。

要安装和初始化,您必须运行以下命令。

用于安装OMV的命令。 ./add_repo.sh export LANG=C export DEBIAN_FRONTEND=noninteractive export APT_LISTCHANGES_FRONTEND=none apt-get update apt-get --allow-unauthenticated install openmediavault-keyring apt-get update apt-get --yes --auto-remove --show-upgraded \ --allow-downgrades --allow-change-held-packages \ --no-install-recommends \ --option Dpkg::Options::="--force-confdef" \ --option DPkg::Options::="--force-confold" \ install postfix openmediavault

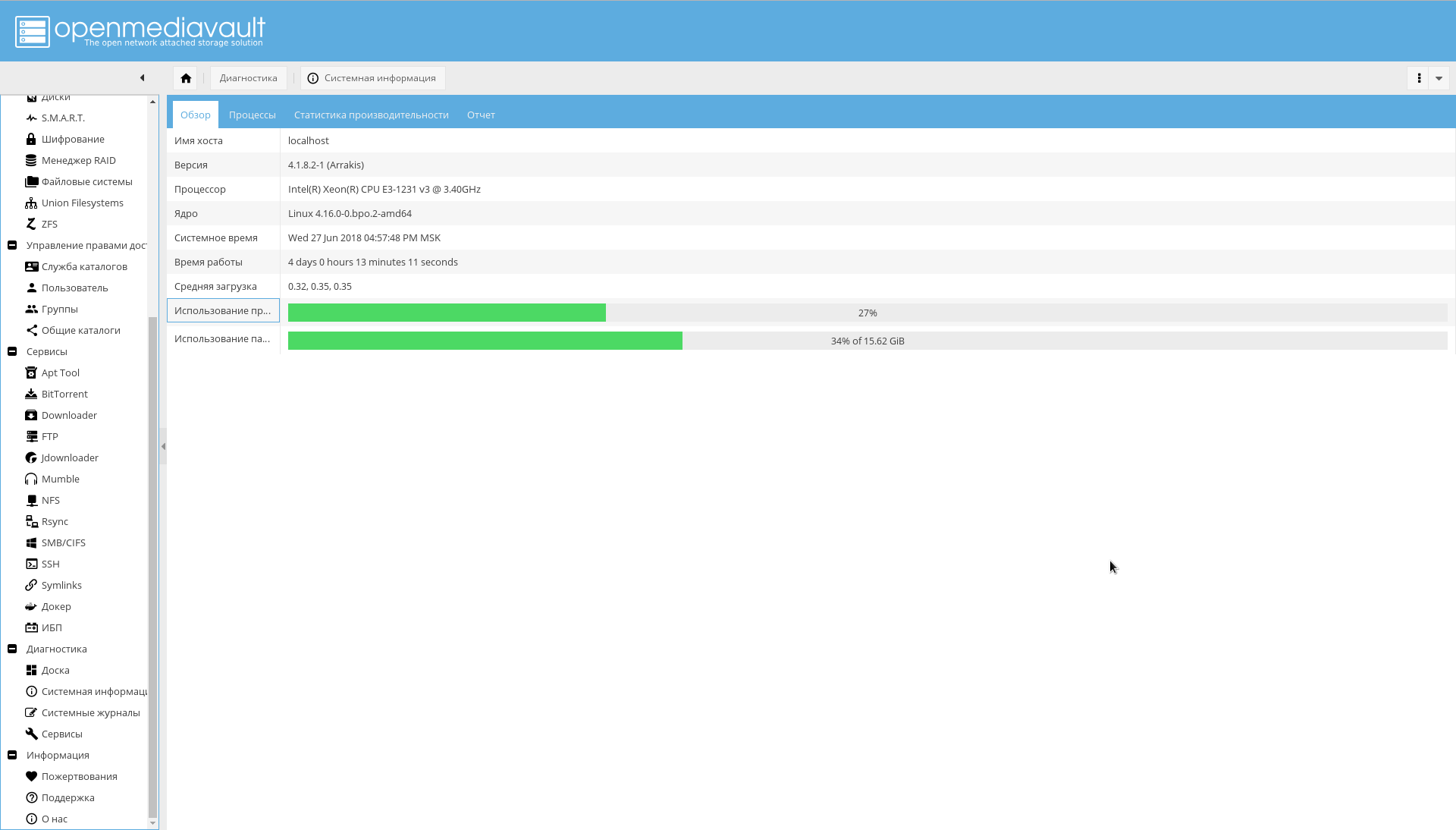

已安装OMV。 它使用自己的内核,安装后可能需要重新启动。

重新启动后,OpenMediaVault界面将在端口80上可用(通过IP地址转到NAS上的浏览器):

默认的用户名/密码为admin/openmediavault 。

配置OMV

此外,大多数配置将通过WEB-GUI。

建立安全连接

现在,您需要更改WEB管理员的密码并为NAS生成证书,以便将来使用HTTPS。

在“系统->常规设置-> Web管理员密码”选项卡上更改密码 。

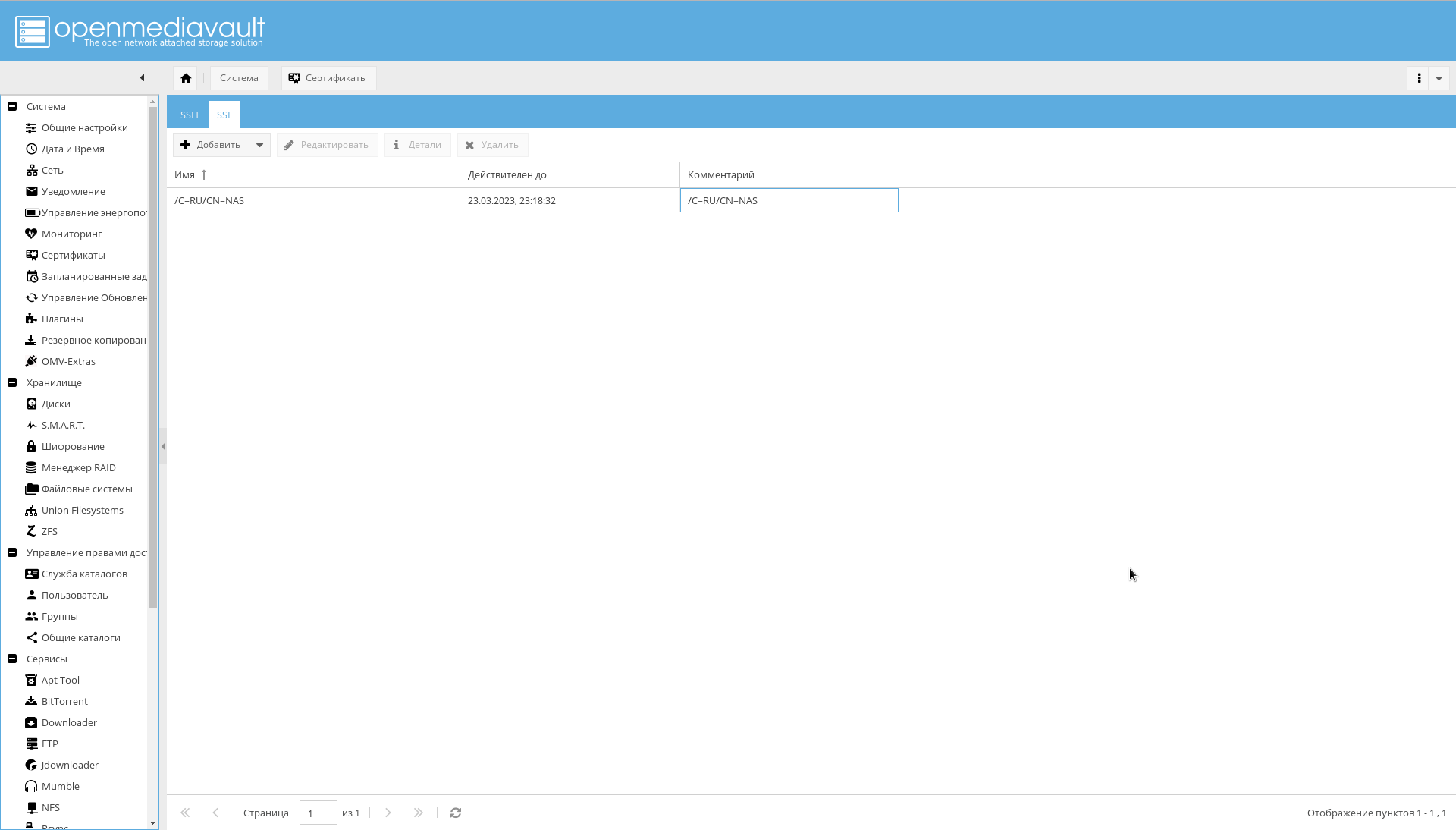

要在“系统->证书-> SSL”选项卡上生成证书,请选择“添加->创建” 。

创建的证书将在同一选项卡上可见:

创建证书后,在“系统->常规设置”选项卡上,启用“启用SSL / TLS”复选框。

配置完成之前,将需要证书。 最终版本将使用签名证书来联系OMV。

现在,您需要登录到OMV,端口443,或在浏览器中简单地将https://前缀分配给IP。

如果您成功登录,则在“系统->常规设置”选项卡上,需要启用“强制SSL / TLS”复选框。

将端口80和443更改为10080和10443 。

并尝试登录到以下地址: https://IP_NAS:10443 。

更改端口很重要,因为端口80和443将使用带有nginx-reverse-proxy的docker容器。

主要设定

必须首先完成的最低设置:

- 在“系统->日期和时间”选项卡上,检查时区值并设置NTP服务器。

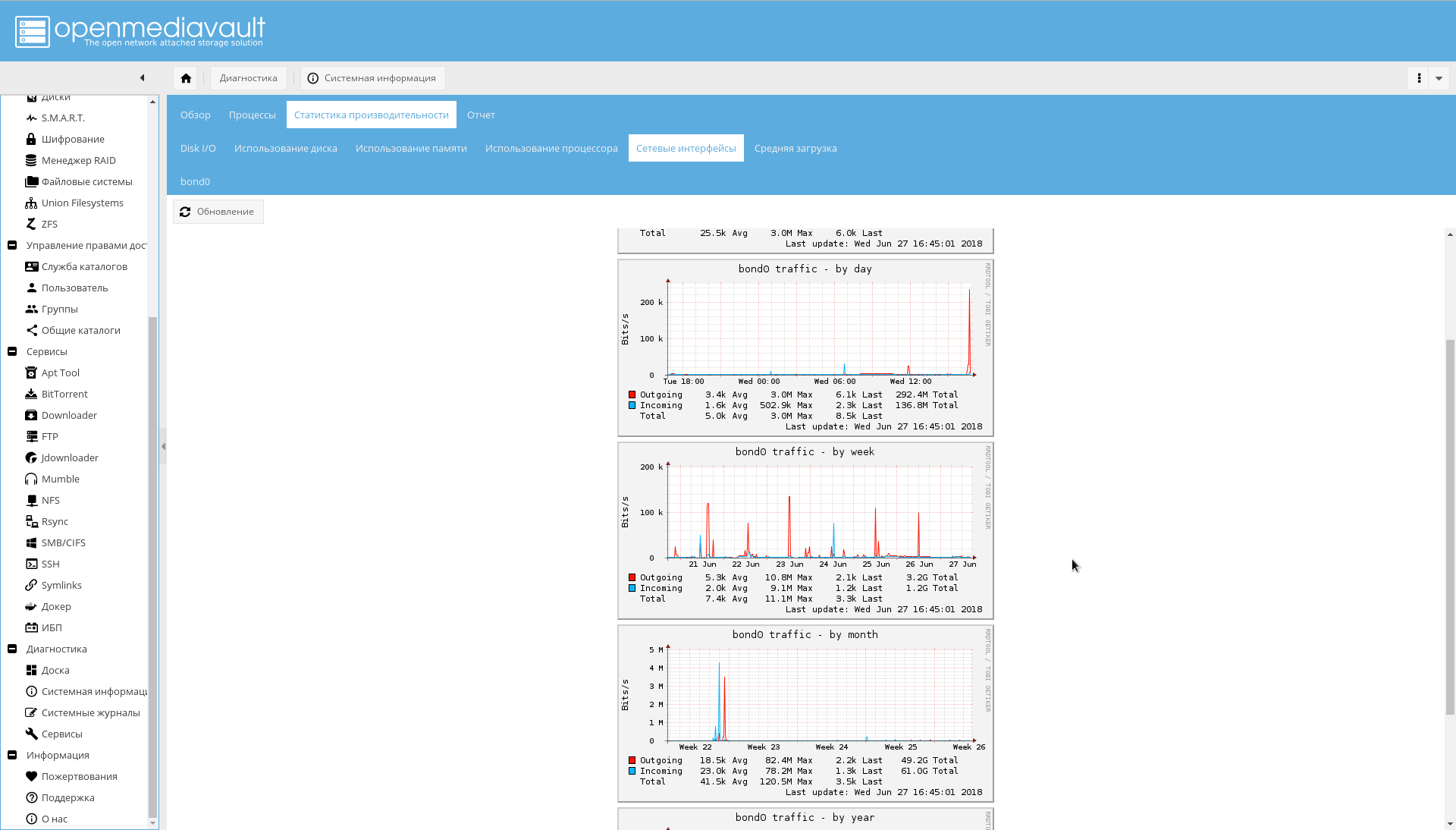

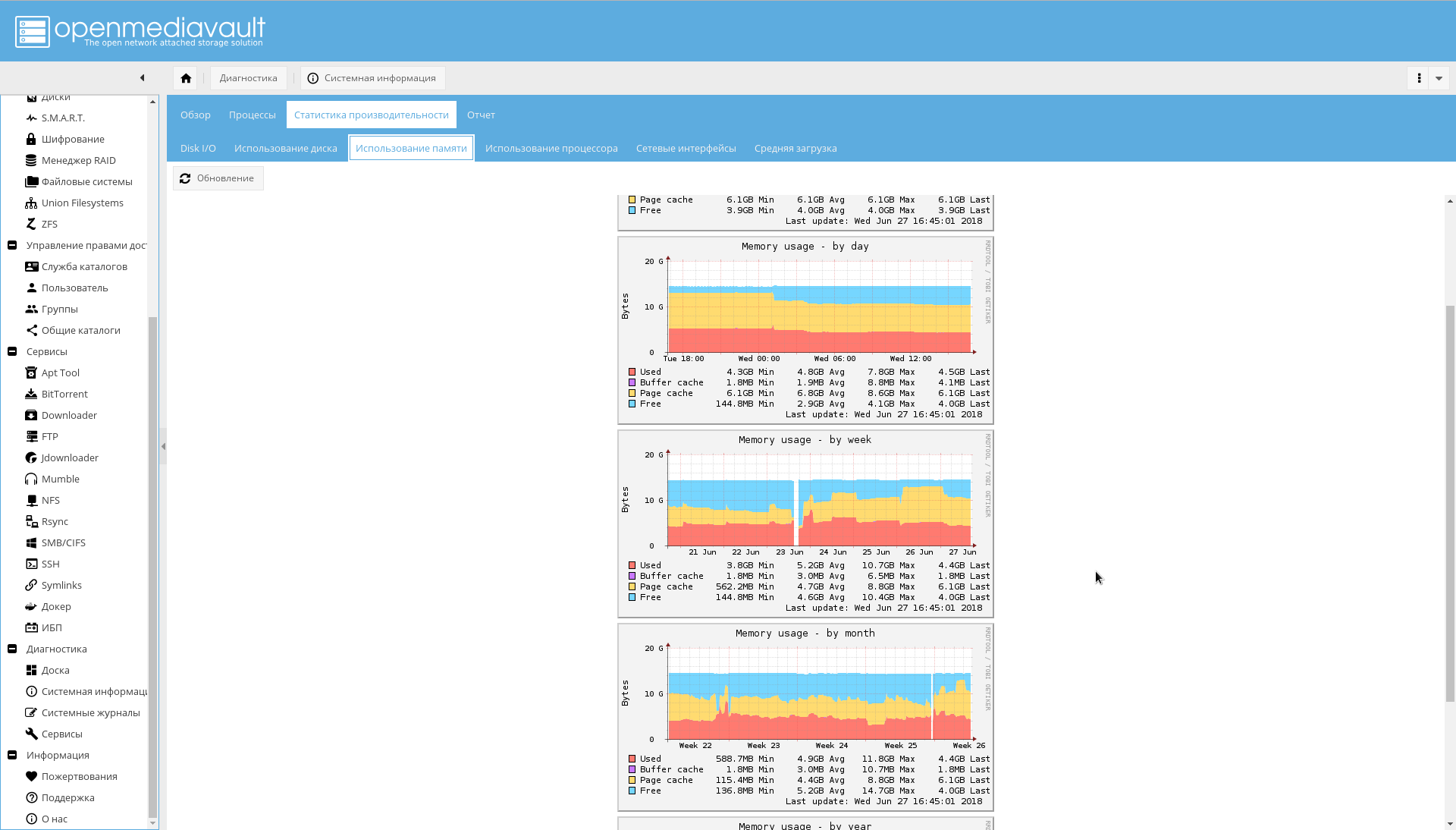

- 在选项卡“系统->监视”上,启用性能统计信息收集。

- 在“系统->能源管理”选项卡上,可能有必要关闭“监视”,这样OMV不会尝试控制风扇。

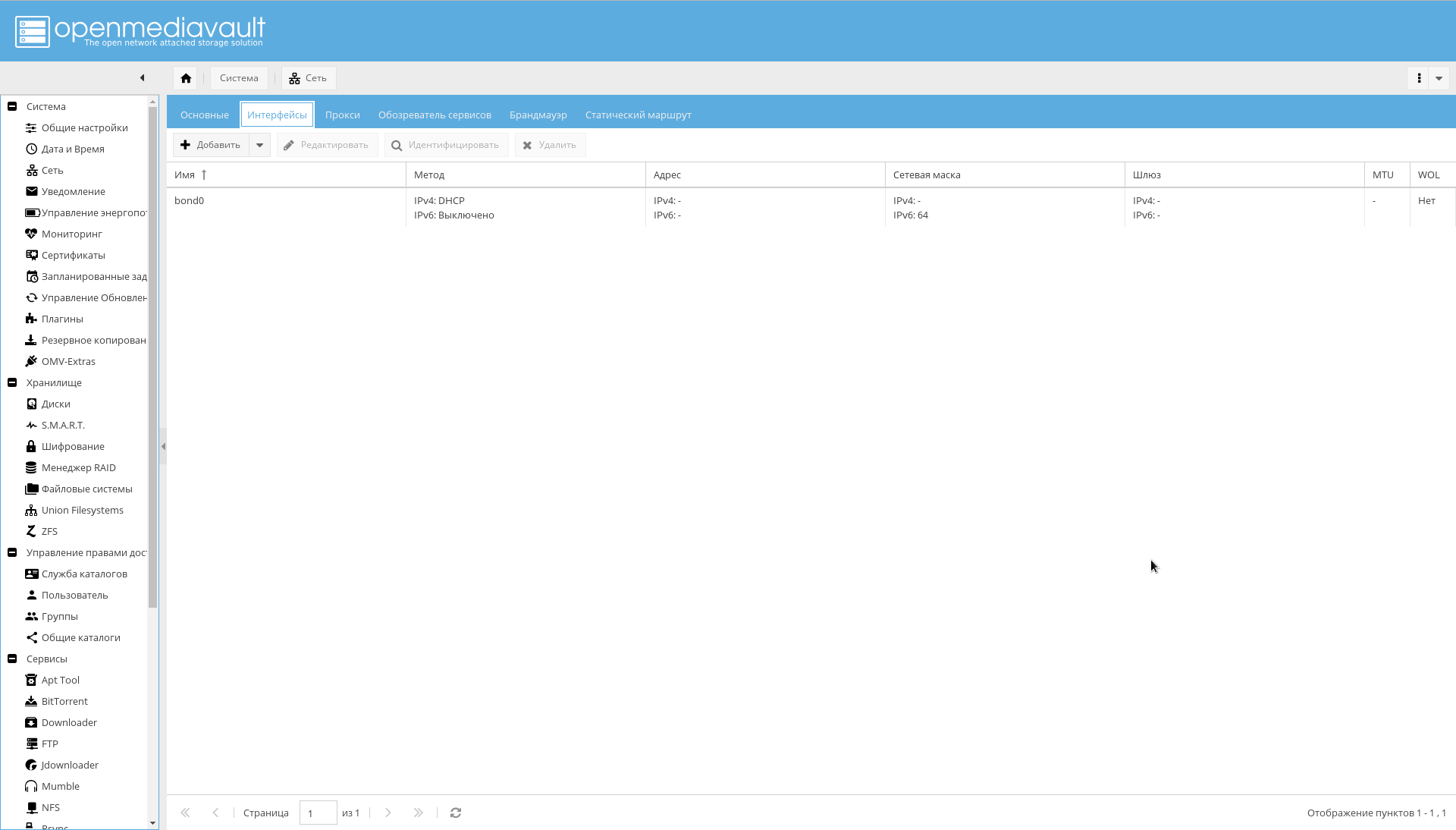

联播网

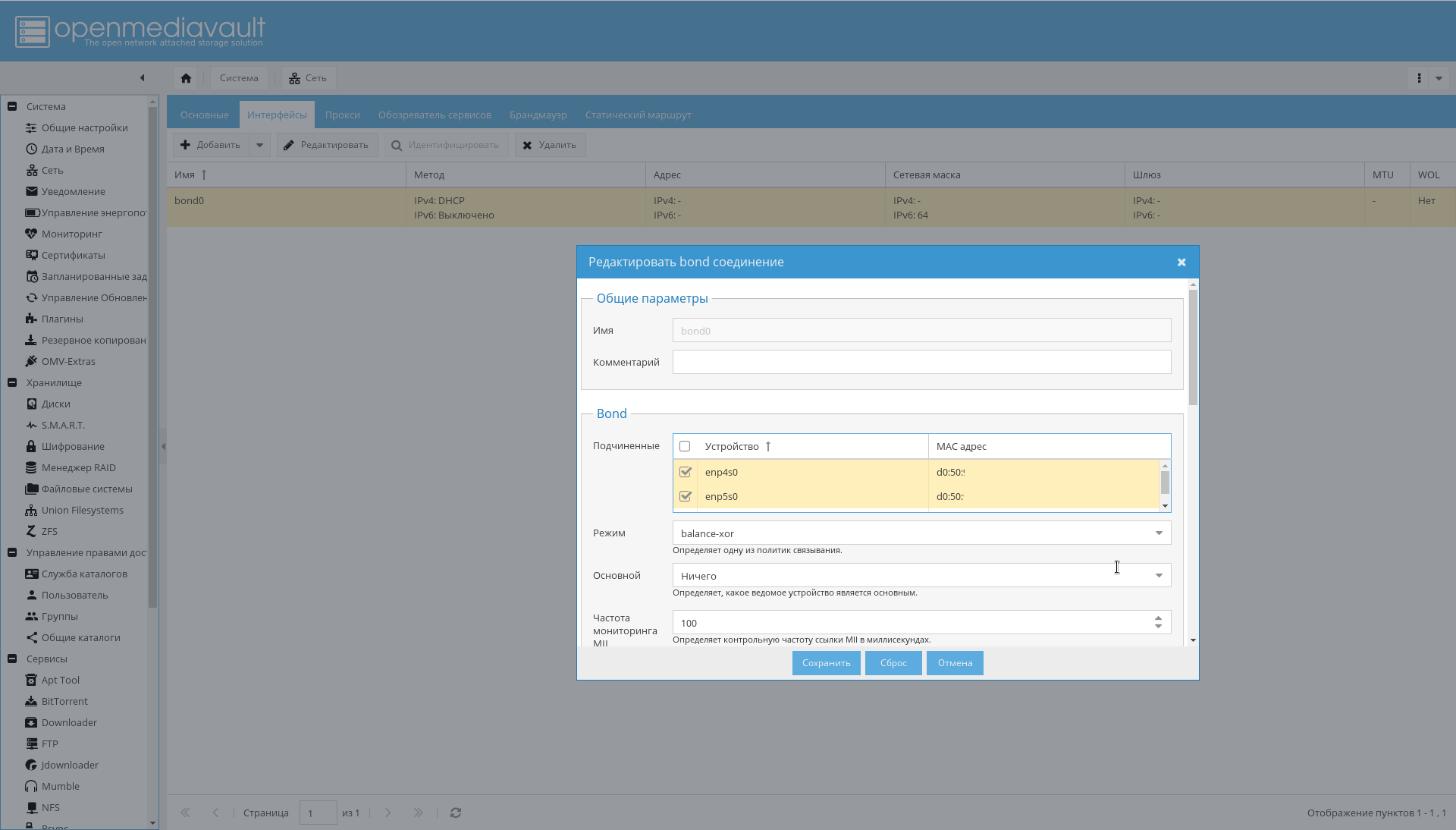

如果尚未连接第二个NAS网络接口,则将其连接到路由器。

然后:

- 在“系统->网络”选项卡上,将主机名设置为“ nas”(或您喜欢的任何名称)。

- 设置接口的绑定,如下图所示: “ System-> Network-> Interfaces-> Add-> Bond” 。

- 在选项卡“系统->网络->防火墙”上添加必要的防火墙规则。 首先,访问端口10443、10080、443、80、22的SSH以及接收/发送ICMP的权限就足够了。

结果,应该出现绑定中的接口,路由器会将其视为一个接口并为其分配一个IP地址:

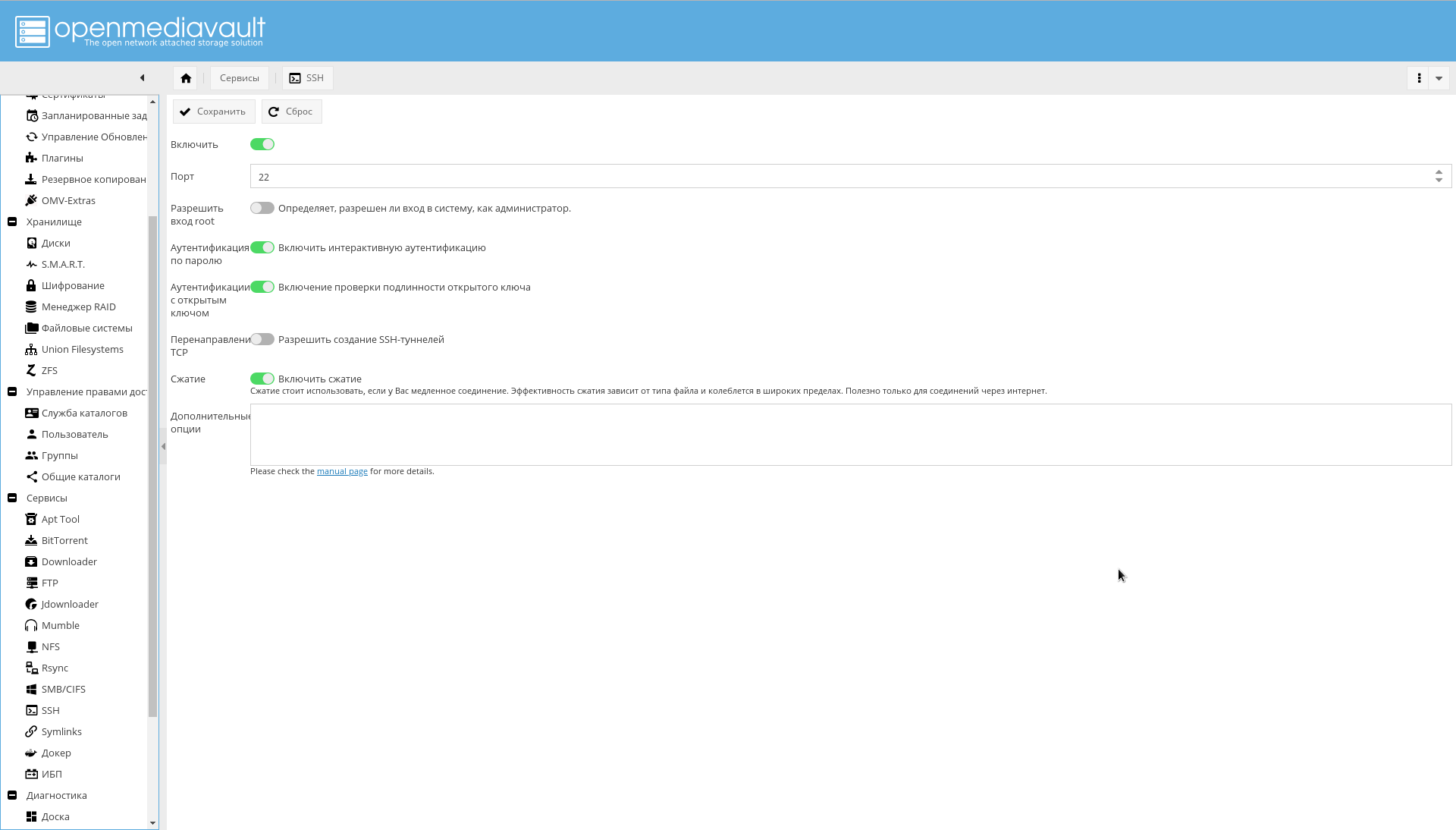

如果需要,可以从WEB GUI额外配置SSH:

储存库和模块

在“系统->更新管理->设置”选项卡上,打开“社区支持的更新 。 ”

首先,添加OMV Extras存储库 。

只需安装插件或软件包即可完成此操作,如论坛所示 。

在“系统->插件”页面上,您需要找到插件“ openmediavault-omvextrasorg”并进行安装。

结果,“ OMV-Extras”图标将出现在系统菜单中(可以在屏幕截图中看到)。

转到那里并启用以下存储库:

- OMV-Extras.org。 一个稳定的存储库,其中包含许多插件。

- OMV-Extras.org测试。 该存储库中的某些插件不在稳定的存储库中。

- Docker CE。 实际上,Docker。

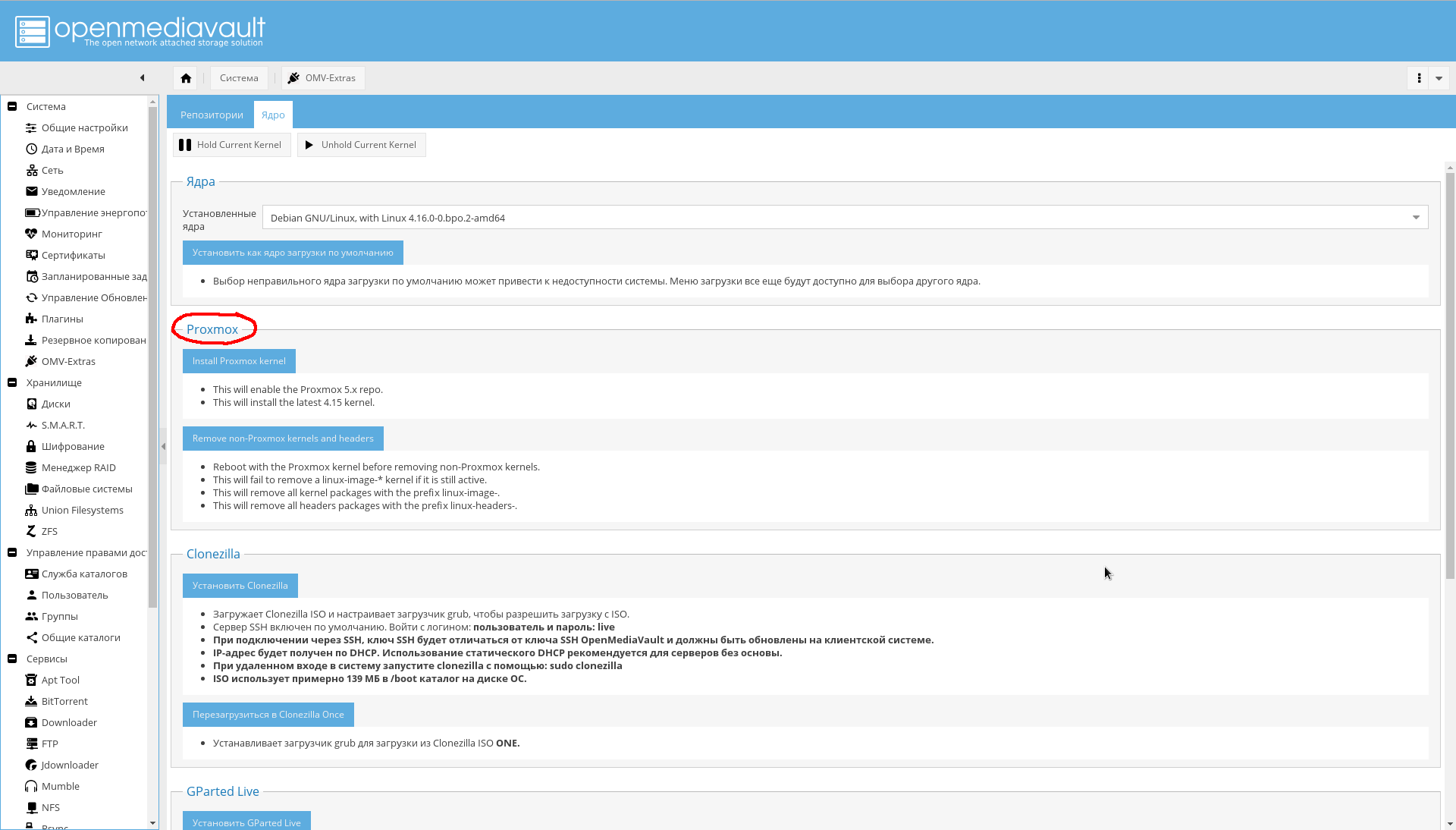

在“系统-> OMV Extras->内核”选项卡上,可以选择所需的内核,包括Proxmox的内核(我自己没有安装它,因为我还不需要安装,因此不推荐使用):

安装必要的插件( 用斜体 显示的绝对必要的粗体 -可选,我没有安装):

插件列表。- openmediavault-apttool。 用于批处理系统的最低GUI。 添加“服务-> Apttool” 。

- openmediavault-anacron。 添加了使用异步调度程序从GUI工作的功能。 添加“系统-> Anacron” 。

- openmediavault备份。 在存储中提供备份系统。 添加页面“系统->备份” 。

- openmediavault-diskstats。 需要收集有关磁盘性能的统计信息。

- openmediavault-dnsmasq 。 允许您在NAS上增加DNS服务器和DHCP。 因为我是在路由器上进行的,所以不需要它。

- openmediavault-docker-gui 。 Docker容器管理界面。 添加“服务-> Docker” 。

- openmediavault-ldap 。 支持通过LDAP进行身份验证。 添加“权限管理->目录服务” 。

- openmediavault-letsencrypt 。 从GUI支持“让我们加密”。 不需要它,因为它使用嵌入在nginx-reverse-proxy容器中。

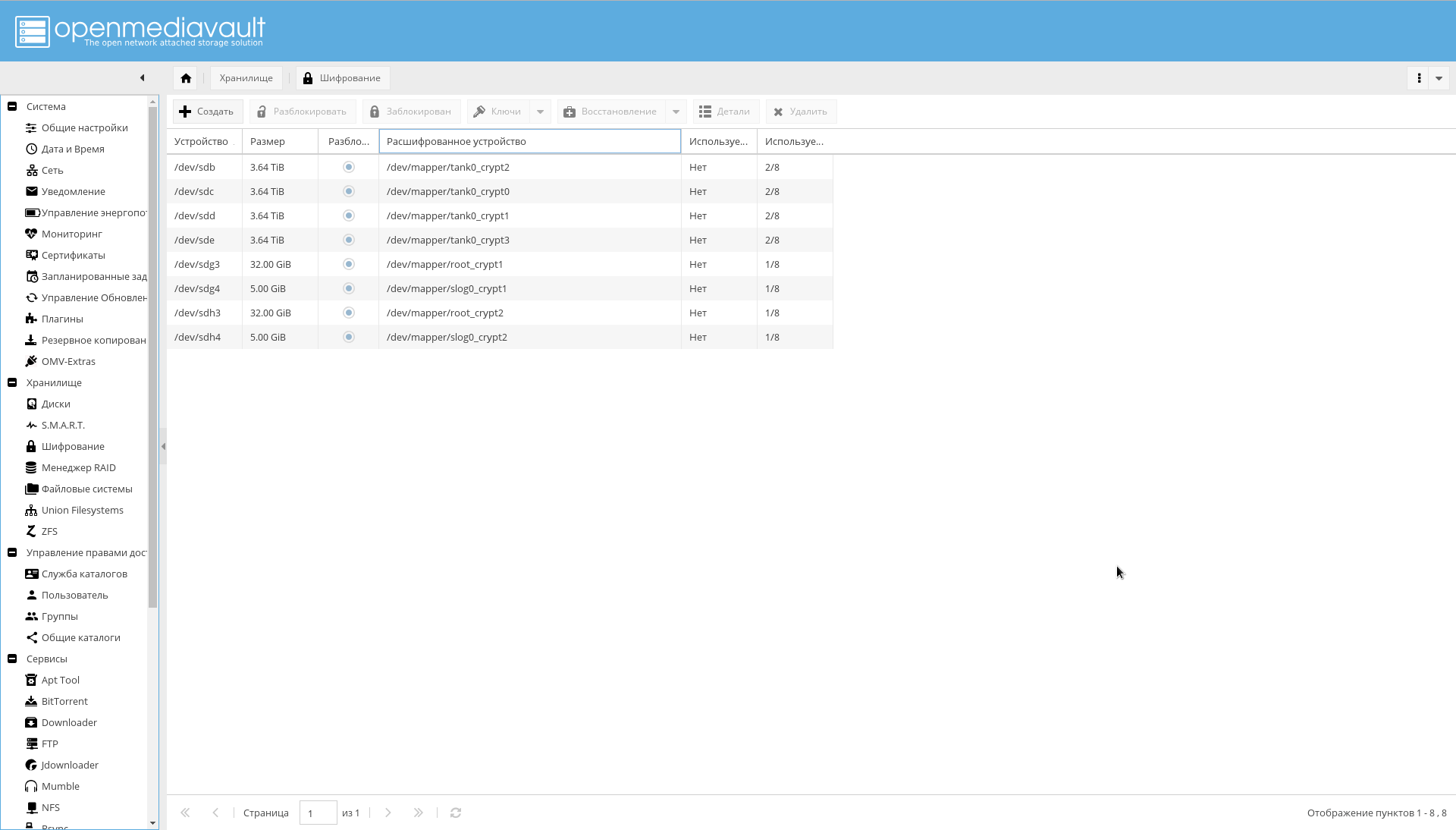

- openmediavault-luksencryption 。 LUKS加密支持。 必须在OMV界面中显示加密的磁盘。 添加“存储->加密” 。

- openmediavault-nut 。 UPS支持。 添加“服务-> UPS” 。

- openmediavault-omvextrasorg 。 OMV Extras应该已经安装。

- openmediavault-resetperms。 允许您重置权限和重置共享目录上的访问控制列表。 添加“访问控制->常规目录->重置权限” 。

- openmediavault-route。 路由管理的有用插件。 添加“系统->网络->静态路由” 。

- openmediavault符号链接。 提供创建符号链接的功能。 添加页面“服务->符号链接” 。

- openmediavault-union文件系统。 UnionFS支持。 尽管Docker使用ZFS作为后端,但将来可能会派上用场。 添加“存储->联合文件系统” 。

- openmediavault-virtualbox 。 它可用于在GUI中嵌入虚拟机管理功能。

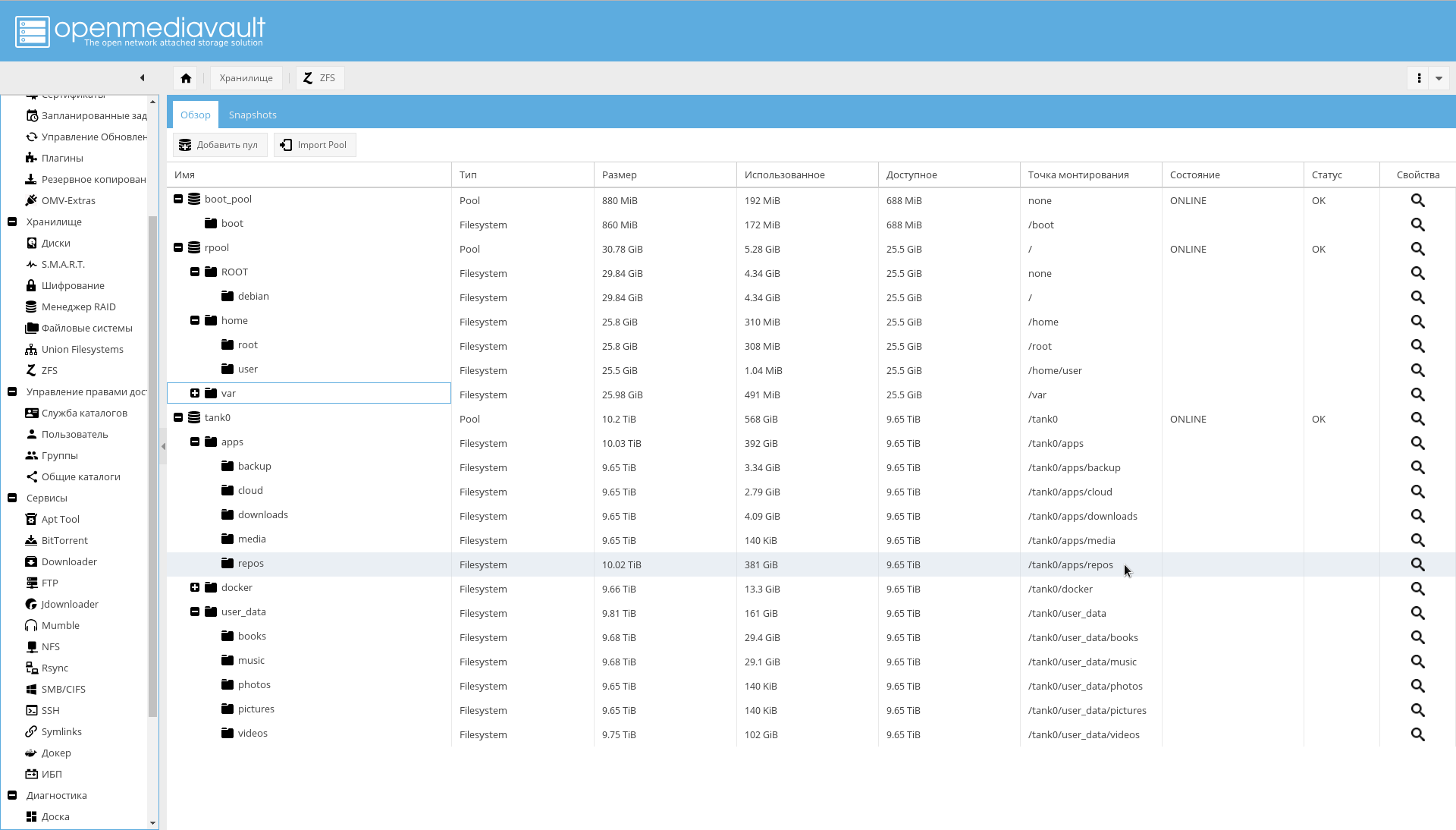

- openmediavault-zfs 。 该插件将ZFS支持添加到OpenMediaVault。 安装后,将出现“存储-> ZFS”页面。

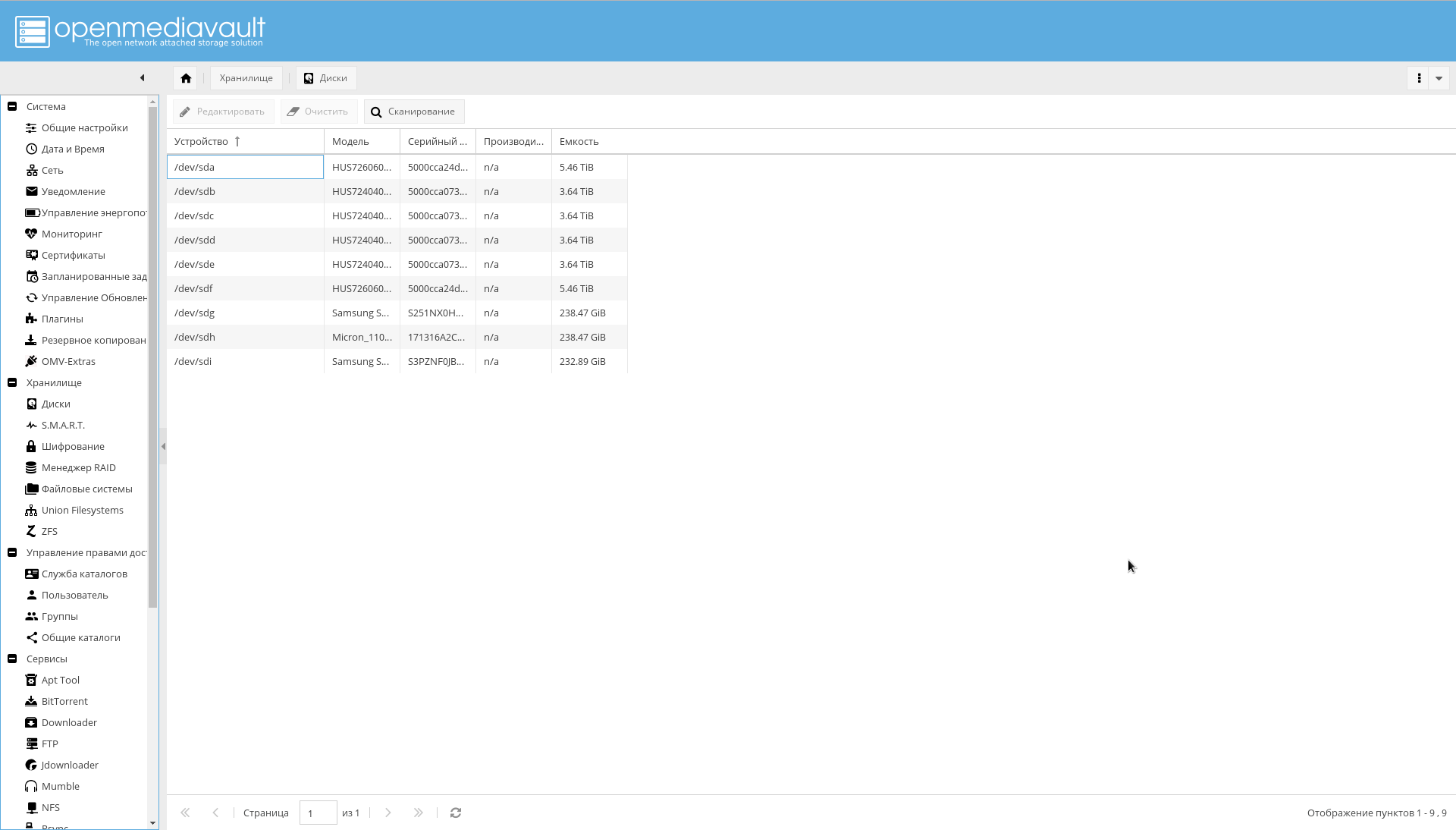

磁碟

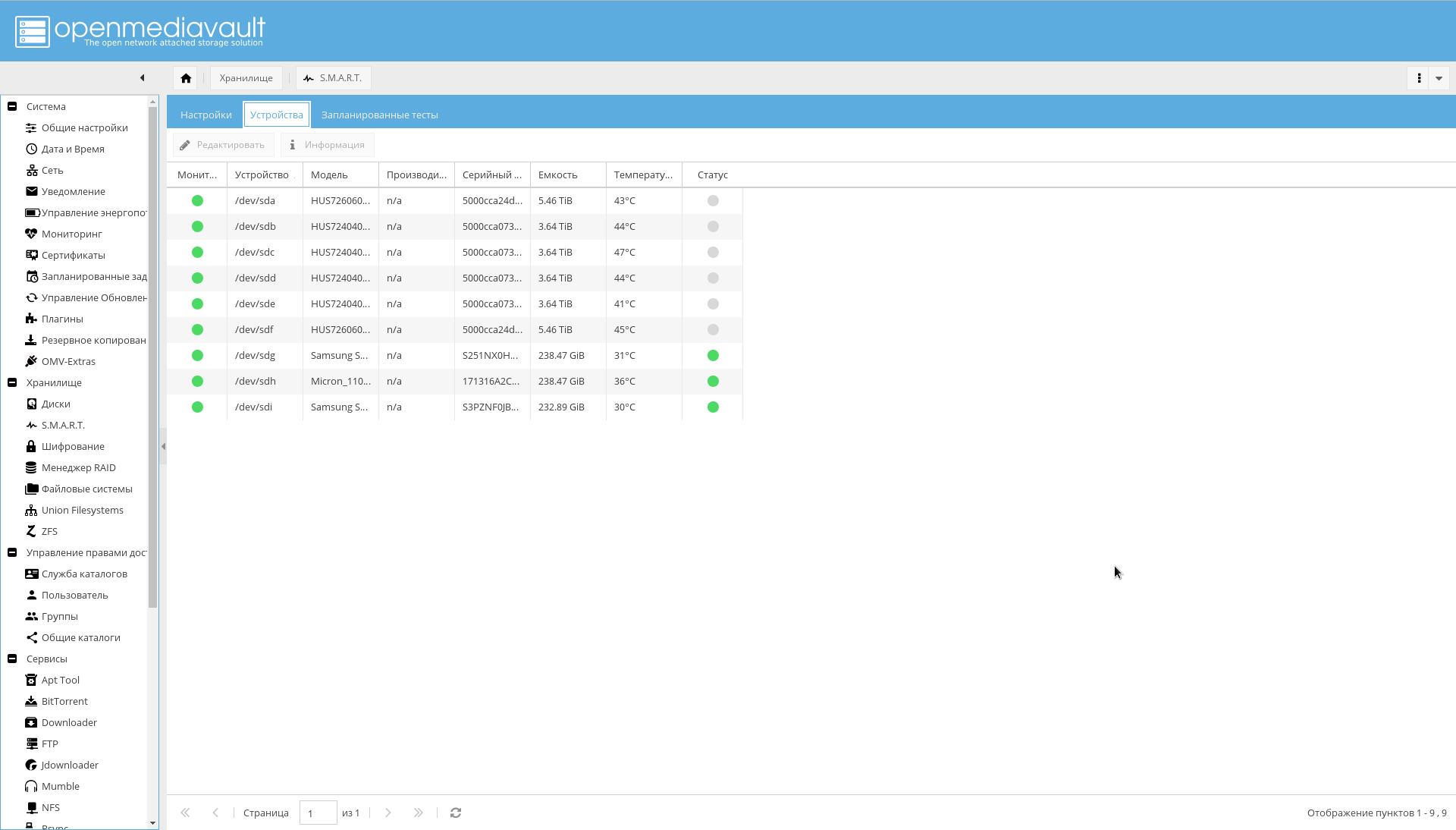

系统中的所有磁盘都必须是可见的OMV。 通过查看“存储->磁盘”标签来确保这一点。 如果不是所有光盘都可见,请运行扫描。

在那里,必须在所有HDD上启用写缓存(通过单击列表中的磁盘并单击“编辑”按钮)。

确保在“存储->加密”选项卡上可见所有加密部分:

现在是时候配置SMART了,这表示增加可靠性的一种方式:

- 转到“存储-> SMART->设置”选项卡 。 打开SMART。

- 在同一位置,选择磁盘的温度水平值(临界,通常为60 C, 磁盘的最佳温度范围为 15-45 C)。

- 转到“存储-> SMART->设备”标签 。 打开每个驱动器的监视。

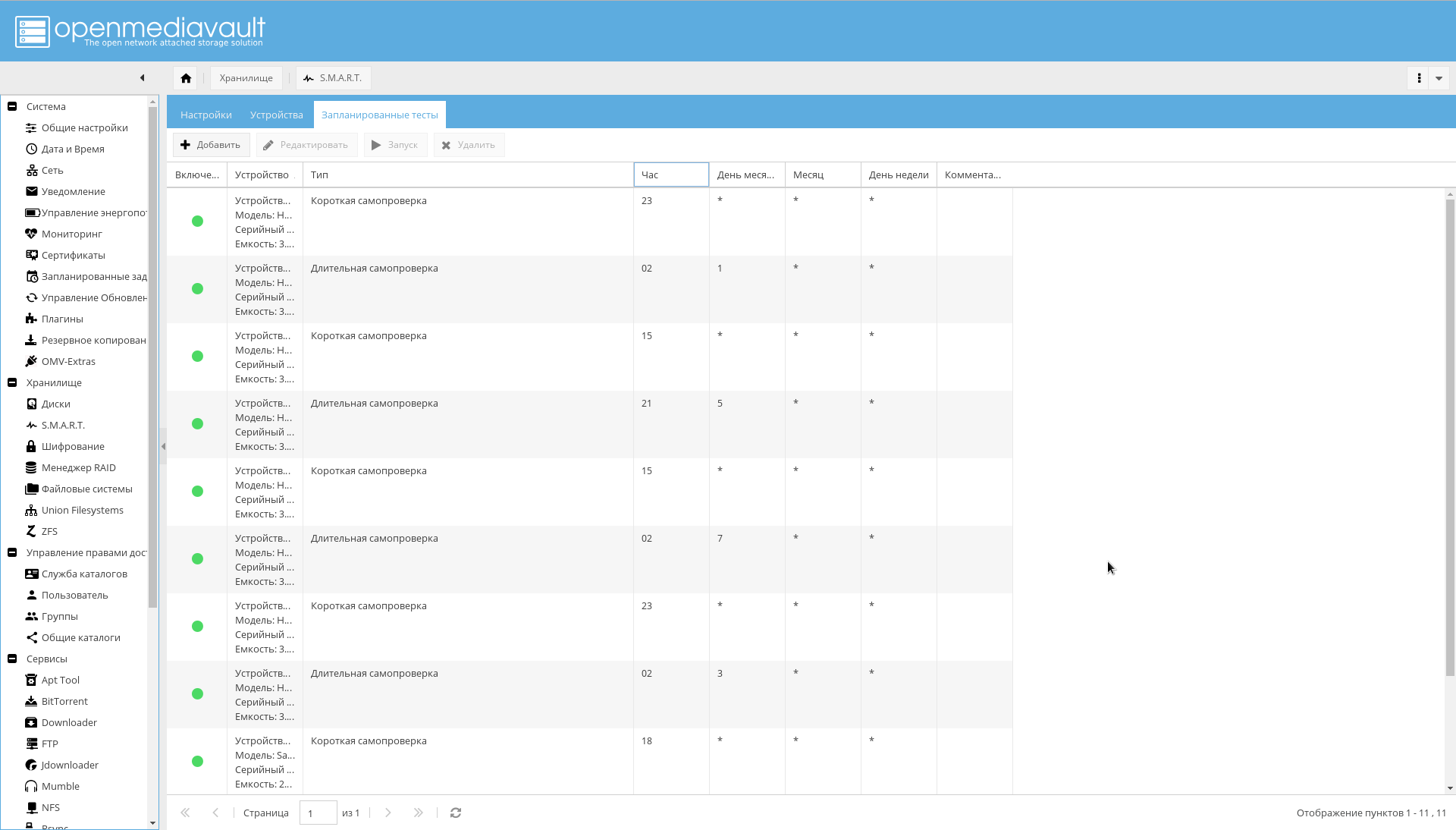

- 转到“存储-> SMART->计划的测试”选项卡 。 每天为每个磁盘添加一次简短的自测,并每月添加一次长时间的自测。 而且,自检时间不会重叠。

这样,就可以认为磁盘配置已完成。

文件系统和共享目录

您必须为预定义目录创建文件系统。

这可以从控制台或从OMV WEB界面(存储- > ZFS->选择池tank0->添加按钮->文件系统 )完成。

用于创建FS的命令。 zfs create -o utf8only=on -o normalization=formD -p tank0/user_data/books zfs create -o utf8only=on -o normalization=formD -p tank0/user_data/music zfs create -o utf8only=on -o normalization=formD -p tank0/user_data/pictures zfs create -o utf8only=on -o normalization=formD -p tank0/user_data/downloads zfs create -o compression=off -o utf8only=on -o normalization=formD -p tank0/user_data/videos

结果应为以下目录结构:

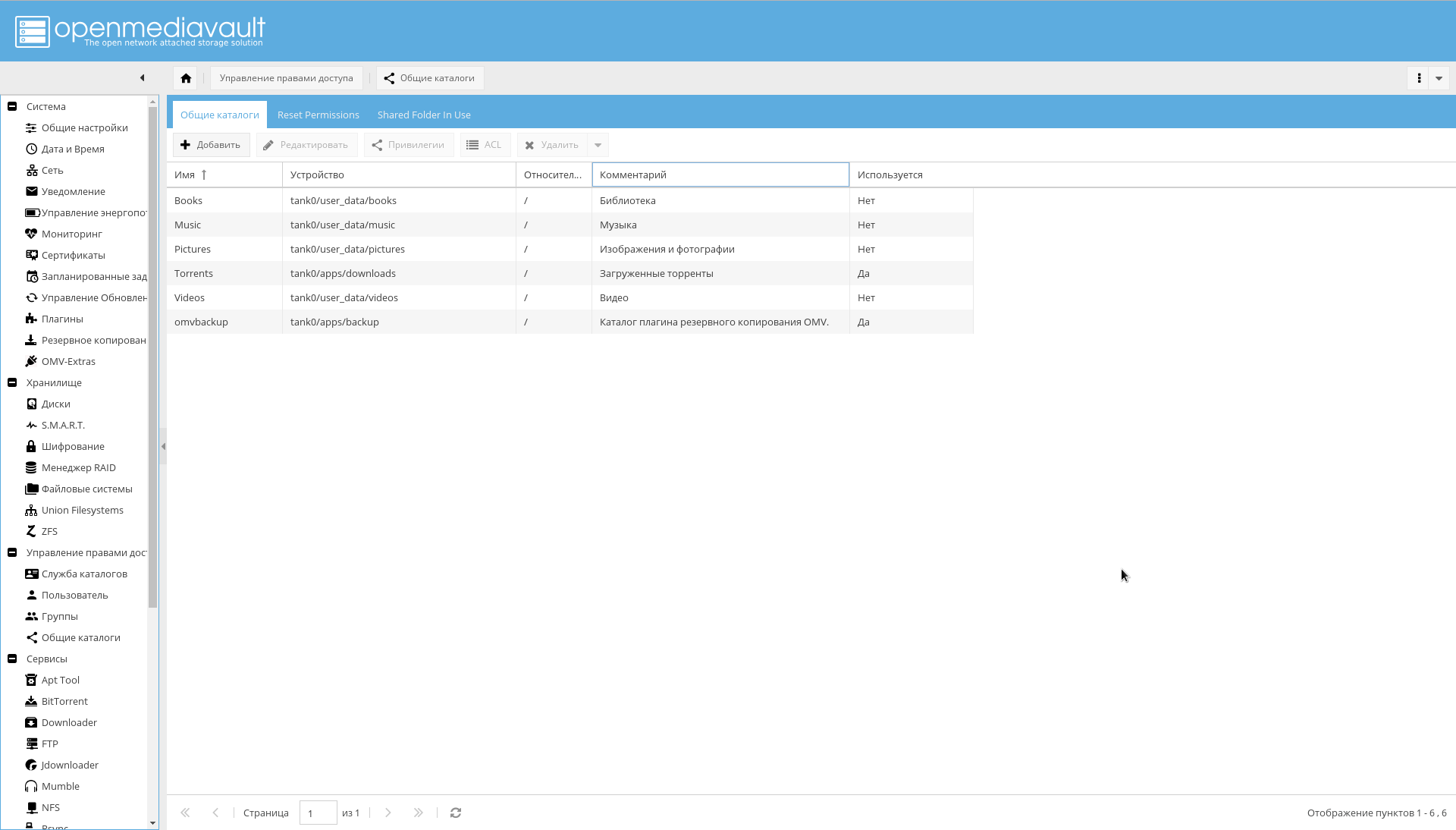

之后,在“管理访问权限->常规目录->添加”页面上将创建的FS添加为共享目录。

请注意, “设备”参数等于在ZFS中创建的文件系统的路径 ,所有目录的“路径”参数均为“ /”。

后备

备份由两个工具完成:

如果您使用插件,则很可能会收到错误消息:

lsblk: /dev/block/0:22: not a block device

如OMV开发人员在此“非常非标准配置”中指出的那样,为了对其进行修复,可以拒绝插件并以zfs send/receive的形式使用ZFS工具。

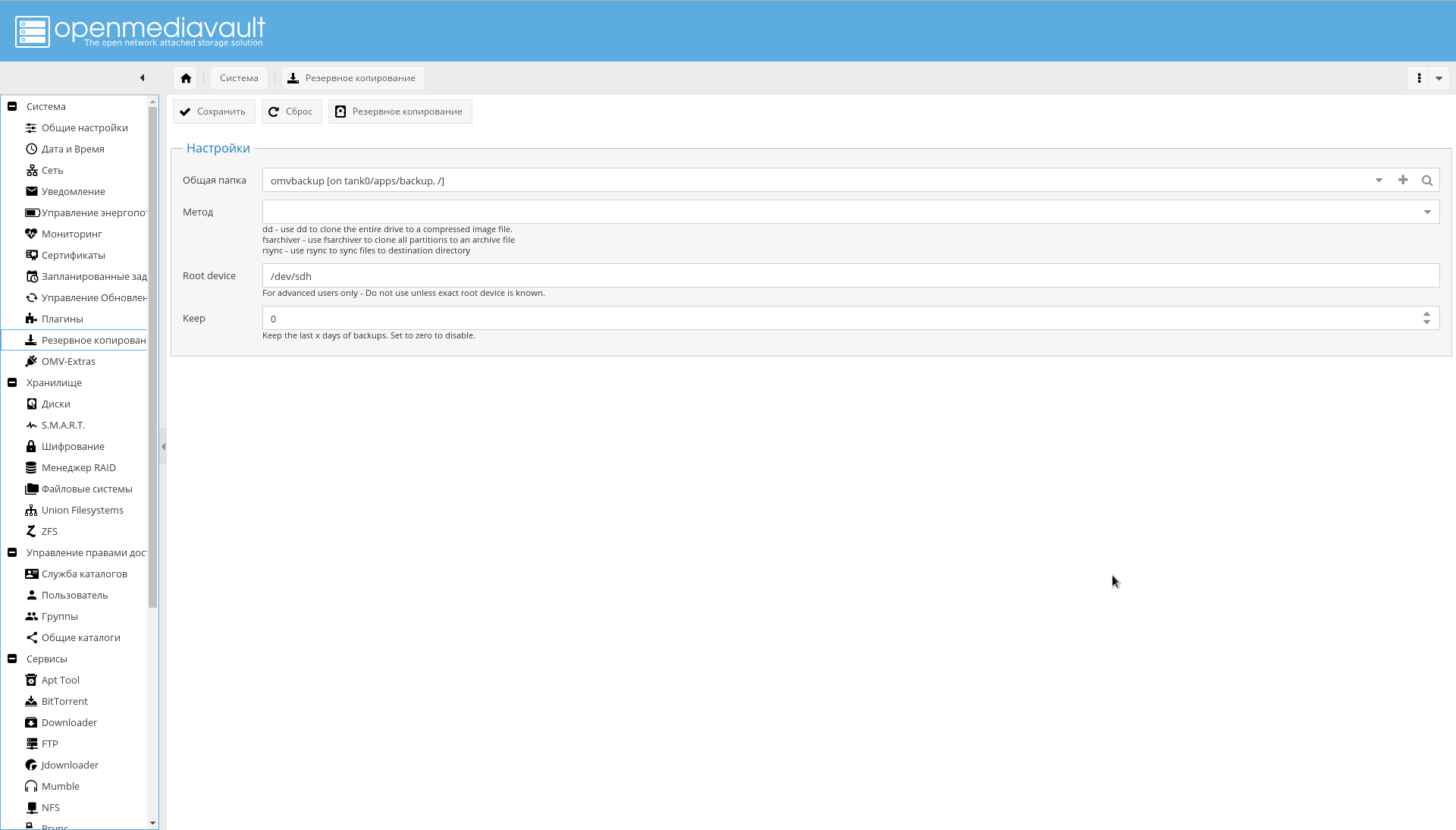

或者,以从中进行下载的物理设备的形式显式指定参数“ Root device”。

对于我来说,使用该插件并从该界面备份操作系统更为方便,而不是使用zfs send来构建自己的东西,因此我更喜欢第二种选择。

要进行备份,请首先通过ZFS创建tank0/apps/backup文件系统,然后在“系统->备份”菜单中,在“公共文件夹”参数字段中单击“ +”,然后将创建的设备添加为目标设备,然后将“路径”字段添加设置为“ /”。

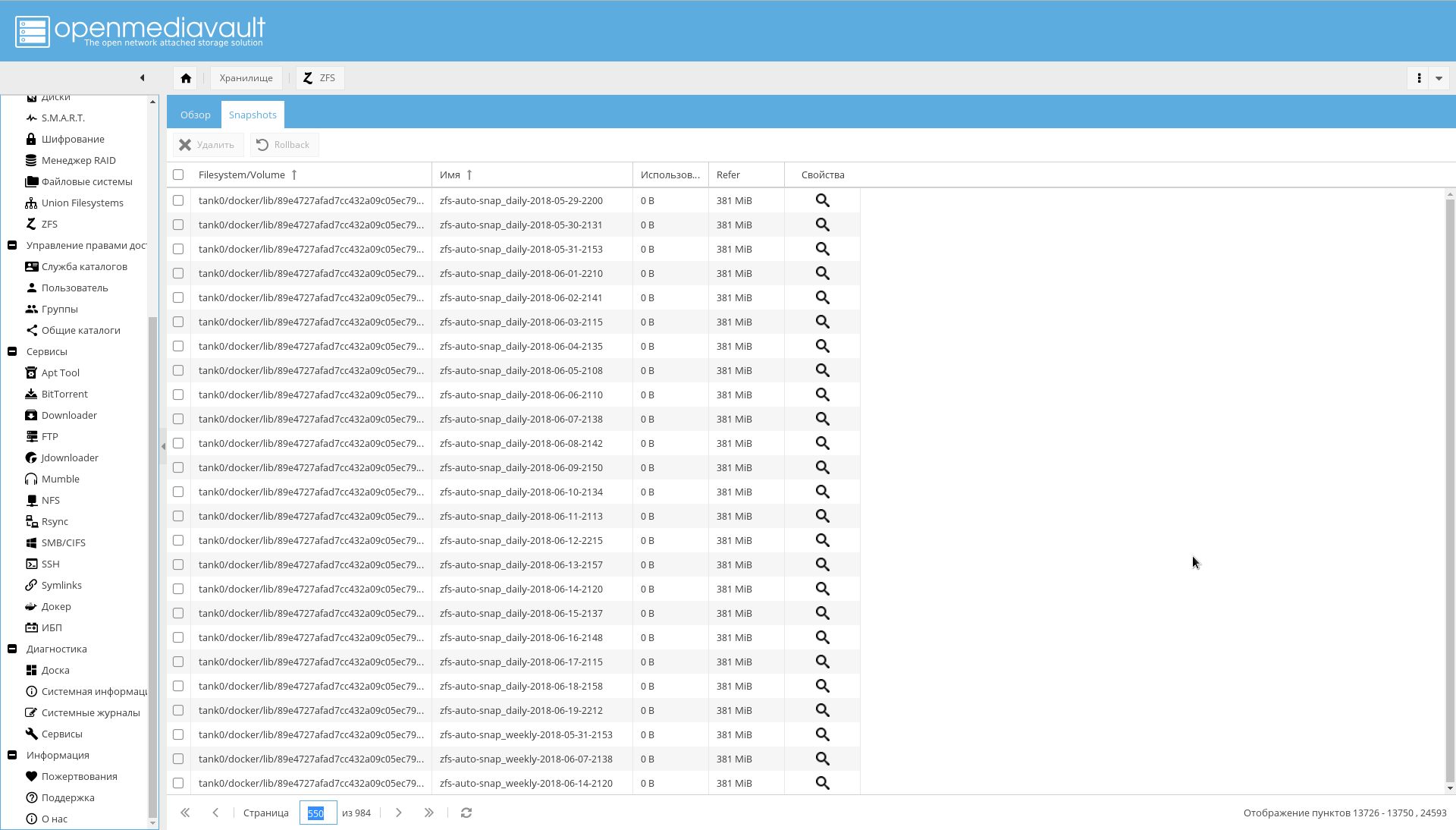

zfs-auto-snapshot也存在问题。 如果未配置,她将在一年中的每个小时,每天,每周,每月拍摄照片。

结果是屏幕截图中的内容:

如果您已经遇到过这种情况,请运行以下代码来删除自动快照:

zfs list -t snapshot -o name -S creation | grep "@zfs-auto-snap" | tail -n +1500 | xargs -n 1 zfs destroy -vr

然后将zfs-auto-snapshot配置为在cron中运行。

首先,如果不需要每小时拍照,只需删除/etc/cron.hourly/zfs-auto-snapshot 。

电邮通知

电子邮件通知已被指示为实现可靠性的手段之一。

因此,现在您需要配置电子邮件通知。

为此,请在其中一台公用服务器上注册一个框(或者,如果确实有理由,请自行配置SMTP服务器)。

然后,您需要转到“系统->通知”页面并输入:

- SMTP服务器地址。

- SMTP服务器端口。

- 用户名

- 发件人地址(通常地址的第一部分与名称匹配)。

- 用户密码

- 在“收件人”字段中,NAS将向其发送通知的通常地址。

强烈建议启用SSL / TLS。

屏幕快照显示了Yandex的示例设置:

NAS外部的网络设置

IP地址

我使用白色的静态IP地址,每月费用加上100卢布。 如果您不想付款,并且您的地址是动态的,但是对于NAT则不是,则可以通过所选服务的API调整外部DNS记录。

但是,应该记住,非NAT地址可能突然变成NAT地址:通常,提供程序不提供任何保证。

路由器

作为路由器,我有一个Mikrotik RouterBoard ,类似于下面的图片。

路由器需要三件事:

- 配置NAS的静态地址。 对于我来说,地址是通过DHCP发出的,我需要确保具有特定MAC地址的适配器始终获得相同的IP地址。 在RouterOS中,这是通过“ IP-> DHCP服务器”选项卡上的“使静态”按钮完成的。

- 配置DNS服务器,以便为名称“ nas”以及以“ .nas”和“ .NAS.cloudns.cc”结尾的名称(其中“ NAS”是ClouDNS或类似服务上的区域)提供IP系统。 下面的屏幕快照显示了在RouterOS中执行此操作的位置。 就我而言,这是通过将名称与正则表达式匹配来实现的:“

^.*\.nas$|^nas$|^.*\.NAS.cloudns.cc$ ” - 配置端口转发。 在RouterOS中,这是在“ IP->防火墙”选项卡上完成的 ,在此不再赘述。

域名解析

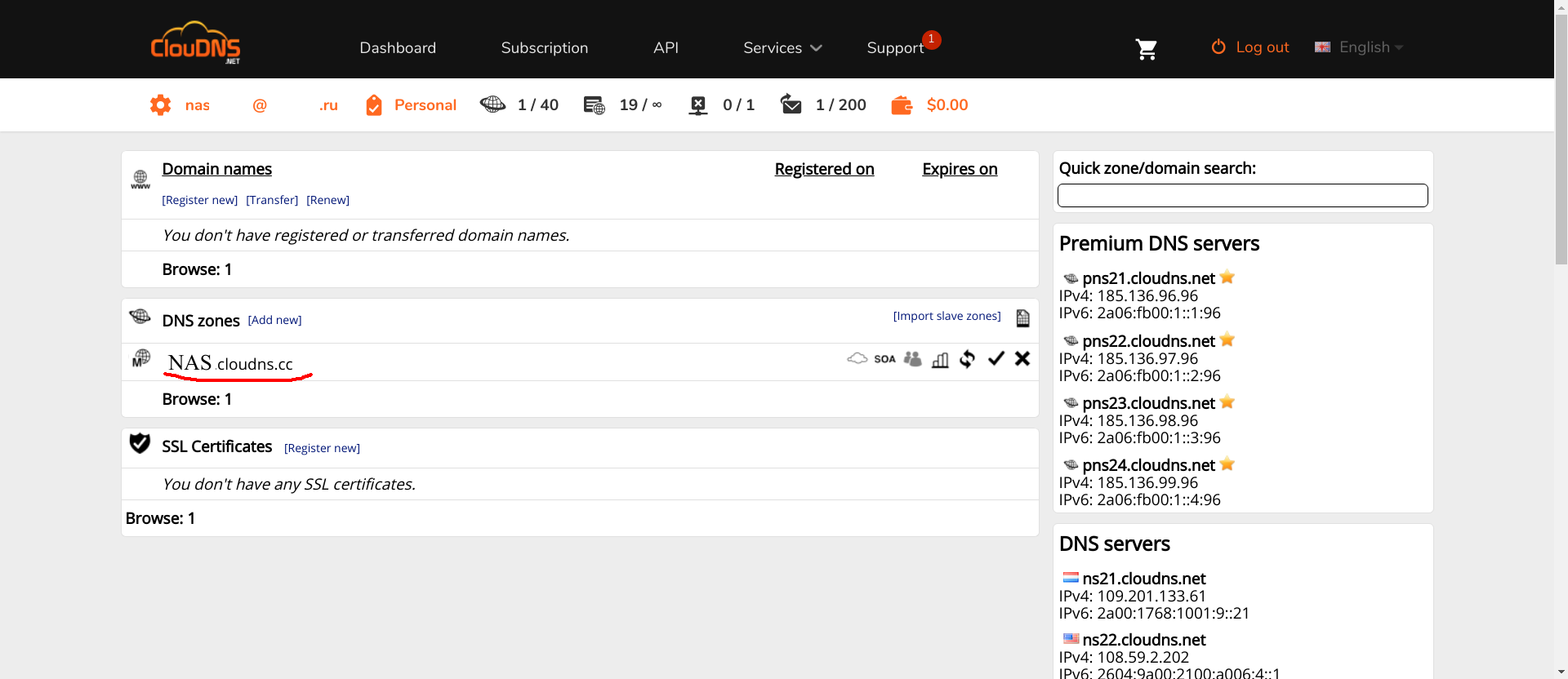

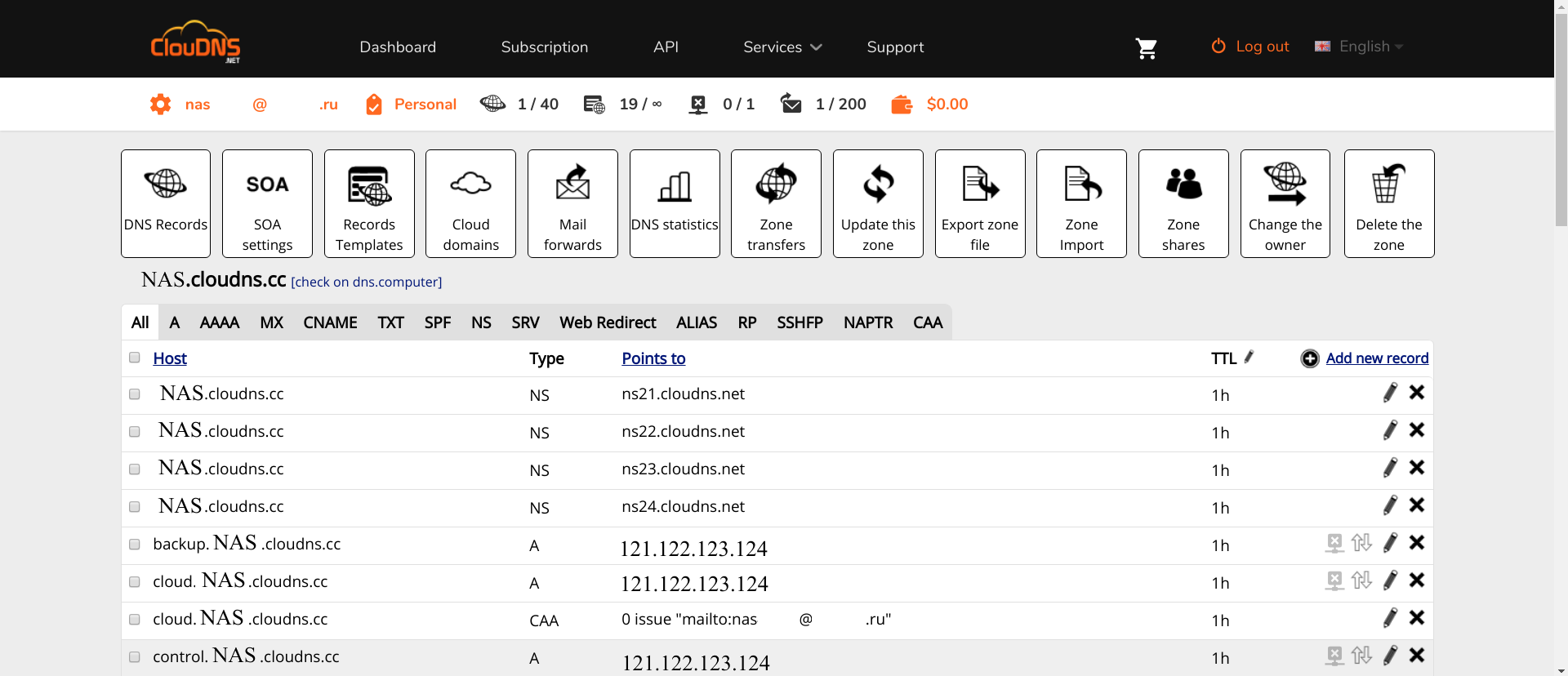

CLouDNS很简单。 创建一个帐户,确认。 NS记录将已经向您注册。 接下来,需要最少的设置。

首先,您需要创建必要的区域(当然,在屏幕快照中以红色突出显示的名称为NAS的区域就是您应该使用其他名称创建的区域)。

其次,在此区域中,您应该注册以下A记录 :

- nas , www , omv , control和一个空名称 。 访问OMV界面。

- ldap 。 PhpLdapAdmin界面

- ssp 。 修改用户密码的界面。

- 测试 。 测试服务器。

其余域名将在添加服务时添加。

单击区域,然后单击“添加新记录” ,选择A型,然后输入NAS所在的路由器的区域名称和IP地址。

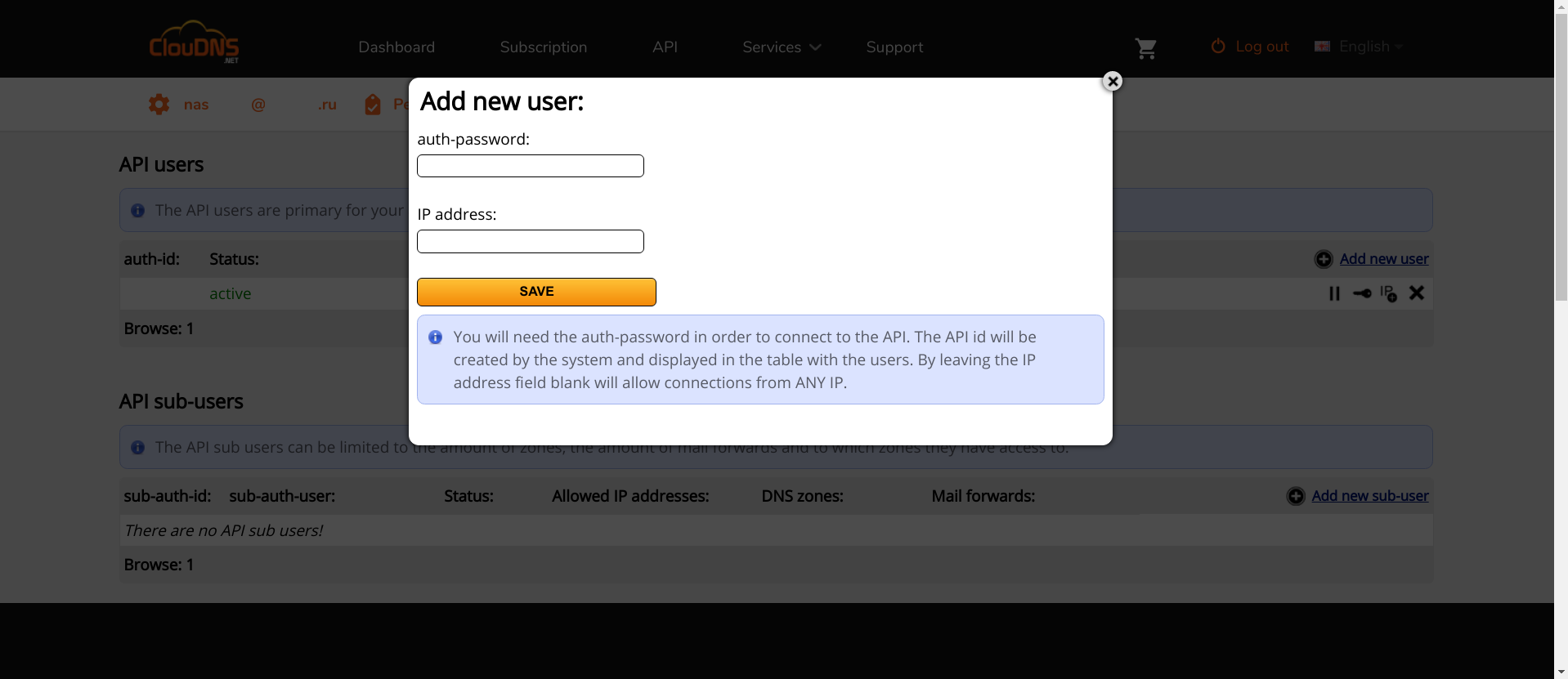

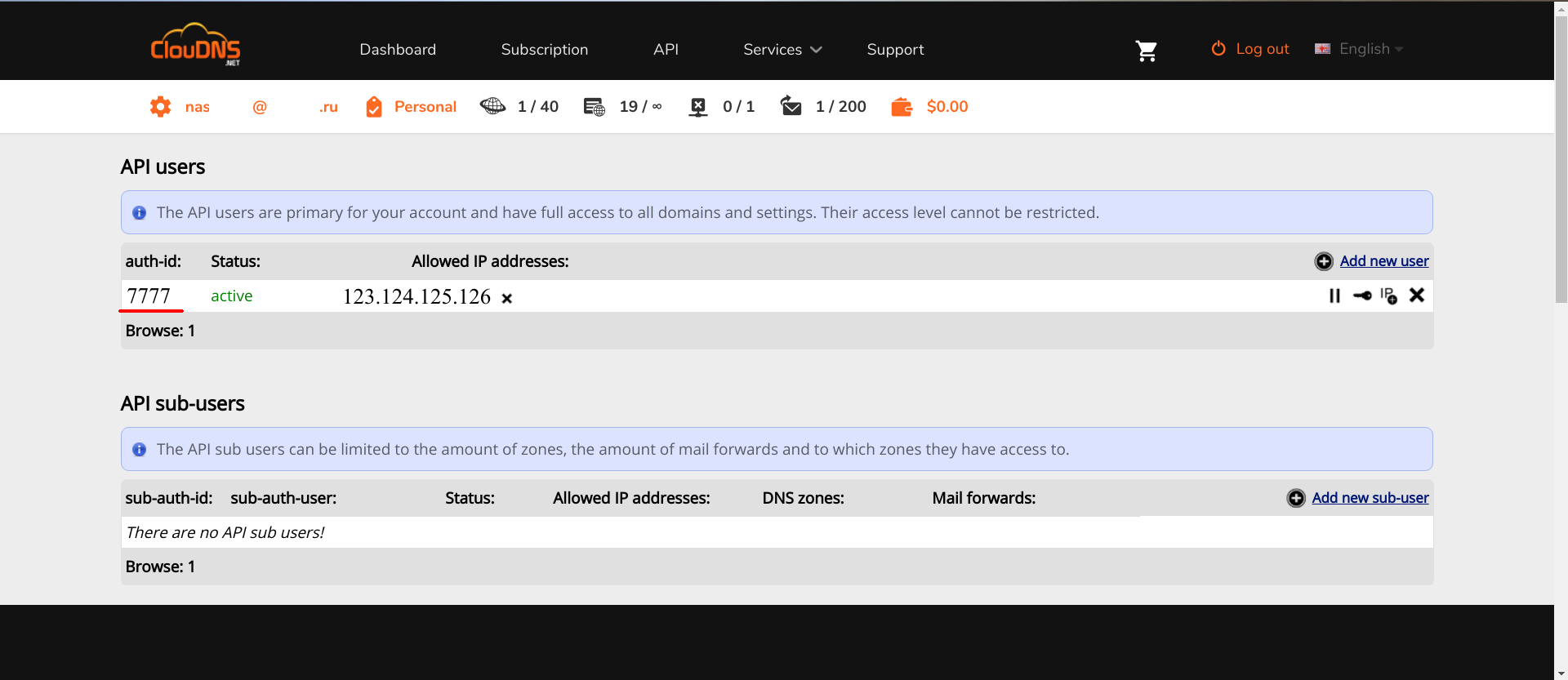

其次,您需要访问API。 在ClouDNS中,它是付费的,因此您必须先付费。 在其他服务中,它是免费的。 如果您知道哪个更好,并且Lexicon支持,那么请在评论中写。

获得对API的访问权限后,您需要在此处添加新的API用户。

"IP address" IP : , API. , , API, auth-id auth-password . Lexicon, .

ClouDNS .

Docker

openmediavault-docker-gui, docker-ce .

, docker-compose , :

apt-get install docker-compose

:

zfs create -p /tank0/docker/services

, /var/lib/docker . ( , SSD), , , .

..,

. .

, .

, GUI , : , .. , .

/var/lib :

service docker stop zfs create -o com.sun:auto-snapshot=false -p /tank0/docker/lib rm -rf /var/lib/docker ln -s /tank0/docker/lib /var/lib/docker service docker start

:

$ ls -l /var/lib/docker lrwxrwxrwx 1 root root 17 Apr 7 12:35 /var/lib/docker -> /tank0/docker/lib

:

docker network create docker0

Docker .

nginx-reverse-proxy

Docker , .

, .

: nginx-proxy letsencrypt-dns .

, OMV 10080 10443, 80 443.

/tank0/docker/services/nginx-proxy/docker-compose.yml version: '2' networks: docker0: external: name: docker0 services: nginx-proxy: networks: - docker0 restart: always image: jwilder/nginx-proxy ports: - "80:80" - "443:443" volumes: - ./certs:/etc/nginx/certs:ro - ./vhost.d:/etc/nginx/vhost.d - ./html:/usr/share/nginx/html - /var/run/docker.sock:/tmp/docker.sock:ro - ./local-config:/etc/nginx/conf.d - ./nginx.tmpl:/app/nginx.tmpl labels: - "com.github.jrcs.letsencrypt_nginx_proxy_companion.nginx_proxy=true" letsencrypt-dns: image: adferrand/letsencrypt-dns volumes: - ./certs/letsencrypt:/etc/letsencrypt environment: - "LETSENCRYPT_USER_MAIL=MAIL@MAIL.COM" - "LEXICON_PROVIDER=cloudns" - "LEXICON_OPTIONS=--delegated NAS.cloudns.cc" - "LEXICON_PROVIDER_OPTIONS=--auth-id=CLOUDNS_ID --auth-password=CLOUDNS_PASSWORD"

:

- nginx-reverse-proxy — c .

- letsencrypt-dns — ACME Let's Encrypt.

nginx-reverse-proxy jwilder/nginx-proxy .

docker0 — , , docker-compose.

nginx-proxy — , . docker0. , 80 443 ports (, , docker0, ).

restart: always , .

:

certs /etc/nginx/certs — , , Let's Encrypt. ACME ../vhost.d:/etc/nginx/vhost.d — . ../html:/usr/share/nginx/html — . ./var/run/docker.sock , /tmp/docker.sock — Docker . docker-gen ../local-config , /etc/nginx/conf.d — nginx. , ../nginx.tmpl , /app/nginx.tmpl — nginx, docker-gen .

letsencrypt-dns adferrand/letsencrypt-dns . ACME Lexicon, DNS .

certs/letsencrypt /etc/letsencrypt .

, :

LETSENCRYPT_USER_MAIL=MAIL@MAIL.COM — Let's Encrypt. , .LEXICON_PROVIDER=cloudns — Lexicon. — cloudns .LEXICON_PROVIDER_OPTIONS=--auth-id=CLOUDNS_ID --auth-password=CLOUDNS_PASSWORD --delegated=NAS.cloudns.cc — CLOUDNS_ID ClouDNS . CLOUDNS_PASSWORD — , API. NAS.cloudns.cc, NAS — DNS . cloudns , (cloudns.cc), ClouDNS API .

: .

, , , , Let's encrypt:

$ ls ./certs/letsencrypt/ accounts archive csr domains.conf keys live renewal renewal-hooks

, , .

/tank0/docker/services/nginx-proxy/nginx.tmpl {{ $CurrentContainer := where $ .Docker.CurrentContainerID | first }} {{ define }} {{ if .Address }} {{/* If we got the containers from swarm and this container's port is published to host, use host IP:PORT */}} {{ if and .Container.Node.ID .Address.HostPort }} # {{ .Container.Node.Name }}/{{ .Container.Name }} server {{ .Container.Node.Address.IP }}:{{ .Address.HostPort }}; {{/* If there is no swarm node or the port is not published on host, use container's IP:PORT */}} {{ else if .Network }} # {{ .Container.Name }} server {{ .Network.IP }}:{{ .Address.Port }}; {{ end }} {{ else if .Network }} # {{ .Container.Name }} {{ if .Network.IP }} server {{ .Network.IP }} down; {{ else }} server 127.0.0.1 down; {{ end }} {{ end }} {{ end }} # If we receive X-Forwarded-Proto, pass it through; otherwise, pass along the # scheme used to connect to this server map $http_x_forwarded_proto $proxy_x_forwarded_proto { default $http_x_forwarded_proto; '' $scheme; } # If we receive X-Forwarded-Port, pass it through; otherwise, pass along the # server port the client connected to map $http_x_forwarded_port $proxy_x_forwarded_port { default $http_x_forwarded_port; '' $server_port; } # If we receive Upgrade, set Connection to ; otherwise, delete any # Connection header that may have been passed to this server map $http_upgrade $proxy_connection { default upgrade; '' close; } # Apply fix for very long server names server_names_hash_bucket_size 128; # Default dhparam {{ if (exists ) }} ssl_dhparam /etc/nginx/dhparam/dhparam.pem; {{ end }} # Set appropriate X-Forwarded-Ssl header map $scheme $proxy_x_forwarded_ssl { default off; https on; } gzip_types text/plain text/css application/javascript application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript; log_format vhost '$host $remote_addr - $remote_user [$time_local] ' '"$request" $status $body_bytes_sent ' '"$http_referer" "$http_user_agent"'; access_log off; {{ if $.Env.RESOLVERS }} resolver {{ $.Env.RESOLVERS }}; {{ end }} {{ if (exists ) }} include /etc/nginx/proxy.conf; {{ else }} # HTTP 1.1 support proxy_http_version 1.1; proxy_buffering off; proxy_set_header Host $http_host; proxy_set_header Upgrade $http_upgrade; proxy_set_header Connection $proxy_connection; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-Forwarded-Proto $proxy_x_forwarded_proto; proxy_set_header X-Forwarded-Ssl $proxy_x_forwarded_ssl; proxy_set_header X-Forwarded-Port $proxy_x_forwarded_port; # Mitigate httpoxy attack (see README for details) proxy_set_header Proxy ; {{ end }} {{ $enable_ipv6 := eq (or ($.Env.ENABLE_IPV6) ) }} server { server_name _; # This is just an invalid value which will never trigger on a real hostname. listen 80; {{ if $enable_ipv6 }} listen [::]:80; {{ end }} access_log /var/log/nginx/access.log vhost; return 503; } {{ if (and (exists ) (exists )) }} server { server_name _; # This is just an invalid value which will never trigger on a real hostname. listen 443 ssl http2; {{ if $enable_ipv6 }} listen [::]:443 ssl http2; {{ end }} access_log /var/log/nginx/access.log vhost; return 503; ssl_session_tickets off; ssl_certificate /etc/nginx/certs/default.crt; ssl_certificate_key /etc/nginx/certs/default.key; } {{ end }} {{ range $host, $containers := groupByMulti $ }} {{ $host := trim $host }} {{ $is_regexp := hasPrefix $host }} {{ $upstream_name := when $is_regexp (sha1 $host) $host }} # {{ $host }} upstream {{ $upstream_name }} { {{ range $container := $containers }} {{ $addrLen := len $container.Addresses }} {{ range $knownNetwork := $CurrentContainer.Networks }} {{ range $containerNetwork := $container.Networks }} {{ if (and (ne $containerNetwork.Name ) (or (eq $knownNetwork.Name $containerNetwork.Name) (eq $knownNetwork.Name ))) }} ## Can be connected with network {{/* If only 1 port exposed, use that */}} {{ if eq $addrLen 1 }} {{ $address := index $container.Addresses 0 }} {{ template (dict $container $address $containerNetwork) }} {{/* If more than one port exposed, use the one matching VIRTUAL_PORT env var, falling back to standard web port 80 */}} {{ else }} {{ $port := coalesce $container.Env.VIRTUAL_PORT }} {{ $address := where $container.Addresses $port | first }} {{ template (dict $container $address $containerNetwork) }} {{ end }} {{ else }} # Cannot connect to network of this container server 127.0.0.1 down; {{ end }} {{ end }} {{ end }} {{ end }} } {{ $default_host := or ($.Env.DEFAULT_HOST) }} {{ $default_server := index (dict $host $default_host ) $host }} {{/* Get the VIRTUAL_PROTO defined by containers w/ the same vhost, falling back to */}} {{ $proto := trim (or (first (groupByKeys $containers )) ) }} {{/* Get the NETWORK_ACCESS defined by containers w/ the same vhost, falling back to */}} {{ $network_tag := or (first (groupByKeys $containers )) }} {{/* Get the HTTPS_METHOD defined by containers w/ the same vhost, falling back to */}} {{ $https_method := or (first (groupByKeys $containers )) }} {{/* Get the SSL_POLICY defined by containers w/ the same vhost, falling back to */}} {{ $ssl_policy := or (first (groupByKeys $containers )) }} {{/* Get the HSTS defined by containers w/ the same vhost, falling back to */}} {{ $hsts := or (first (groupByKeys $containers )) }} {{/* Get the VIRTUAL_ROOT By containers w/ use fastcgi root */}} {{ $vhost_root := or (first (groupByKeys $containers )) }} {{/* Get the first cert name defined by containers w/ the same vhost */}} {{ $certName := (first (groupByKeys $containers )) }} {{/* Get the best matching cert by name for the vhost. */}} {{ $vhostCert := (closest (dir ) (printf $host))}} {{/* vhostCert is actually a filename so remove any suffixes since they are added later */}} {{ $vhostCert := trimSuffix $vhostCert }} {{ $vhostCert := trimSuffix $vhostCert }} {{/* Use the cert specified on the container or fallback to the best vhost match */}} {{ $cert := (coalesce $certName $vhostCert) }} {{ $is_https := (and (ne $https_method ) (ne $cert ) (or (and (exists (printf $cert)) (exists (printf $cert))) (and (exists (printf $cert)) (exists (printf $cert)))) ) }} {{ if $is_https }} {{ if eq $https_method }} server { server_name {{ $host }}; listen 80 {{ $default_server }}; {{ if $enable_ipv6 }} listen [::]:80 {{ $default_server }}; {{ end }} access_log /var/log/nginx/access.log vhost; return 301 https://$host$request_uri; } {{ end }} server { server_name {{ $host }}; listen 443 ssl http2 {{ $default_server }}; {{ if $enable_ipv6 }} listen [::]:443 ssl http2 {{ $default_server }}; {{ end }} access_log /var/log/nginx/access.log vhost; {{ if eq $network_tag }} # Only allow traffic from internal clients include /etc/nginx/network_internal.conf; {{ end }} {{ if eq $ssl_policy }} ssl_protocols TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256'; {{ else if eq $ssl_policy }} ssl_protocols TLSv1 TLSv1.1 TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA:ECDHE-RSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-RSA-AES256-SHA256:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:!DSS'; {{ else if eq $ssl_policy }} ssl_protocols SSLv3 TLSv1 TLSv1.1 TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:ECDHE-RSA-DES-CBC3-SHA:ECDHE-ECDSA-DES-CBC3-SHA:EDH-RSA-DES-CBC3-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:DES-CBC3-SHA:HIGH:SEED:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!RSAPSK:!aDH:!aECDH:!EDH-DSS-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA:!SRP'; {{ else if eq $ssl_policy }} ssl_protocols TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:AES128-GCM-SHA256:AES128-SHA256:AES256-GCM-SHA384:AES256-SHA256'; {{ else if eq $ssl_policy }} ssl_protocols TLSv1.1 TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA'; {{ else if eq $ssl_policy }} ssl_protocols TLSv1 TLSv1.1 TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA'; {{ else if eq $ssl_policy }} ssl_protocols TLSv1 TLSv1.1 TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA:DES-CBC3-SHA'; {{ else if eq $ssl_policy }} ssl_protocols TLSv1 TLSv1.1 TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:DHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA:DHE-DSS-AES128-SHA:DES-CBC3-SHA'; {{ else if eq $ssl_policy }} ssl_protocols TLSv1 TLSv1.1 TLSv1.2; ssl_ciphers 'ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:DHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA:DHE-DSS-AES128-SHA'; {{ end }} ssl_prefer_server_ciphers on; ssl_session_timeout 5m; ssl_session_cache shared:SSL:50m; ssl_session_tickets off; {{ if (and (exists (printf $cert)) (exists (printf $cert))) }} ssl_certificate /etc/nginx/certs/letsencrypt/live/{{ (printf $cert) }}; ssl_certificate_key /etc/nginx/certs/letsencrypt/live/{{ (printf $cert) }}; {{ else if (and (exists (printf $cert)) (exists (printf $cert))) }} ssl_certificate /etc/nginx/certs/{{ (printf $cert) }}; ssl_certificate_key /etc/nginx/certs/{{ (printf $cert) }}; {{ end }} {{ if (exists (printf $cert)) }} ssl_dhparam {{ printf $cert }}; {{ end }} {{ if (exists (printf $cert)) }} ssl_stapling on; ssl_stapling_verify on; ssl_trusted_certificate {{ printf $cert }}; {{ end }} {{ if (and (ne $https_method ) (ne $hsts )) }} add_header Strict-Transport-Security always; {{ end }} {{ if (exists (printf $host)) }} include {{ printf $host }}; {{ else if (exists ) }} include /etc/nginx/vhost.d/default; {{ end }} location / { {{ if eq $proto }} include uwsgi_params; uwsgi_pass {{ trim $proto }}://{{ trim $upstream_name }}; {{ else if eq $proto }} root {{ trim $vhost_root }}; include fastcgi.conf; fastcgi_pass {{ trim $upstream_name }}; {{ else }} proxy_pass {{ trim $proto }}://{{ trim $upstream_name }}; {{ end }} {{ if (exists (printf $host)) }} auth_basic ; auth_basic_user_file {{ (printf $host) }}; {{ end }} {{ if (exists (printf $host)) }} include {{ printf $host}}; {{ else if (exists ) }} include /etc/nginx/vhost.d/default_location; {{ end }} } } {{ end }} {{ if or (not $is_https) (eq $https_method ) }} server { server_name {{ $host }}; listen 80 {{ $default_server }}; {{ if $enable_ipv6 }} listen [::]:80 {{ $default_server }}; {{ end }} access_log /var/log/nginx/access.log vhost; {{ if eq $network_tag }} # Only allow traffic from internal clients include /etc/nginx/network_internal.conf; {{ end }} {{ if (exists (printf $host)) }} include {{ printf $host }}; {{ else if (exists ) }} include /etc/nginx/vhost.d/default; {{ end }} location / { {{ if eq $proto }} include uwsgi_params; uwsgi_pass {{ trim $proto }}://{{ trim $upstream_name }}; {{ else if eq $proto }} root {{ trim $vhost_root }}; include fastcgi.conf; fastcgi_pass {{ trim $upstream_name }}; {{ else }} proxy_pass {{ trim $proto }}://{{ trim $upstream_name }}; {{ end }} {{ if (exists (printf $host)) }} auth_basic ; auth_basic_user_file {{ (printf $host) }}; {{ end }} {{ if (exists (printf $host)) }} include {{ printf $host}}; {{ else if (exists ) }} include /etc/nginx/vhost.d/default_location; {{ end }} } } {{ if (and (not $is_https) (exists ) (exists )) }} server { server_name {{ $host }}; listen 443 ssl http2 {{ $default_server }}; {{ if $enable_ipv6 }} listen [::]:443 ssl http2 {{ $default_server }}; {{ end }} access_log /var/log/nginx/access.log vhost; return 500; ssl_certificate /etc/nginx/certs/default.crt; ssl_certificate_key /etc/nginx/certs/default.key; } {{ end }} {{ end }} {{ end }}

, nginx /etc/nginx/certs/%s.crt /etc/nginx/certs/%s.pem , %s — ( — , ).

/etc/nginx/certs/letsencrypt/live/%s/{fullchain.pem, privkey.pem} , :

{{ $is_https := (and (ne $https_method "nohttps") (ne $cert "") (or (and (exists (printf "/etc/nginx/certs/letsencrypt/live/%s/fullchain.pem" $cert)) (exists (printf "/etc/nginx/certs/letsencrypt/live/%s/privkey.pem" $cert)) ) (and (exists (printf "/etc/nginx/certs/%s.crt" $cert)) (exists (printf "/etc/nginx/certs/%s.key" $cert)) ) ) ) }}

, domains.conf .

/tank0/docker/services/nginx-proxy/certs/letsencrypt/domains.conf *.NAS.cloudns.cc NAS.cloudns.cc

. , , , client_max_body_size 20, .

/tank0/docker/services/nginx-proxy/local-config/max_upload_size.conf client_max_body_size 20G;

, :

docker-compose up

( ), Ctrl+C :

docker-compose up -d

— nginx, . , , .

, NAS.

.

docker-compose :

/tank0/docker/services/test_nginx/docker-compose.yml version: '2' networks: docker0: external: name: docker0 services: nginx-local: restart: always image: nginx:alpine expose: - 80 - 443 environment: - "VIRTUAL_HOST=test.NAS.cloudns.cc" - "VIRTUAL_PROTO=http" - "VIRTUAL_PORT=80" - CERT_NAME=NAS.cloudns.cc networks: - docker0

:

docker0 — . .expose — , . , 80 HTTP 443 HTTPS.VIRTUAL_HOST=test.NAS.cloudns.cc — , nginx-reverse-proxy .VIRTUAL_PROTO=http — nginx-reverse-proxy . , HTTP.VIRTUAL_PORT=80 — nginx-reverse-proxy.CERT_NAME=NAS.cloudns.cc — . , , . NAS — DNS .networks — , nginx-reverse-proxy docker0 .

, . docker-compose up , test.NAS.cloudns.cc .

:

$ docker-compose up Creating testnginx_nginx-local_1 Attaching to testnginx_nginx-local_1 nginx-local_1 | 172.22.0.5 - - [29/Jul/2018:15:32:02 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537 (KHTML, like Gecko) Chrome/67.0 Safari/537" "192.168.2.3" nginx-local_1 | 2018/07/29 15:32:02 [error] 8

:

, , , , : .

, Ctrl+C, docker-compose down .

local-rpoxy

, nginx-default , nas, omv 10080 10443 .

.

/tank0/docker/services/nginx-local/docker-compose.yml version: '2' networks: docker0: external: name: docker0 services: nginx-local: restart: always image: nginx:alpine expose: - 80 - 443 environment: - "VIRTUAL_HOST=NAS.cloudns.cc,nas,nas.*,www.*,omv.*,nas-controller.nas" - "VIRTUAL_PROTO=http" - "VIRTUAL_PORT=80" - CERT_NAME=NAS.cloudns.cc volumes: - ./local-config:/etc/nginx/conf.d networks: - docker0

docker-compose , .

, , , NAS.cloudns.cc . , NAS DNS , .

/tank0/docker/services/nginx-local/local-config/default.conf # If we receive X-Forwarded-Proto, pass it through; otherwise, pass along the # scheme used to connect to this server map $http_x_forwarded_proto $proxy_x_forwarded_proto { default $http_x_forwarded_proto; '' $scheme; } # If we receive X-Forwarded-Port, pass it through; otherwise, pass along the # server port the client connected to map $http_x_forwarded_port $proxy_x_forwarded_port { default $http_x_forwarded_port; '' $server_port; } # Set appropriate X-Forwarded-Ssl header map $scheme $proxy_x_forwarded_ssl { default off; https on; } access_log on; error_log on; # HTTP 1.1 support proxy_http_version 1.1; proxy_buffering off; proxy_set_header Host $http_host; proxy_set_header Upgrade $http_upgrade; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-Forwarded-Proto $proxy_x_forwarded_proto; proxy_set_header X-Forwarded-Ssl $proxy_x_forwarded_ssl; proxy_set_header X-Forwarded-Port $proxy_x_forwarded_port; # Mitigate httpoxy attack (see README for details) proxy_set_header Proxy ""; server { server_name _; # This is just an invalid value which will never trigger on a real hostname. listen 80; return 503; } server { server_name www.* nas.* omv.* ""; listen 80; location / { proxy_pass https:

172.21.0.1 — . 443, OMV HTTPS. .https://nas-controller/ — -, IPMI, nas, nas-controller.nas, nas-controller. .

LDAP

LDAP-

LDAP- — .

Docker . , , .

LDIF- .

/tank0/docker/services/ldap/docker-compose.yml version: "2" networks: ldap: docker0: external: name: docker0 services: open-ldap: image: "osixia/openldap:1.2.0" hostname: "open-ldap" restart: always environment: - "LDAP_ORGANISATION=NAS" - "LDAP_DOMAIN=nas.nas" - "LDAP_ADMIN_PASSWORD=ADMIN_PASSWORD" - "LDAP_CONFIG_PASSWORD=CONFIG_PASSWORD" - "LDAP_TLS=true" - "LDAP_TLS_ENFORCE=false" - "LDAP_TLS_CRT_FILENAME=ldap_server.crt" - "LDAP_TLS_KEY_FILENAME=ldap_server.key" - "LDAP_TLS_CA_CRT_FILENAME=ldap_server.crt" volumes: - ./certs:/container/service/slapd/assets/certs - ./ldap_data/var/lib:/var/lib/ldap - ./ldap_data/etc/ldap/slapd.d:/etc/ldap/slapd.d networks: - ldap ports: - 172.21.0.1:389:389 - 172.21.0.1::636:636 phpldapadmin: image: "osixia/phpldapadmin:0.7.1" hostname: "nas.nas" restart: always networks: - ldap - docker0 expose: - 443 links: - open-ldap:open-ldap-server volumes: - ./certs:/container/service/phpldapadmin/assets/apache2/certs environment: - VIRTUAL_HOST=ldap.* - VIRTUAL_PORT=443 - VIRTUAL_PROTO=https - CERT_NAME=NAS.cloudns.cc - "PHPLDAPADMIN_LDAP_HOSTS=open-ldap-server" #- "PHPLDAPADMIN_HTTPS=false" - "PHPLDAPADMIN_HTTPS_CRT_FILENAME=certs/ldap_server.crt" - "PHPLDAPADMIN_HTTPS_KEY_FILENAME=private/ldap_server.key" - "PHPLDAPADMIN_HTTPS_CA_CRT_FILENAME=certs/ldap_server.crt" - "PHPLDAPADMIN_LDAP_CLIENT_TLS_REQCERT=allow" ldap-ssp: image: openfrontier/ldap-ssp:https volumes: #- ./ssp/mods-enabled/ssl.conf:/etc/apache2/mods-enabled/ssl.conf - /etc/ssl/certs/ssl-cert-snakeoil.pem:/etc/ssl/certs/ssl-cert-snakeoil.pem - /etc/ssl/private/ssl-cert-snakeoil.key:/etc/ssl/private/ssl-cert-snakeoil.key restart: always networks: - ldap - docker0 expose: - 80 links: - open-ldap:open-ldap-server environment: - VIRTUAL_HOST=ssp.* - VIRTUAL_PORT=80 - VIRTUAL_PROTO=http - CERT_NAME=NAS.cloudns.cc - "LDAP_URL=ldap://open-ldap-server:389" - "LDAP_BINDDN=cn=admin,dc=nas,dc=nas" - "LDAP_BINDPW=ADMIN_PASSWORD" - "LDAP_BASE=ou=users,dc=nas,dc=nas" - "MAIL_FROM=admin@nas.nas" - "PWD_MIN_LENGTH=8" - "PWD_MIN_LOWER=3" - "PWD_MIN_DIGIT=2" - "SMTP_HOST=" - "SMTP_USER=" - "SMTP_PASS="

:

LDAP- , :

LDAP_ORGANISATION=NAS — . .LDAP_DOMAIN=nas.nas — . . , .LDAP_ADMIN_PASSWORD=ADMIN_PASSWORD — .LDAP_CONFIG_PASSWORD=CONFIG_PASSWORD — .

-, " ", .

:

/container/service/slapd/assets/certs certs — . ../ldap_data/ — , . LDAP .

ldap , 389 ( LDAP) 636 (LDAP SSL, ) .

PhpLdapAdmin : LDAP ldap 443 docker0 , , nginx-reverse-proxy.

:

VIRTUAL_HOST=ldap.* — , nginx-reverse-proxy .VIRTUAL_PORT=443 — nginx-reverse-proxy.VIRTUAL_PROTO=https — nginx-reverse-proxy.CERT_NAME=NAS.cloudns.cc — , .

SSL .

SSP HTTP .

, , .

— LDAP.

LDAP_URL=ldap://open-ldap-server:389 — LDAP (. links ).LDAP_BINDDN=cn=admin,dc=nas,dc=nas — .LDAP_BINDPW=ADMIN_PASSWORD — , , open-ldap.LDAP_BASE=ou=users,dc=nas,dc=nas — , .

LDAP LDAP :

apt-get install ldap-utils ldapadd -x -H ldap://172.21.0.1 -D "cn=admin,dc=nas,dc=nas" -W -f ldifs/inititialize_ldap.ldif ldapadd -x -H ldap://172.21.0.1 -D "cn=admin,dc=nas,dc=nas" -W -f ldifs/base.ldif ldapadd -x -H ldap://172.21.0.1 -D "cn=admin,cn=config" -W -f ldifs/gitlab_attr.ldif

gitlab_attr.ldif , Gitlab ( ) .

.

LDAP $ ldapsearch -x -H ldap://172.21.0.1 -b dc=nas,dc=nas -D "cn=admin,dc=nas,dc=nas" -W Enter LDAP Password:

LDAP . WEB-.

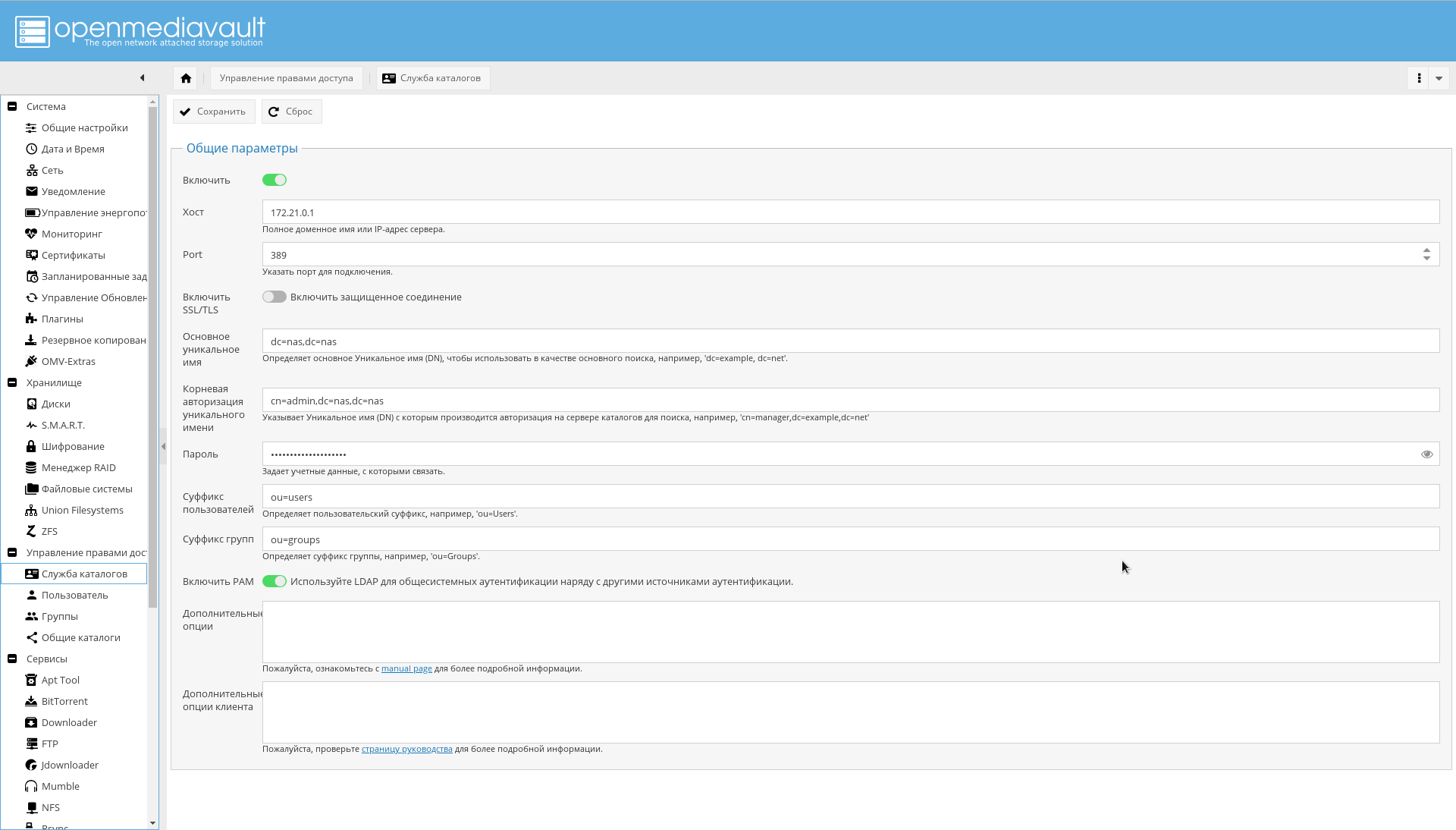

OMV LDAP

LDAP , OMV : , , , , — .

LDAP .

:

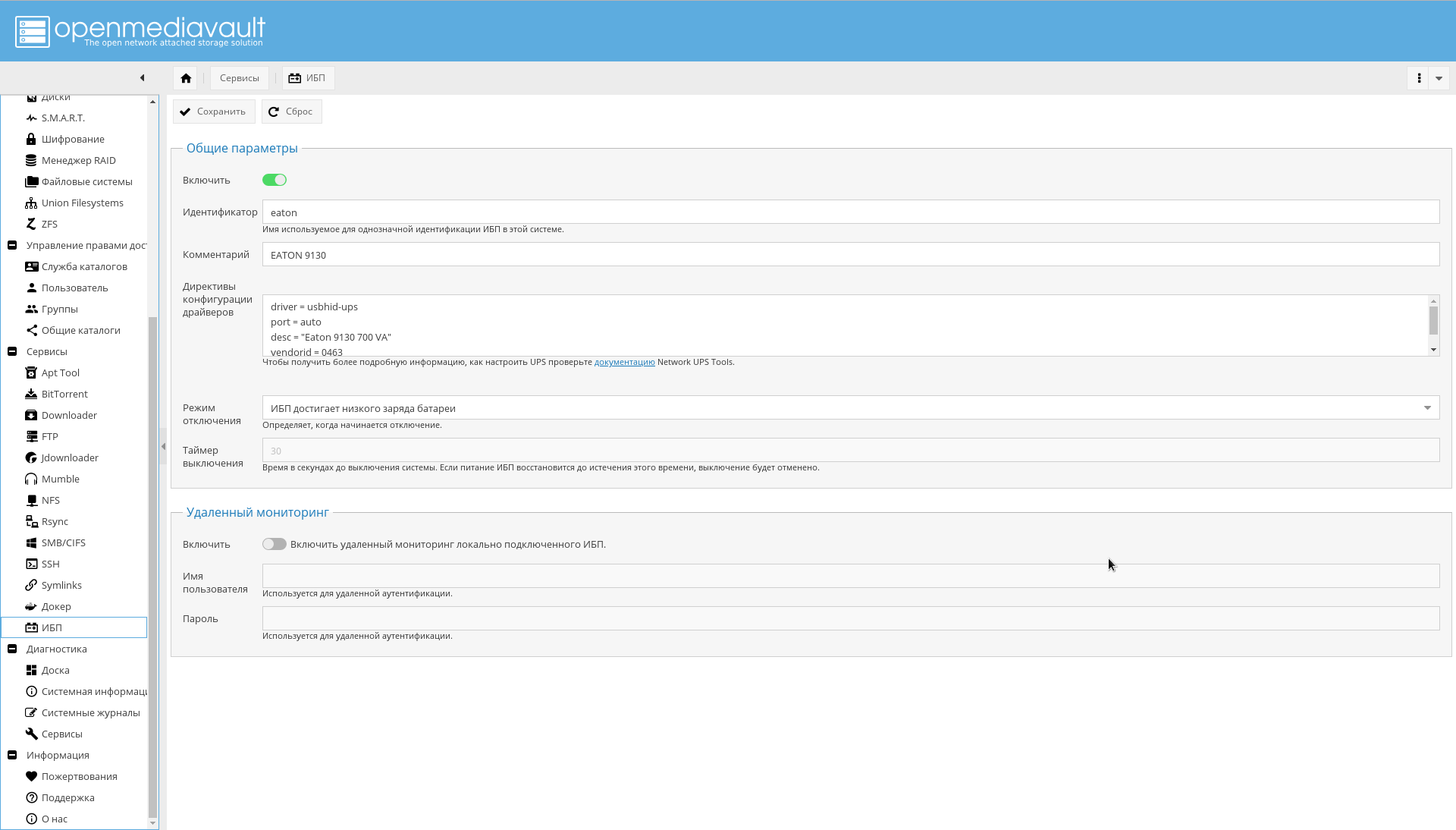

, , NAS USB.

.

NUT GUI OMV.

"->" , , , , "eaton".

" " :

driver = usbhid-ups port = auto desc = "Eaton 9130 700 VA" vendorid = 0463 pollinterval = 10

driver = usbhid-ups — USB, USB HID.vendorid — , lsusb .pollinterval — c.

.

lsusb , :

" " " ".

, :

. , .

.

结论

. , , , , .

— .

-, OMV .

WEB-, , :

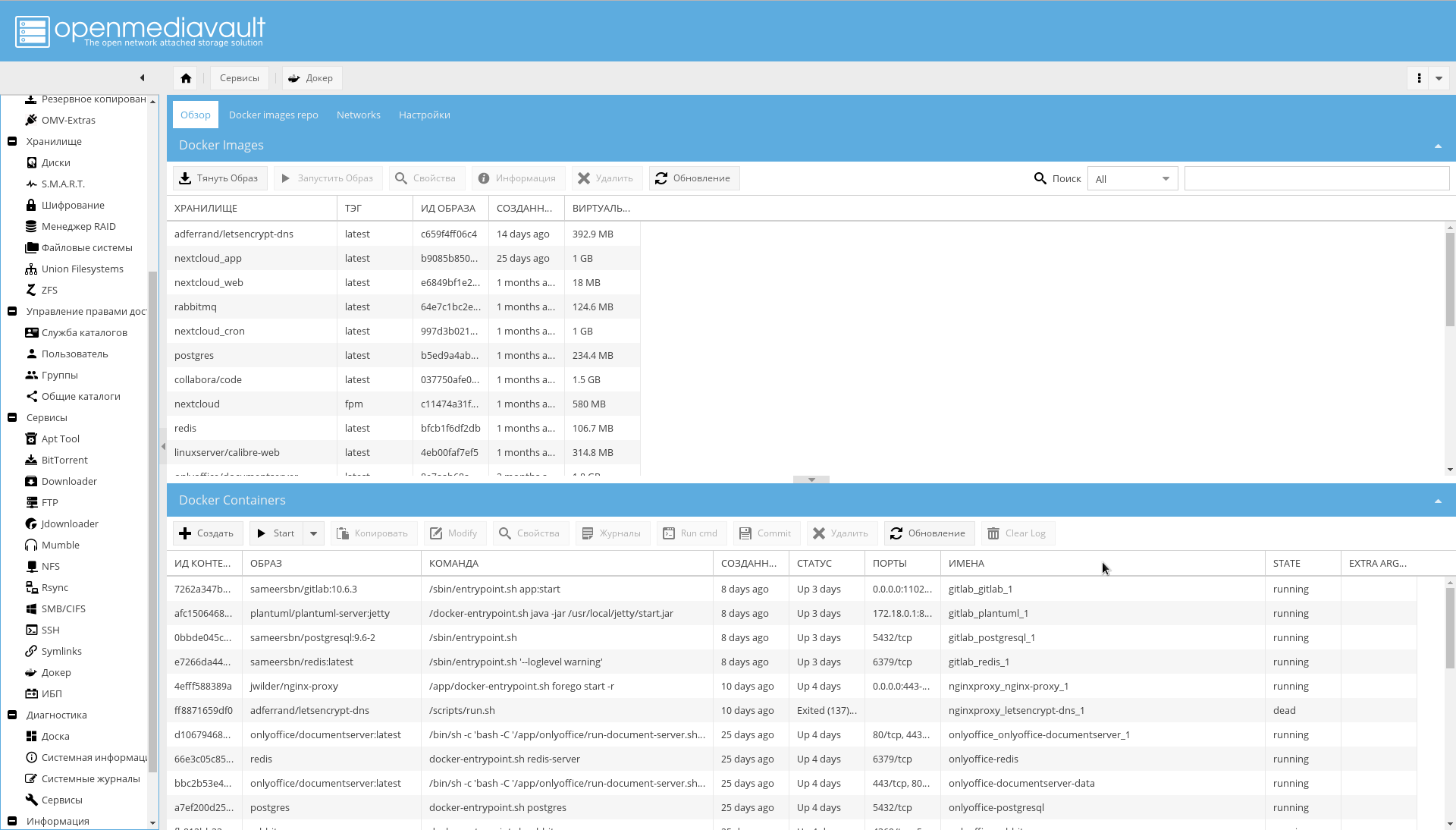

Docker WEB-:

, OMV .

:

:

CPU:

.

!