基于Icinga2和Puppet的监控系统的自动化

基于Icinga2和Puppet的监控系统的自动化

让我们谈谈...基础架构即代码(IaC)。

在Habré上有一些关于Icinga2的很好的文章,也有关于Puppet的很好的文章:

Icinga2是一个简单的选择我们以最低的费用在icinga2上进行微监控从头开始设置现代Puppet服务器但是,这两个惊人的系统的自动化和集成的主题根本没有公开。

在本指南中,我将在一个“活着的”示例中展示如何将这两者结合

系统,请使用功能强大的工具来监视具有所有必要功能的基础架构。 本文是有关“全部装在一个瓶子中”安装软件包的一种操作指南。 完成本指南后,您将获得完整的运行监控解决方案,可以自己进一步“完成”。 试试吧!

因此:

我们提出了一个新主人。 我们需要:

1.使其监视自动出现在Icinga2中,并创建基本检查:

支票

| 快照

| 说明

|

主持人

|

| 在给定的频率下,我们使用ping命令检查主机是否处于“活动状态”。

|

磁盘使用率

|

| 检查我们是否有足够的可用磁盘空间。 |

平均负载

|

| 我们动态监视服务器上的负载。 考虑到其上的处理器数量。 |

可用内存

|

| 我们检查服务器上是否有足够的可用内存。 |

开放端口

|

| 我们扫描服务器上的端口并创建开放端口的映射。 我们监视我们没有新的打开或关闭的端口。 |

重要更新

|

| 我们监视服务器上关键更新的存在。 |

2.以方便易懂的方式为各种服务添加自定义检查。 然后会向导演展示什么,我们是好伙伴,并获得奖赏!

一些例子:

服务专区

| YAML验证码

|

|---|

| '%{::fqdn} virtual host' : target: /etc/icinga2/zones.d/master/%{::fqdn}.conf apply: true assign: [ 'host.name == %{::fqdn}' ] display_name: '%{::fqdn} virtualhost' check_command: 'http' vars: http_uri: / http_ssl: true http_vhost: 'hostname' http_address: "%{lookup('host_address')}"

|

PostgreSQL的检查我们是否可以连接到PostgreSQL数据库。

| '%{::fqdn} PostgreSQL': target: /etc/icinga2/zones.d/master/%{::fqdn}.conf apply: true assign: [ 'host.name == %{::fqdn}' ] display_name: 'PostgreSQL' command_endpoint: '%{::fqdn}' check_command: "postgres" vars: postgres_host: "localhost" postgres_action: "connection" phone_notifications: true

|

Nginx状态我们通过stub_status监视Nginx的状态。

| '%{::fqdn} nginx status' : target: /etc/icinga2/zones.d/master/%{::fqdn}.conf apply: true assign: [ 'host.name == %{::fqdn}' ] command_endpoint: '%{::fqdn}' display_name: 'nginx status' check_command: 'nginx_status' vars: nginx_status_host_address: localhost nginx_status_servername: server.com nginx_status_critical: '1600,60,30' nginx_status_warn: '1500,55,25'

|

3.一切都整洁,可靠和美丽。 最重要的是,花费不超过30分钟的时间进行初始设置。

您必须具有Docker的经验,因此必须具有Linux的经验-本身。

该设置在Debian / Ubuntu下进行了描述。 而且,尽管我没有理由不让他不在其他类似Unix的系统上工作,但我本人无法提供此类保证。 我有几台使用CentOS的计算机,它可以在其中运行,但是尽管如此,大多数还是Debian / Ubuntu。

让我们开始吧

我马上说,当整个主机配置为服务,配置,软件,帐户等时,这对我来说很方便。 -它们由一个yaml文件描述,实际上避免了记录基础结构并提供了清晰的配置。 他在git存储库中打开了相应的项目,文件名与主机名相对应,然后打开所需主机的配置。 您可以立即查看主机上有哪些服务,从中备份了哪些内容,监视了哪些内容等。

这是存储库中的项目结构的外观: project_1/hostname1.com.yaml project_2/hostname2.com.yaml project_3/hostname3.com.yaml

我在这里使用这样的模板,它描述了我们任何服务器的配置:

完整范本 #============================|INPUT DATA|=================================# #---------------------------------------|VARS|----------------------------# #---//Information about variables, keys & contacts//----------------------# host_address: xxxx my_company: my_mail_domain: my_ssh_port: #---------------------------------------|CLASSES|-------------------------# #---//Classes are modules installed on the server.//----------------------# #---//These modules process the arguments typed below.//------------------# #---//Without classes nothing will work.//--------------------------------# #---//Class default_role is mandatory. This class will install//----------# #---//etckeeper, some required perl modules and manages all the logics.//-# classes: - default_role #---------------------------------------|TIMEZONE|------------------------# #---//Set timezone, which will be used on the host.//---------------------# timezone::timezone: Europe/Moscow #---------------------------------------|FACTS|---------------------------# #---//Facts are variables which puppet agent uses.//----------------------# facter::facts_hash: role: value: 'name' company: value: 'name of company' file: 'location.txt' #=========================================================================# #============================|PUPPET|=====================================# #---//Settings for puppet agent.//----------------------------------------# puppet::runmode: cron puppet::ca_server: puppet::puppetmaster: #=========================================================================# #============================|CRON TASKS|=================================# cron_tasks: Name: command: user: minute: '' hour: '' #=========================================================================# #============================|SUPERVISOR|=================================# #---//Supervisor is a process manager//-----------------------------------# supervisord::install_pip: false supervisord::install_init: false supervisord::service_name: supervisor supervisord::package_provider: apt supervisord::executable: /usr/bin/supervisord supervisord::executable_ctl: /usr/bin/supervisorctl supervisord::config_file: /etc/supervisor/supervisord.conf supervisord::programs: 'name': ensure: present command: 'su - rails -c "/home/name/s2"' autostart: no autorestart: 'false' directory: /home/name/domainName/current #=========================================================================# #============================|SECURITY|===================================# #-------------------------------------|FIREWALL|--------------------------# #---//Iptables rules//----------------------------------------------------# firewall: 096 Allow inbound SSH: dport: proto: tcp action: accept #-------------------------------------|FAIL2BAN|--------------------------# #-------------------------------------|ACCESS|----------------------------# #--------------------------------------------|ACCOUNTS|-------------------# #---//Discription of accounts which will be created on server.//----------# accounts: user: shell: '/bin/bash' password: '' locked: false purge_sshkeys: true groups: - docker sshkeys: - #--------------------------------------------|SUDO|-----------------------# #---//Appointment permissions for users.//--------------------------------# sudo::config_file_replace: false sudo::configs: user: content: #--------------------------------------------|SSH|------------------------# #--------//Settings for ssh server.//-------------------------------------# ssh::storeconfigs_enabled: true ssh::server_options: Protocol: '2' Port: PasswordAuthentication: 'yes' PermitRootLogin: 'without-password' SyslogFacility: 'AUTHPRIV' UsePAM: 'yes' X11Forwarding: 'no' #--------------------------------------------|VPN|------------------------# #---//Settings for vpn server.//------------------------------------------# openvpn::servers: 'namehost': country: '' province: '' city: '' organization: '' email: '' server: 'xxxx 255.255.255.0' dev: tun local: openvpn::client_defaults: server: 'namehost' openvpn::clients: # Firstname Lastname 'user': {} openvpn::client_specific_configs: 'user': server: 'namehost' redirect_gateway: true route: - xxxx 255.255.255.255 #=========================================================================# #============================|OPERATING SYSTEM|===========================# #---------------------------------------------|SYSCTL|--------------------# #---//set sysctl parameters//---------------------------------------------# sysctl::base::values: fs.file-max: value: '2097152000' net.netfilter.nf_conntrack_max: value: '1048576' net.nf_conntrack_max: value: '1048576' net.ipv6.conf.all.disable_ipv6: value: '1' vm.oom_kill_allocating_task: value: '1' net.ipv4.ip_forward: value: '0' net.ipv4.tcp_keepalive_time: value: '3' net.ipv4.tcp_keepalive_intvl: value: '60' net.ipv4.tcp_keepalive_probes: value: '9' #---------------------------------------------|RCS|-----------------------# #---//Managment of RC scenario//------------------------------------------# rcs::tmptime: '-1' #---------------------------------------------|WEB SERVERS|---------------# #---------------------------------------------------------|HAPROXY|-------# #---//HAProxy is software that provides a high availability load//--------# #---//balancer and proxy server for TCP and HTTP-based applications//-----# #---// that spreads requests across multiple servers.//-------------------# haproxy::merge_options: true haproxy::defaults_options: log: global maxconn: 20000 option: [ 'tcplog', 'redispatch', 'dontlognull' ] retries: 3 stats: enable timeout: [ 'http-request 10s', 'queue 1m', 'check 10s', 'connect 300000000ms', 'client 300000000ms', 'server 300000000ms' ] haproxy_server: stats: ipaddress: ports: '9090' options: mode: 'http' stats: [ 'uri /', 'auth puppet:123123123' ] postgres: collect_exported: false ipaddress: '0.0.0.0' ports: '5432' options: option: - tcplog balance: roundrobin haproxy_balancemember: hostname1: listening_service: postgres server_names: hostname1 ipaddresses: ports: 6432 options: check hostname2: listening_service: postgres server_names: hostname2 ipaddresses: ports: 6432 options: - check - backup #---------------------------------------------|NGINX|---------------------# #---//Nginx is a web server which can also be used as a reverse proxy,//--# #---//load balancer, mail proxy and HTTP cache//--------------------------# nginx::nginx_cfg_prepend: 'load_module': - modules/ngx_http_geoip_module.so nginx::http_raw_append: - 'real_ip_header X-Forwarded-For;' - 'geoip_country /usr/share/GeoIP/GeoIP.dat;' - 'set_real_ip_from 0.0.0.0/0;' nginx::worker_rlimit_nofile: 16384 nginx::confd: true nginx::server_purrge: true nginx::server::maintenance: true #---------------------------------------------nginx-|MAPS|----------------# nginx::string_mappings: allowed_country: ensure: present string: '$geoip_country_code' mappings: - key: 'default' value: 'yes' - key: 'US' value: 'no' #---------------------------------------------nginx-|UPSTREAMS|-----------# nginx::nginx_upstreams: : ensure: present members: - #---------------------------------------------nginx-|VHOSTS|--------------# nginx::nginx_servers: 'hostname': proxy: 'http://' location_raw_append: - 'if ($allowed_country = no) {return 403;}' try_files: - '' - /index.html - =404 ssl: true ssl_cert: ssl_key: ssl_trusted_cert: ssl_redirect: false ssl_port: 443 error_pages: '403': /usa-restrict.html #---------------------------------------------nginx-|HTTPAUTH|------------# httpauth: 'admin': file: password: '' realm: realm mechanism: basic ensure: present #---------------------------------------------nginx-|LOCATIONS|-----------# nginx::nginx_locations: 'domain1.com/usa-restricted': location: /usa-restrict.html www_root: /home/clientName1/clientName1-client-release/current/dist server: domain1.com ssl: true '^~ domain2.com/resources/upload/': location: '^~ /resources/upload/' server: domain2.com location_alias: '/home/clientName2/upload/' raw_append: - 'if ($allowed_country = no) {return 403;}' '/nginx_status-domain3.com': location: /nginx_status stub_status: on raw_append: - access_log off; - allow 127.0.0.1; - deny all; #---------------------------------------------nginx-|WELL-KNOWN|----------# #---//These locations are needed for SSL certificates generating//--------# #---//with Letsencrypt.//-------------------------------------------------# 'x.hostname.zz/.well-known': location: '/.well-known' server: x.hostname.zz proxy: 'http://kibana' auth_basic: auth_basic_user_file: add_header Pragma public;public, must-revalidate, proxy-revalidate/home/hostname/hostname-release/shared/ecosystem.config.jslogslog_typeformat%{::domain}%{lookup('host_address')}/32(rw,fsid=root,insecure,no_subtree_check,async,no_root_squash)host_addresso=Debian,n=${distro_codename}o=Debian,n=${distro_codename}-securityo=Debian,n=${distro_codename}-updateso=Debian,n=${distro_codename}-proposedo=Debian,n=${distro_codename}-backportso=debian icinga,n=icinga-${distro_codename}o=Zabbix,n=${distro_codename}%{lookup('my_ftp_hostname')}%{lookup('my_backup_ftp_username')}%{lookup('my_backup_ftp_password')}%{lookup('my_backup_compressor')}/tmp/mediastagetv_netbynet%{lookup('host_address')}:/%{lookup('host_address')}redis%{lookup('host_address')}*.*;auth,authpriv.none /var/log/syslog\nmail.* -/var/log/mail.log\n& stopauth,authpriv.* /var/log/auth\n& stop:programname,contains,\ /var/log/puppetlabs/puppet/puppet-agent.log\n& stop:msg,contains,\ /var/log/iptables/iptables.log\n& stop:msg,regex,\ /var/log/admin/auth.log\n& stop:msg,regex,\ /var/log/admin/auth.log\n& stop #---------------------------------------------|LOGWATCH|------------------# #---//Logwatch is a log-analysator for create short reports.//------------# logwatch::format: text logwatch::service: # Ignore this servie - -http - -iptables #---------------------------------------------|LOGROTATE|-----------------# #---//Management of rotation of log files.//------------------------------# my_rclogs_path: '/home/hostname/hostname/shared/log' my_rclogs_amazon_path: '/home/hostname1/hostname1/shared/log_amazon' my_ttlogs_path: '/home/hostname2/hostname2/shared/log' my_ttlogs_amazon_path: '/home/hostname/hostname/shared/log_amazon' logrotate::ensure: latest logrotate::config: dateext: true compress: true logrotate::rules: booking-logs: path: '%{lookup("my_rclogs_path")}/booking_com.log' size: 2500M rotate: 20 copytruncate: true delaycompress: true dateext: true dateformat: -%Y%m%d-%s compress: true postrotate: mv %{lookup('my_rclogs_path')}/booking_com.log*.gz %{lookup('my_rclogs_amazon_path')}/ #---------------------------------------------|ATOP|----------------------# #---//ATOP service displays a new information about CPU//-----------------# #---//and memory utilization.//-------------------------------------------# atop::service: true atop::interval: 30 #----------------------------------------|DNS|----------------------------# #---//Management of file /etc/resolv.conf//-------------------------------# resolv_conf::nameservers: - 0.0.0.0 - 0.0.0.0 #=========================================================================# #============================|DATABASES|==================================# #--------------------------------------|MYSQL|----------------------------# #---//MySQL is a relational database management system.//-----------------# mysql::server::package_ensure: 'installed' #· mysql::server::root_password: mysql::server::manage_config_file: true mysql::server::service_name: 'mysql' # required for puppet module mysql::server::override_options: 'mysqld': 'bind-address': '*' : , : , : , mysql::server::db: : user: password: host: grant: - #-------------------------------------|ELASTICSEARCH|---------------------# #---//Elasticsearch is a search engine based on Lucene.//-----------------# #---//It provides a distributed, multitenant-capable full-text search//---# #---//engine with an HTTP web interface and schema-free JSON documents.//-# elasticsearch::version: 5.5.1 elasticsearch::manage_repo: true elasticsearch::repo_version: 5.x elasticsearch::java_install: false elasticsearch::restart_on_change: true elasticsearch_instance: 'es-01': ensure: 'present' #-------------------------------------|REDIS|-----------------------------# #---//Redis is an in-memory database project implementing//---------------# #---//a distributed, in-memory key-value store with//---------------------# #---//optional durability.//----------------------------------------------# redis::bind: 0.0.0.0 #-------------------------------------|ZOOKEPER|--------------------------# #---//Zooker is a centralized service for distributed systems//-----------# #---//to a hierarchical key-value store, which is used to provide//-------# #---//a distributed configuration service, synchronization service,//-----# #---//and naming registry for large distributed systems.//----------------# zookeeper::init_limit: '1000' zookeeper::id: '1' zookeeper::purge_interval: '1' zookeeper::servers: - #-------------------------------------|POSTGRES|--------------------------# #---//Postgres, is an object-relational database management system//------# #---//with an emphasis on extensibility and standards compliance.//-------# #---//As a database server, its primary functions are to store data//----# #---//securely and return that data in response to requests from other//--# #---//software applications.//--------------------------------------------# postgresql::server::postgres_password: postgresql::server::ip_mask_allow_all_users: '0.0.0.0/0' postgresql::postgresql::server: ip_mask_allow_all_users: '0.0.0.0/32' postgres_db: master: user: password: confluence: user: password: postgres_config: 'max_connections': value: 300 postgres_hba: 'Allow locals without password': order: 1 description: 'locals postgres no password' type: 'host' address: '127.0.0.1/32' database: 'all' user: 'all' auth_method: 'trust' #----------------------------------------------|PGBOUNCER|----------------# #---//PgBouncer is a connection pooler for PostgreSQL//-------------------# pgbouncer::group: postgres pgbouncer::user: postgres pgbouncer::userlist: - user: password: pgbouncer::databases: - source_db: recommender host: dest_db: recommender auth_user: recommender pool_size: 200 auth_pass: - source_db: master host: dest_db: recommender auth_user: recommender pool_size: 50 auth_pass: - source_db: slave host: dest_db: recommender auth_user: recommender pool_size: 200 auth_pass: #=========================================================================# #==============================|APPLICATION SERVICES|=====================# #---------------------------------------------------|CONFLUENCE|----------# #---------------------------------------------------|JENKINS|-------------# #=========================================================================#

监视部分示例:

#============================|MONITORING|=================================# #---------------------------------------|ICINGA SERVICES|-----------------# #---//Managment of monitoring system.//-----------------------------------# icinga2_service: '%{::fqdn} virtual host' : target: /etc/icinga2/zones.d/master/%{::fqdn}.conf apply: true assign: [ 'host.name == %{::fqdn}' ] display_name: '%{::fqdn} virtualhost' check_command: 'http' vars: http_uri: / http_ssl: true http_vhost: 'hostname' http_address: '%{::fqdn} nginx status' : target: /etc/icinga2/zones.d/master/%{::fqdn}.conf apply: true assign: [ 'host.name == %{::fqdn}' ] command_endpoint: '%{::fqdn}' display_name: 'nginx status' check_command: 'nginx_status' vars: nginx_status_host_address: localhost nginx_status_servername: server.com nginx_status_critical: '1600,60,30' nginx_status_warn: '1500,55,25' '%{::fqdn} redis': target: /etc/icinga2/zones.d/master/%{::fqdn}.conf apply: true assign: [ 'host.name == %{::fqdn}' ] display_name: 'Redis' command_endpoint: '%{::fqdn}' check_command: vars: redis_hostname: localhost redis_port: 6379 redis_perfvars: '*' #=========================================================================#

这种方案的一般操作方案仅包含两个简单的操作:

- 我们在主机上启动puppet-主机看到属于它的支票并将其导出到puppetDB。

- 在icinga2容器中运行puppet-来自puppetdb的检查将转换为真正的Icinga2配置。

在这里,许多人会说:

“如果我能举起平常的icinga2并用手或通过Web界面添加支票,为什么要使这一切成为现实?”的确,如果您需要监视十二个主机并提供数百种服务,并且您是一个非常整洁的人,并且拥有良好的记忆力(自然界中找不到),那么围堵花园毫无意义。 如果您拥有相当大的基础架构,并且感觉到您可能忘记了某个地方,那就完全不同了。 在这样的时刻,自动化有很大帮助,因为 有助于将所有物品放在货架上,并在许多方面避免了人为因素。

表示主要优点:

让我们看一下这个模板: icinga2_service: '%{::fqdn} disk service': target: /etc/icinga2/zones.d/master/%{::fqdn}.conf apply: true assign: [ 'host.name == %{::fqdn}' ] display_name: 'Disk usage' command_endpoint: '%{::fqdn}' check_command: 'disk' vars: #All disks disk_all: true disk_exclude_type: - aufs - tmpfs disk_ignore_ereg_path: - /run/docker

+ 1. Hiera — .. . Icinga2, , . , , , .

+ 2. , . — .

+ 3. , .. , Icinga2 , puppet Icinga2.

+ 4.因此,我们已将所有检查存储在一个puppetDB数据库中,我们可以创建功能强大的脚本来进一步自动化,我们将在其中使用此信息。

所以走吧

1.设置人偶。

希望您已经配置了docker和docker-compose。如果没有,那么您需要安装它们:安装Docker ...安装Docker-compose ...2.我们将存储库克隆到我们的服务器:

git clone http://git.comgress64.com/external/puppet-icinga2-how-to.git

3.使用您喜欢的编辑器打开docker-compose.yaml并查看它。

我们看到在这个包中,我们有几个容器同时出现-PuppetServer,PuppetDb,PostgreSQL服务器和PuppetBoard。还从当前目录装入卷。此配置并非所有人都最佳,因此请考虑您的基础架构。某人已经拥有PostgreSQL服务器,某人想将代码存储在另一部分中-这是创造的自由。在此阶段,我建议保留默认模板-您以后可以随时返回它。让我们拿起我们的容器包,看看发生了什么:4.启动Puppet,Postgres,Puppetdb和Graphite容器。

现在,我们已经加载了多个映像并启动了容器,并在PostgreSQL中创建了数据库。让我们等待所有服务器启动-错误可以忽略,因为它们会在一段时间后稳定下来。按Ctrl + C退出日志查看模式。5.检查我们的木偶大师的工作:

docker run --net puppeticinga2howto_default --link puppet:puppet puppet/puppet-agent

6.如果一切正常,您将看到以下结论:

Notice: Applied catalog in 0.03 seconds Changes: Total: 1 Events: Success: 1 Total: 1 Resources: Changed: 1 Out of sync: 1 Total: 8 Time: Schedule: 0.00 File: 0.00 Transaction evaluation: 0.01 Catalog application: 0.03 Convert catalog: 0.04 Config retrieval: 0.45 Node retrieval: 1.38 Last run: 1532605377 Fact generation: 2.24 Plugin sync: 4.50 Filebucket: 0.00 Total: 8.65 Version: Config: 1532605376 Puppet: 5.5.1

设置Icinga2服务器。7.通过执行以下操作从Dockerfile中创建映像:

docker-compose build icinga

8.运行将容纳Icinga2服务器的容器。

docker-compose up -d icinga

9.到目前为止,我们的容器是空的。让我们通过执行以下操作来使用我们的Puppet服务器配置icinga2服务器:

10.安装并配置Icinga,Icingaweb2和Apache。

您的Icinga2服务器已准备就绪!

Icingaweb2

1.让我们看看我们得到了什么。

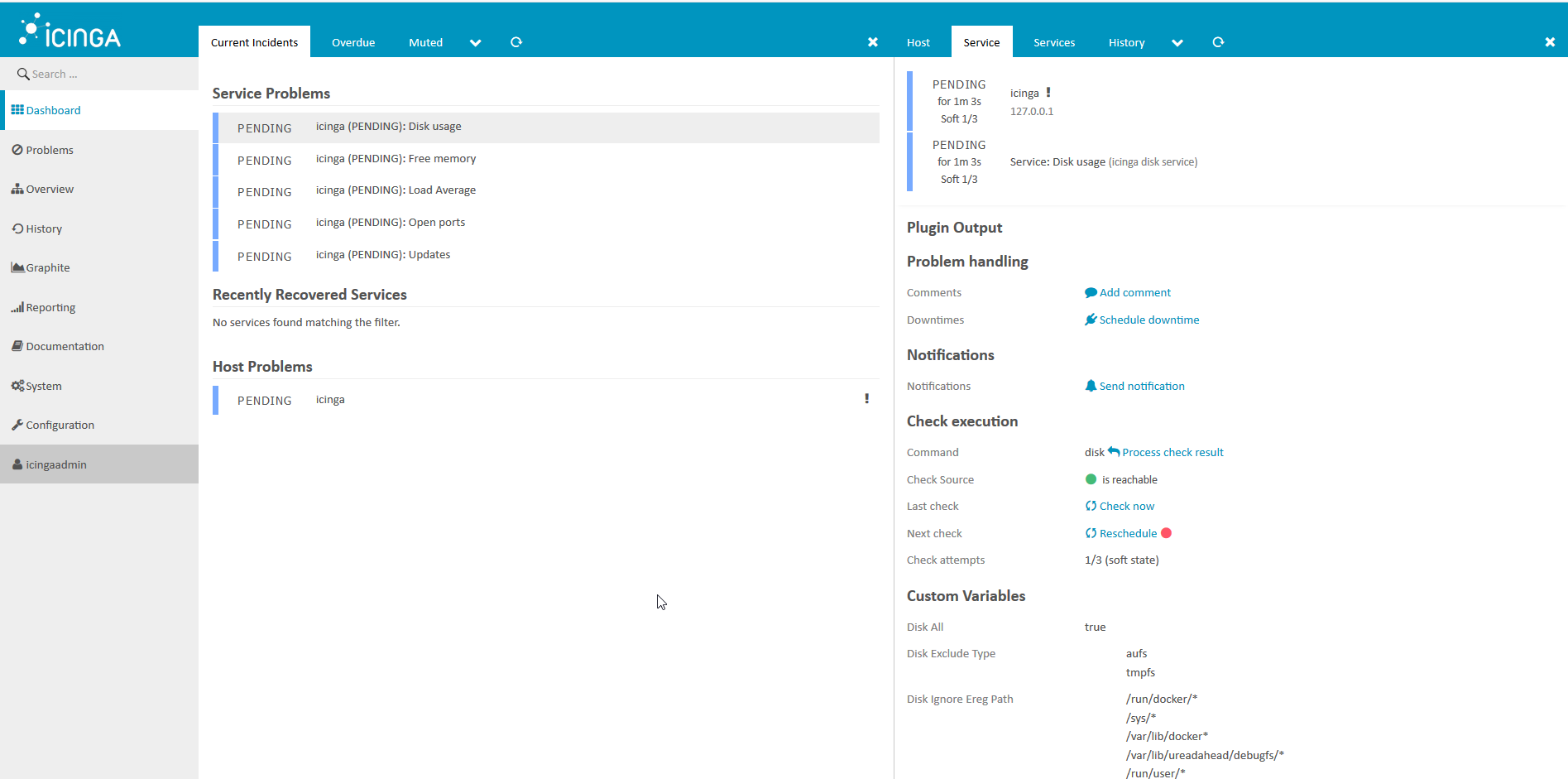

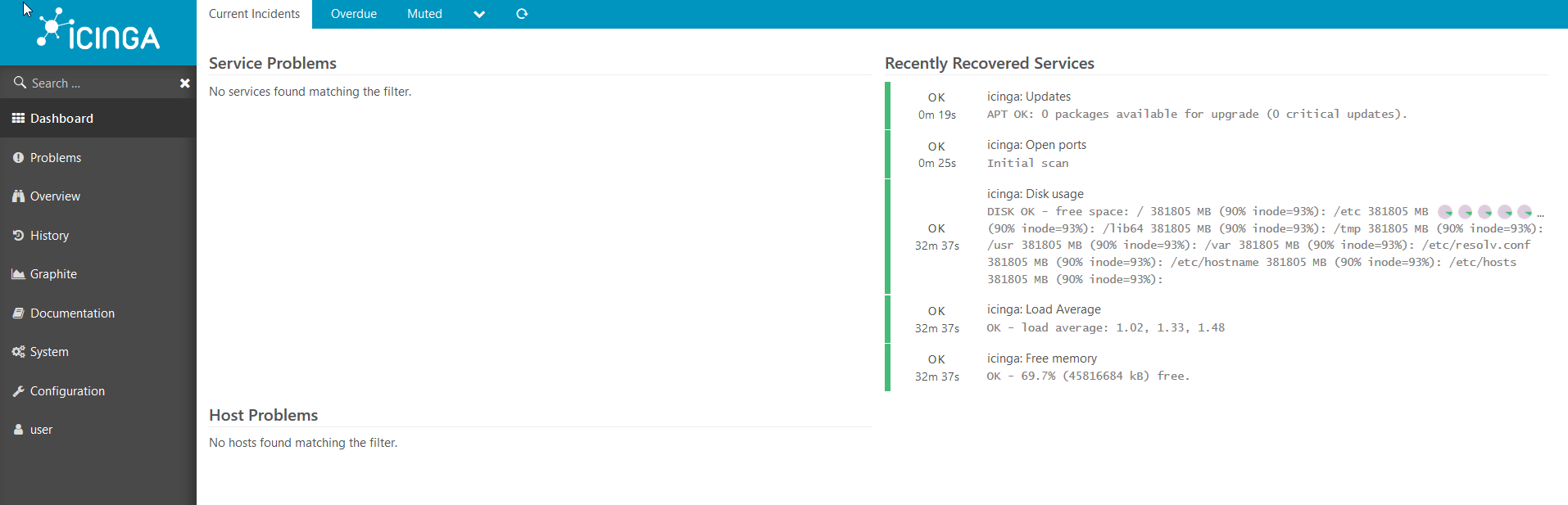

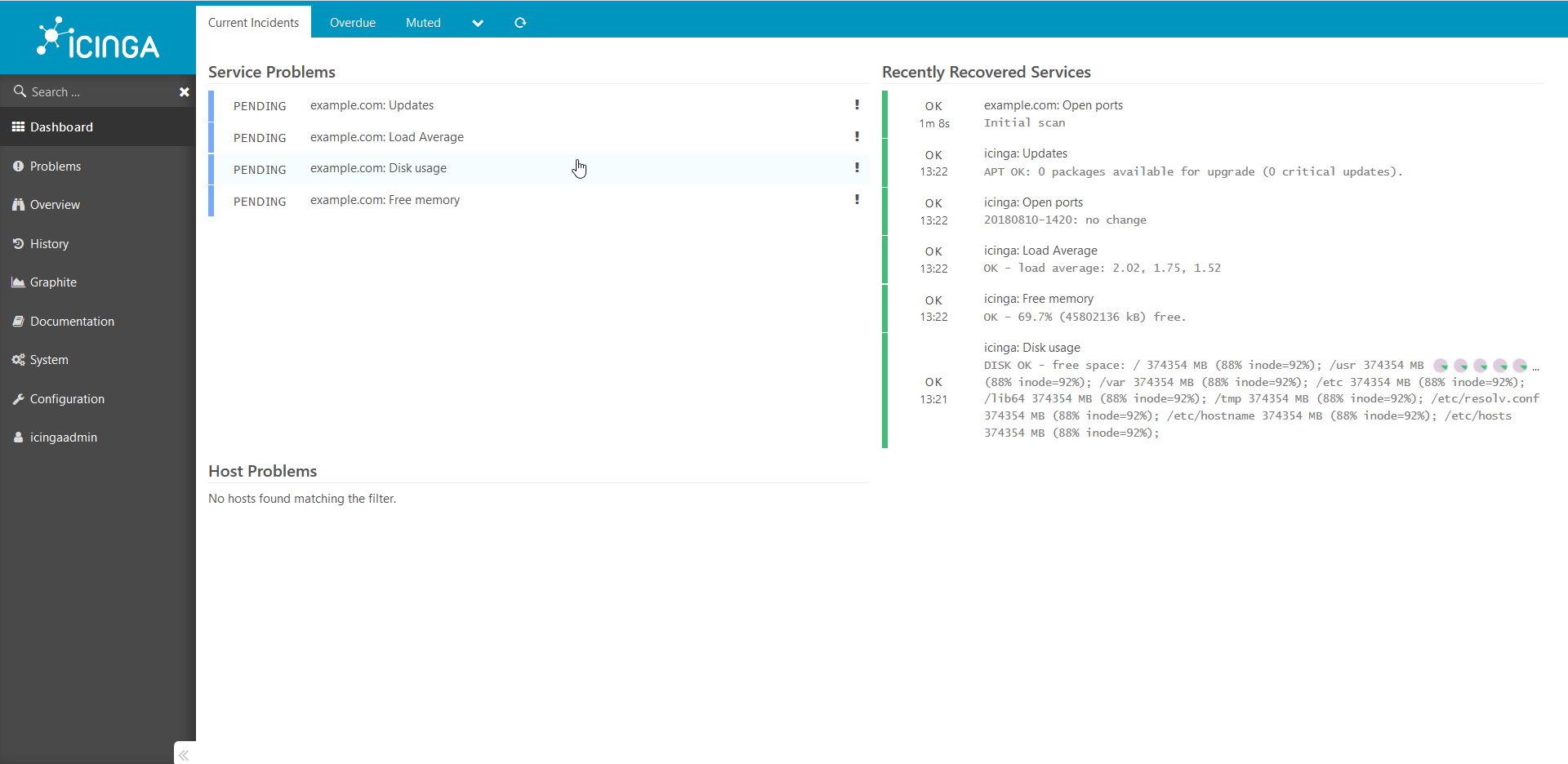

在浏览器中查看监视系统的状态很方便,请执行以下操作:在浏览器中打开http://ip___:8081/icingaweb2并使用默认用户名icingaadmin / icinga转到Icinga。我们看到这张图片: 几分钟后,我们看到所有检查都对我们 有用:

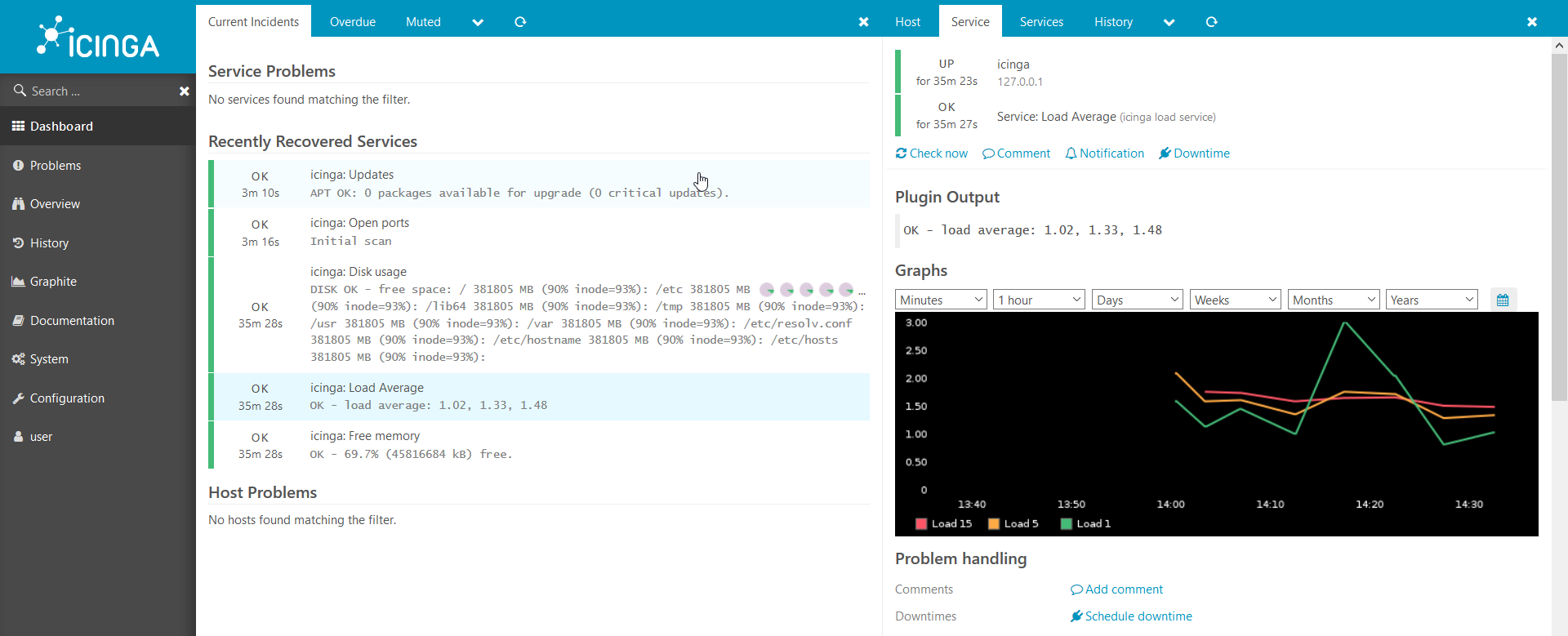

几分钟后,我们看到所有检查都对我们 有用: 图形也起作用:

图形也起作用: 我们有一个适用于Icinga2的Web环境,但是还没有主机连接到Icinga,让我们连接第一个主机。

我们有一个适用于Icinga2的Web环境,但是还没有主机连接到Icinga,让我们连接第一个主机。2.将新主机连接到监视系统

现在,我们只有一台主机连接到icinga2-Icinga本身。让我们连接另一个。我在docker中显示一个示例,但是所有这些都可以在裸机上运行:首先,您需要准备一个模板,并提供一些有关木偶主机的信息:

. hostname , : docker run --hostname example.com --rm -t --link puppet:puppet --net puppeticinga2howto_default -i phusion/baseimage:latest /sbin/my_init -- bash -l

puppet : apt-get update && apt-get install -y ruby make gcc perl-modules && gem install --no-ri --no-rdoc puppet

puppet: puppet agent --server puppet --waitforcert 60 --test

icinga2 puppetDB. , puppet icinga2: docker-compose exec icinga puppet agent --server puppet --waitforcert 60 --test

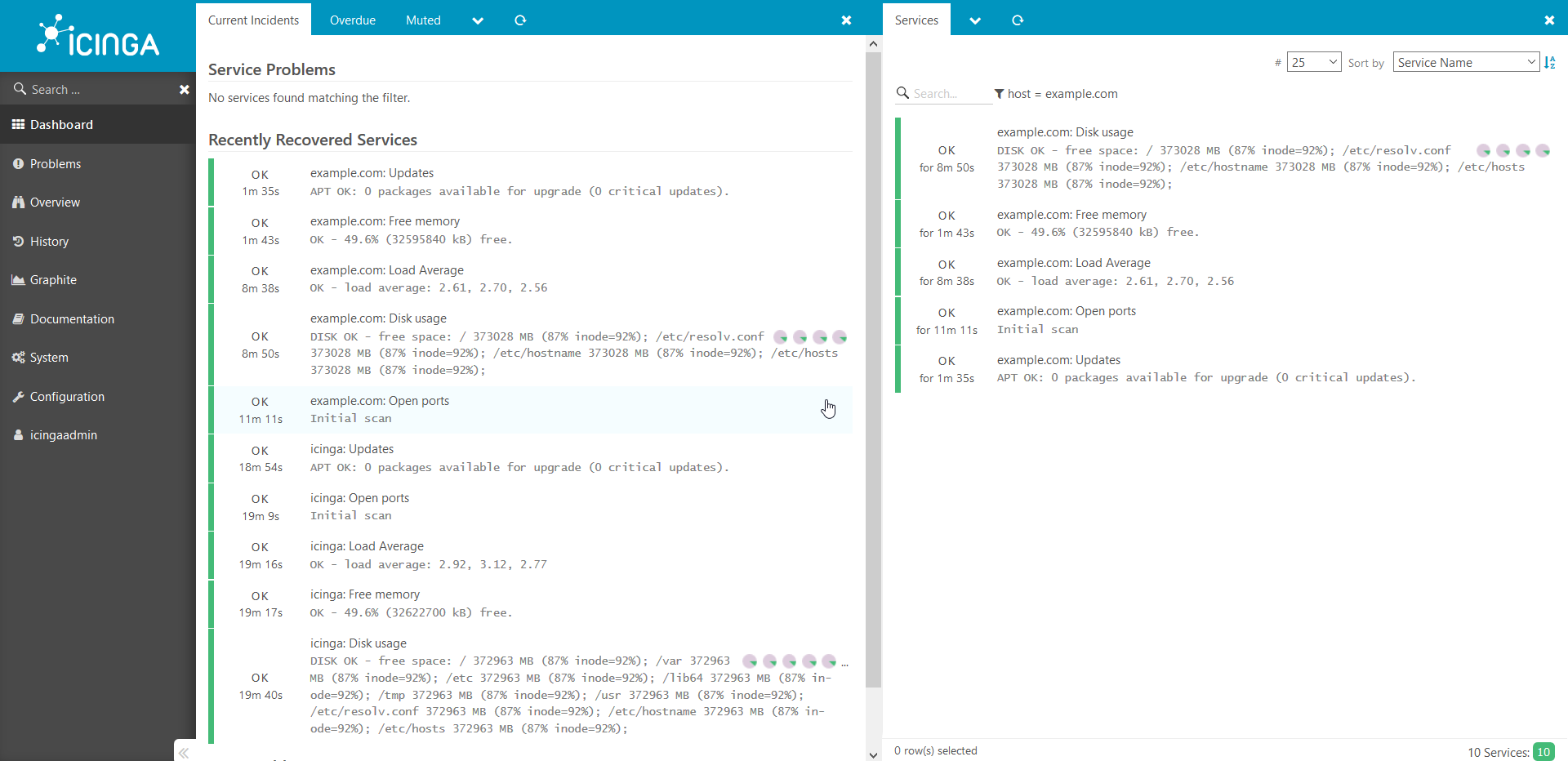

我们通过浏览器查看得到的结果: 已添加检查并等待完成。5分钟后:

已添加检查并等待完成。5分钟后: 以同样的方式,将所有其他主机添加到系统中。我想提请您注意这个指南是一个概念证明,未经修改就不能在生产环境中使用。如果这篇文章看起来很有趣,那么下一次我将展示如何添加各种有趣的自定义检查,共享秘密以及讲述各种微妙之处。另外,如果有需要,我会从内部更详细地介绍这一切的工作方式。感谢您的关注!

以同样的方式,将所有其他主机添加到系统中。我想提请您注意这个指南是一个概念证明,未经修改就不能在生产环境中使用。如果这篇文章看起来很有趣,那么下一次我将展示如何添加各种有趣的自定义检查,共享秘密以及讲述各种微妙之处。另外,如果有需要,我会从内部更详细地介绍这一切的工作方式。感谢您的关注!

Confluence:/ ./docs.comgress64.com/...:https://github.com/Icinga/puppet-icinga2https://forge.puppet.com/icinga/icingaweb2https://docs.docker.com/https://docs.puppet.com/https://docs.puppet.com/hiera/