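Habr already has quite a few articles on using compute shaders in Unity, but it is hard to find one about using compute shaders with "clean" Win32 API + DirectX 11. The task is not much harder, though. Details below.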

For this we will use:

- Windows 10

- Visual Studio 2017 Community with the "Desktop development with C++" workload

After creating the project, tell the linker to use the `d3d11.lib` library.

Header files. To count the frames per second, we use the standard library:

```cpp
#include <time.h>
```

We display the frame rate in the window title, so we need to format the corresponding string:

```cpp
#include <stdio.h>
```

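We won't go into the details of error handling. In our case it is enough for the application to crash in the debug build and point to the place where the failure happened:

```cpp
#include <assert.h>
```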
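With this approach, every `HRESULT` returned by a Direct3D call is presumably just checked with `assert`. Below is a minimal portable sketch of that pattern. It assumes a stand-in `HResult` type so it compiles without the Windows SDK. `HResult`, `Succeeded` and `CheckResult` are hypothetical names. In the real program you would write `assert(SUCCEEDED(result));` using the SDK macro.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for the Windows HRESULT type (a 32-bit signed LONG),
// so the sketch compiles without <Windows.h>.
using HResult = std::int32_t;

// Mirrors the SDK's SUCCEEDED macro: any non-negative value means success
// (S_OK is 0, S_FALSE is 1); failure codes have the high bit set.
constexpr bool Succeeded(HResult hr) { return hr >= 0; }

// Hypothetical helper: in a debug build assert() stops the program at the
// failing call; with NDEBUG defined (release build) the check compiles away.
inline void CheckResult(HResult hr) {
    assert(Succeeded(hr));
    (void)hr; // avoid an unused-parameter warning when NDEBUG is defined
}
```

Typical use after each call: `result = device->CreateBuffer(...); CheckResult(result);`. The trade-off is that release builds silently continue after a failure.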

WinAPI headers:

```cpp
#define WIN32_LEAN_AND_MEAN
#include <tchar.h>
#include <Windows.h>
```

Direct3D 11 headers:

```cpp
#include <dxgi.h>
#include <d3d11.h>
```

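Resource IDs used to load the shaders. Alternatively, you can load the shader object files produced by the HLSL compiler into memory yourself. Creating the resource file is described later.

```cpp
#include "resource.h"
```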
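For the alternative just mentioned, here is a minimal sketch that reads a compiled `.cso` file into memory. `ReadShaderBytecode` is a hypothetical name, and the file path is whatever your build produces (for example `compute.cso` in the output directory).

```cpp
#include <cassert>
#include <fstream>
#include <iterator>
#include <stdexcept>
#include <string>
#include <vector>

// Reads a compiled shader object file produced by the HLSL compiler into
// memory. The returned bytes are what the Create*Shader methods expect as
// the bytecode pointer and length.
std::vector<char> ReadShaderBytecode(const std::string &path) {
    std::ifstream file(path, std::ios::binary);
    if (!file)
        throw std::runtime_error("cannot open " + path);
    // Copy the whole file byte by byte (binary mode, no newline translation)
    return std::vector<char>(std::istreambuf_iterator<char>(file),
                             std::istreambuf_iterator<char>());
}
```

The buffer would then be passed as, for example, `device->CreateComputeShader(bytes.data(), bytes.size(), nullptr, &computeShader);`. Keep the vertex shader bytes around until `CreateInputLayout` has been called, because that call also needs the vertex shader bytecode.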

Constants shared by the shaders and the calling code are declared in a separate header:

```cpp
#include "SharedConst.h"
```

We declare the window message handler, which we will define later:

```cpp
LRESULT CALLBACK WndProc(HWND hWnd, UINT Msg, WPARAM wParam, LPARAM lParam);
```

Now the functions that create and destroy the window:

```cpp
int windowWidth, windowHeight;
HINSTANCE hInstance;
HWND hWnd;

void InitWindows() {
    // ...
```

Next comes the initialization of the GPU interfaces (Device and DeviceContext) and the chain of output buffers (SwapChain):

```cpp
IDXGISwapChain *swapChain;
ID3D11Device *device;
ID3D11DeviceContext *deviceContext;

void InitSwapChain() {
    HRESULT result;
    DXGI_SWAP_CHAIN_DESC swapChainDesc;
    // ...
```

Then we initialize the render target view, through which the shaders access the buffer we render into:

```cpp
ID3D11RenderTargetView *renderTargetView;

void InitRenderTargetView() {
    HRESULT result;
    ID3D11Texture2D *backBuffer;
    // ...
```

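Before the shaders can be initialized, they have to be created. Visual Studio recognizes file extensions, so we can simply create a source file with the .hlsl extension or create a shader directly from the menu. I chose the first option, because either way you have to select Shader Model 5 in the file properties.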

Create the vertex and pixel shaders the same way.

In the vertex shader we simply convert the coordinates from a 2D vector (our points have 2D positions) to the 4D vector the GPU expects:

```hlsl
float4 main(float2 input: POSITION): SV_POSITION
{
    return float4(input, 0, 1);
}
```
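Because the shader sets w = 1, the rasterizer's divide by w leaves x and y unchanged. Particle positions are therefore used directly as normalized device coordinates, and only points inside [-1, 1] on both axes are visible.

The CPU-side sketch below illustrates the mapping. It assumes a viewport covering the whole window with its top-left corner at (0, 0). The structs and function names are hypothetical.

```cpp
#include <cassert>

struct Float2 { float x, y; };
struct Float4 { float x, y, z, w; };

// CPU mirror of the vertex shader: the 2D particle position becomes a
// homogeneous position with z = 0 and w = 1.
Float4 ToClipSpace(Float2 p) { return {p.x, p.y, 0.0f, 1.0f}; }

// What the rasterizer does next: divide by w (a no-op here since w = 1)
// and map normalized device coordinates [-1, 1] onto the viewport.
// In Direct3D the y axis is flipped: y = +1 is the top edge of the window.
Float2 ToPixel(Float4 clip, float width, float height) {
    float ndcX = clip.x / clip.w;
    float ndcY = clip.y / clip.w;
    return {(ndcX + 1.0f) * 0.5f * width, (1.0f - ndcY) * 0.5f * height};
}
```

For an 800×600 window, the particle at (0, 0) lands in the window center (400, 300), and (-1, 1) lands in the top-left corner.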

In the pixel shader we return white:

```hlsl
float4 main(float4 input: SV_POSITION): SV_TARGET
{
    return float4(1, 1, 1, 1);
}
```

Now the compute shader. We define the following formula for the interaction between points:

[Formula 1]

Here is how it is implemented in HLSL:

```hlsl
#include "SharedConst.h"
// ...
```

You may notice that the shader includes SharedConst.h. This is the header with the constants, and main.cpp includes it as well. Here are its contents:

```cpp
#ifndef PARTICLE_COUNT
#define PARTICLE_COUNT (1 << 15)
#endif

#ifndef NUMTHREADS
#define NUMTHREADS 64
#endif
```

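It only declares the number of particles and the number of threads in a group. We assign one thread to each particle, so the number of groups is PARTICLE_COUNT / NUMTHREADS. That number must be an integer, so the particle count has to be divisible by the number of threads per group.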
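The divisibility requirement can be enforced at compile time. The sketch below assumes two things not shown in the truncated code: that the shader declares `[numthreads(NUMTHREADS, 1, 1)]`, and that the host calls `deviceContext->Dispatch(PARTICLE_COUNT / NUMTHREADS, 1, 1)`.

`kGroupCount` and `GroupCountRoundedUp` are hypothetical names. The round-up variant is the usual alternative when the count is not a multiple of the group size. It would additionally require the shader to ignore thread IDs past the last particle.

```cpp
#include <cassert>

// Same defaults as SharedConst.h
#ifndef PARTICLE_COUNT
#define PARTICLE_COUNT (1 << 15)
#endif
#ifndef NUMTHREADS
#define NUMTHREADS 64
#endif

// One thread per particle: the groups must cover exactly PARTICLE_COUNT threads.
static_assert(PARTICLE_COUNT % NUMTHREADS == 0,
              "PARTICLE_COUNT must be a multiple of NUMTHREADS");

// Value for the first argument of Dispatch(x, 1, 1)
constexpr unsigned kGroupCount = PARTICLE_COUNT / NUMTHREADS;

// Hypothetical alternative: round up so that every particle gets a thread;
// the shader would then have to skip IDs >= particleCount.
constexpr unsigned GroupCountRoundedUp(unsigned particleCount, unsigned threadsPerGroup) {
    return (particleCount + threadsPerGroup - 1) / threadsPerGroup;
}
```

With the defaults this gives 32768 / 64 = 512 groups. That is well within Direct3D 11's limit of 65535 groups per dimension, and 64 threads per group is below the cs_5_0 maximum of 1024.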

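We load the compiled shader bytecode through the Windows resource mechanism. To do this, create the following files.

resource.h, which contains the IDs of the corresponding resources:

```cpp
#pragma once

#define IDR_BYTECODE_COMPUTE 101
#define IDR_BYTECODE_VERTEX  102
#define IDR_BYTECODE_PIXEL   103
```

And resource.rc, the file from which the corresponding resources are generated:

```rc
#include "resource.h"

IDR_BYTECODE_COMPUTE ShaderObject "compute.cso"
IDR_BYTECODE_VERTEX  ShaderObject "vertex.cso"
IDR_BYTECODE_PIXEL   ShaderObject "pixel.cso"
```

Here ShaderObject is the resource type, and compute.cso, vertex.cso and pixel.cso are the names of the compiled Shader Object files in the output directory.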

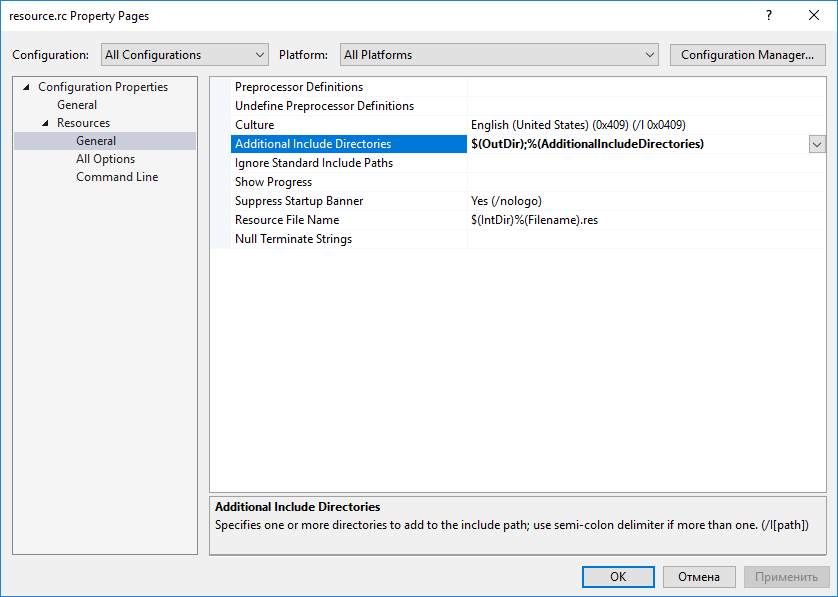

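For these files to be found, specify the path to the project's output directory in the properties of resource.rc.

Visual Studio automatically recognizes the file as a resource description and adds it to the build, so you don't have to do that manually.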

Now we can write the shader initialization code:

```cpp
ID3D11ComputeShader *computeShader;
ID3D11VertexShader *vertexShader;
ID3D11PixelShader *pixelShader;
ID3D11InputLayout *inputLayout;

void InitShaders() {
    HRESULT result;
    HRSRC src;
    HGLOBAL res;
    // ...
```

The buffer initialization code:

```cpp
ID3D11Buffer *positionBuffer;
ID3D11Buffer *velocityBuffer;

void InitBuffers() {
    HRESULT result;
    // Two floats (x, y) per particle
    float *data = new float[2 * PARTICLE_COUNT];
    // ...
```
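The excerpt stops right after allocating two floats per particle. Below is a minimal sketch of how such arrays could be filled. It assumes random starting positions spread over the visible [-1, 1] square and zero starting velocities; the distribution, the seed parameter and the function names are hypothetical, not the author's code.

It uses `std::vector` instead of `new[]`, so no matching `delete[]` is needed.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical initial state: positions spread uniformly over the visible
// square. Layout matches the original allocation: x0, y0, x1, y1, ...
std::vector<float> MakeInitialPositions(std::size_t particleCount, unsigned seed) {
    std::mt19937 rng(seed);
    std::uniform_real_distribution<float> dist(-1.0f, 1.0f);
    std::vector<float> data(2 * particleCount);
    for (float &v : data)
        v = dist(rng);
    return data;
}

// Hypothetical: all particles start at rest, same two-floats-per-particle layout.
std::vector<float> MakeInitialVelocities(std::size_t particleCount) {
    return std::vector<float>(2 * particleCount, 0.0f);
}
```

The array would go into `D3D11_SUBRESOURCE_DATA::pSysMem` when calling `CreateBuffer`. Because the device copies the initial data during that call, the vector can be released afterwards.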

And the code that gives the compute shader access to the buffers (unordered access views):

```cpp
ID3D11UnorderedAccessView *positionUAV;
ID3D11UnorderedAccessView *velocityUAV;

void InitUAV() {
    HRESULT result;
    // ...
```

Next, we tell the driver to use the shaders we created and bind the buffers to them:

```cpp
void InitBindings() {
    // ...
```

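To compute the average frame time, we use the following code:

```cpp
const int FRAME_TIME_COUNT = 128;
clock_t frameTime[FRAME_TIME_COUNT];
int currentFrame = 0;

// Average duration of one frame, in seconds, over the last FRAME_TIME_COUNT frames
float AverageFrameTime() {
    frameTime[currentFrame] = clock();
    int nextFrame = (currentFrame + 1) % FRAME_TIME_COUNT;
    // frameTime[nextFrame] is the oldest stored timestamp, recorded
    // FRAME_TIME_COUNT - 1 frames ago (zero until the buffer fills up)
    clock_t delta = frameTime[currentFrame] - frameTime[nextFrame];
    currentFrame = nextFrame;
    return (float)delta / CLOCKS_PER_SEC / (FRAME_TIME_COUNT - 1);
}
```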
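The `clock()`-based version starts from a zero-filled array, so during the first `FRAME_TIME_COUNT` frames it compares against a timestamp of 0 and reports a meaningless value. The portable sketch below uses the same ring-buffer idea but averages only over the timestamps recorded so far.

It swaps in `std::chrono::steady_clock`, which is not in the original. `FrameTimer` and `NowSeconds` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <chrono>
#include <cstddef>

// Current time in seconds from a monotonic clock.
double NowSeconds() {
    using Clock = std::chrono::steady_clock;
    return std::chrono::duration<double>(Clock::now().time_since_epoch()).count();
}

// Keeps the last N frame timestamps (in seconds) in a ring buffer.
template <std::size_t N>
class FrameTimer {
    static_assert(N >= 2, "need at least two timestamps to measure an interval");

public:
    // Records a timestamp and returns the average frame time over the
    // timestamps stored so far, or 0 while fewer than two are known.
    double Push(double now) {
        times_[next_] = now;
        next_ = (next_ + 1) % N;
        if (count_ < N)
            ++count_;
        if (count_ < 2)
            return 0.0;
        // Before the buffer wraps, the oldest sample is at index 0;
        // afterwards it is the slot that will be overwritten next.
        std::size_t oldest = (count_ < N) ? 0 : next_;
        return (now - times_[oldest]) / static_cast<double>(count_ - 1);
    }

private:
    std::array<double, N> times_{};
    std::size_t next_ = 0;
    std::size_t count_ = 0;
};
```

Per frame you would call `double avg = timer.Push(NowSeconds());`, and the frame rate is `1 / avg` whenever `avg > 0`. N timestamps span N - 1 frame intervals, which is why the sum is divided by `count_ - 1`.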

And we call this function every frame:

```cpp
void Frame() {
    float frameTime = AverageFrameTime();
    // ...
```
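`Frame()` is cut off after computing the average frame time. Since the frame rate is shown in the window title (which is why stdio.h was included), here is a sketch of building that string. The `"FPS: %.1f"` format and the `FormatFpsTitle` name are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <cstring>

// Hypothetical title format: converts the average frame time (seconds)
// into frames per second and writes it into a caller-provided buffer.
void FormatFpsTitle(char *buffer, std::size_t size, float frameTime) {
    // Guard against division by zero before the first measurement
    float fps = frameTime > 0.0f ? 1.0f / frameTime : 0.0f;
    // snprintf never writes past `size` bytes and always null-terminates
    std::snprintf(buffer, size, "FPS: %.1f", fps);
}
```

The result could then be passed to `SetWindowTextA(hWnd, title)`. In a `UNICODE` build, use `swprintf` and `SetWindowTextW` instead.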

If the window size changes, we also need to resize the render buffers:

```cpp
void ResizeSwapChain() {
    HRESULT result;
    RECT rect;
    // ...
```

Finally, we can define the message handler:

```cpp
LRESULT CALLBACK WndProc(HWND hWnd, UINT Msg, WPARAM wParam, LPARAM lParam) {
    switch (Msg) {
    case WM_CLOSE:
        PostQuitMessage(0);
        break;
    case WM_KEYDOWN:
        // Esc also closes the application
        if (wParam == VK_ESCAPE)
            PostQuitMessage(0);
        break;
    case WM_SIZE:
        ResizeSwapChain();
        break;
    default:
        return DefWindowProc(hWnd, Msg, wParam, lParam);
    }
    return 0;
}
```

And the main function:

```cpp
int main() {
    InitWindows();
    InitSwapChain();
    InitRenderTargetView();
    InitShaders();
    InitBuffers();
    InitUAV();
    InitBindings();
    ShowWindow(hWnd, SW_SHOW);

    bool shouldExit = false;
    while (!shouldExit) {
        Frame();

        // Process all pending window messages without blocking rendering
        MSG msg;
        while (!shouldExit && PeekMessage(&msg, NULL, 0, 0, PM_REMOVE)) {
            TranslateMessage(&msg);
            DispatchMessage(&msg);
            if (msg.message == WM_QUIT)
                shouldExit = true;
        }
    }

    // Release resources in reverse order of creation
    DisposeUAV();
    DisposeBuffers();
    DisposeShaders();
    DisposeRenderTargetView();
    DisposeSwapChain();
    DisposeWindows();
}
```

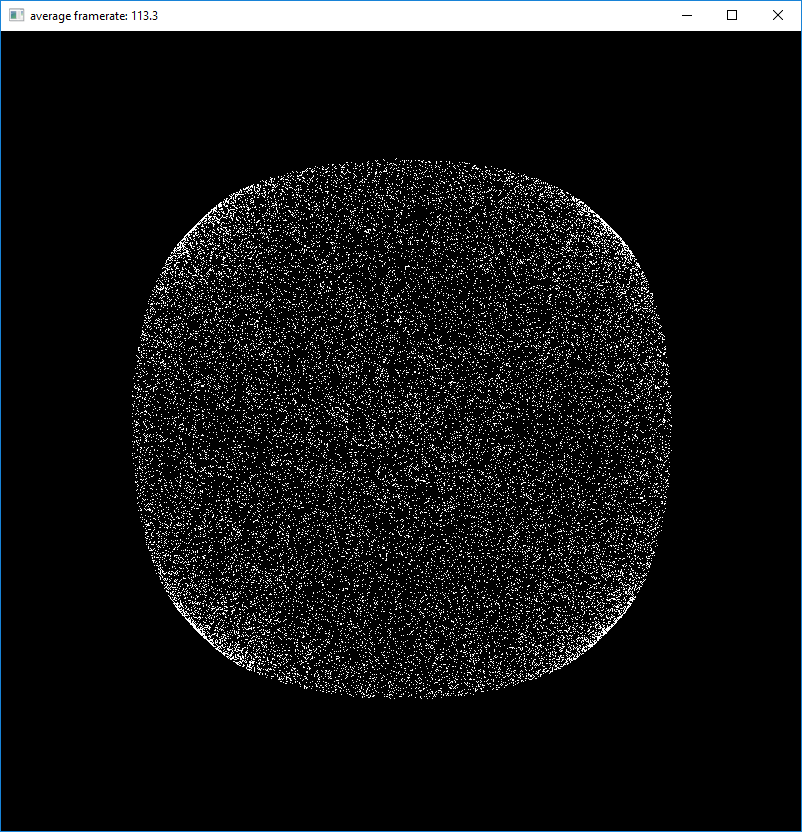

A screenshot of the running program can be seen at the top of the article.

→ The complete project is available on GitHub