开班

- 扫开Openshift。

- 安装后配置。

- 创建并连接PV。

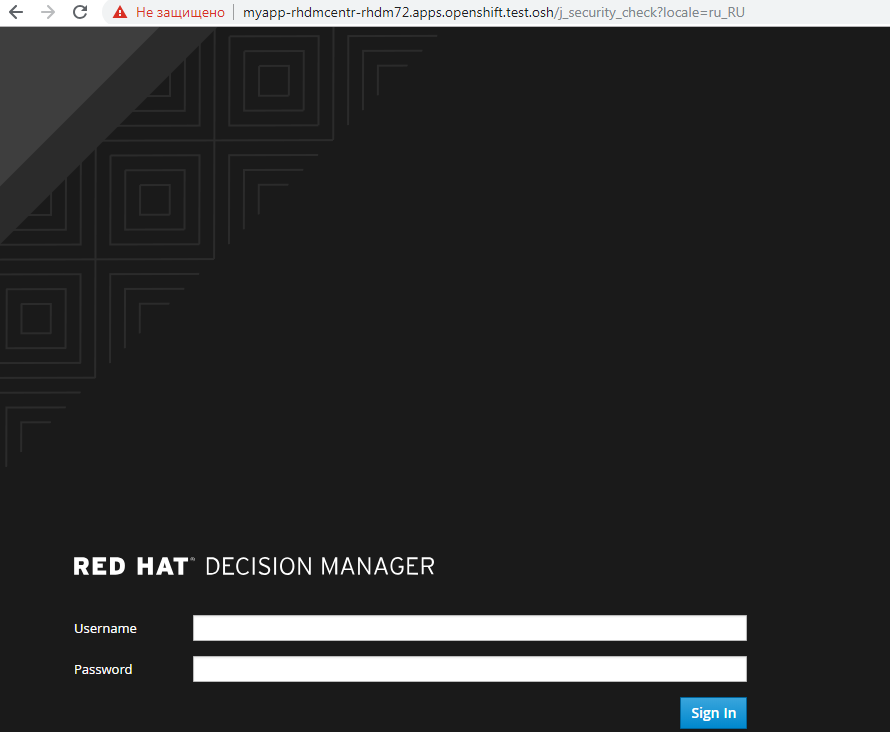

- 创建和部署Red Hat Decision Manager项目(与kie-workbench相似的企业)。

- 使用持久性存储库创建和部署AMQ(red hat active mq)和postgressql项目。

- 为服务创建单独的项目,为它们的模板,管道,与gitlab集成,gitlab收录。

1. Openshift扫频

服务器要求,准备dns服务器,服务器名称列表,服务器要求。

最低要求是简短的-所有服务器必须至少具有16Gb Ram 2内核和至少100 GB,才能满足docker的需求。

基于绑定的dns应该具有以下配置。

dkm-主节点,dk0-执行中,ifr-基础设施,bln-平衡器,shd-nfs,dkr-用于配置群集的控制节点,也计划作为docker regestion下的单独节点。

db.osh $TTL 1h @ IN SOA test.osh. root.test.osh. ( 2008122601 ; Serial 28800 ; Refresh 14400 ; Retry 604800 ; Expire - 1 week 86400 ) ; Minimum @ IN NS test.osh. @ IN A 127.0.0.1 rnd-osh-dk0-t01 IN A 10.19.86.18 rnd-osh-dk0-t02 IN A 10.19.86.19 rnd-osh-dk0-t03 IN A 10.19.86.20 rnd-osh-dkm-t01 IN A 10.19.86.21 rnd-osh-dkm-t02 IN A 10.19.86.22 rnd-osh-dkm-t03 IN A 10.19.86.23 rnd-osh-ifr-t01 IN A 10.19.86.24 rnd-osh-ifr-t02 IN A 10.19.86.25 rnd-osh-ifr-t03 IN A 10.19.86.26 rnd-osh-bln-t01 IN A 10.19.86.27 rnd-osh-shd-t01 IN A 10.19.86.28 rnd-osh-dkr-t01 IN A 10.19.86.29 lb IN A 10.19.86.27 openshift IN A 10.19.86.27 api-openshift IN A 10.19.86.27 *.apps.openshift IN A 10.19.86.21 *.apps.openshift IN A 10.19.86.22 *.apps.openshift IN A 10.19.86.23

db.rv.osh $TTL 1h @ IN SOA test.osh. root.test.osh. ( 1 ; Serial 604800 ; Refresh 86400 ; Retry 2419200 ; Expire 604800 ) ; Negative Cache TTL ; @ IN NS test.osh. @ IN A 127.0.0.1 18 IN PTR rnd-osh-dk0-t01.test.osh. 19 IN PTR rnd-osh-dk0-t02.test.osh. 20 IN PTR rnd-osh-dk0-t03.test.osh. 21 IN PTR rnd-osh-dkm-t01.test.osh. 22 IN PTR rnd-osh-dkm-t02.test.osh. 23 IN PTR rnd-osh-dkm-t03.test.osh. 24 IN PTR rnd-osh-ifr-t01.test.osh. 25 IN PTR rnd-osh-ifr-t02.test.osh. 26 IN PTR rnd-osh-ifr-t03.test.osh. 27 IN PTR rnd-osh-bln-t01.test.osh. 28 IN PTR rnd-osh-shd-t01.test.osh. 29 IN PTR rnd-osh-dkr-t01.test.osh. 27 IN PTR lb.test.osh. 27 IN PTR api-openshift.test.osh. named.conf.default-zones

zone "test.osh" IN { type master; file "/etc/bind/db.osh"; allow-update { none; }; notify no; }; zone "86.19.10.in-addr.arpa" { type master; file "/etc/bind/db.rv.osh"; };

服务器准备连接订阅后。 启用存储库并安装最初需要的软件包。

rm -rf /etc/yum.repos.d/cdrom.repo subscription-manager repos --disable="*" subscription-manager repos --enable="rhel-7-server-rpms" --enable="rhel-7-server-extras-rpms" --enable="rhel-7-server-ose-3.10-rpms" --enable="rhel-7-server-ansible-2.4-rpms" yum -y install wget git net-tools bind-utils yum-utils iptables-services bridge-utils bash-completion kexec-tools sos psacct yum -y update yum -y install docker

Docker存储配置(单独的驱动器)。

systemctl stop docker rm -rf /var/lib/docker/* echo "STORAGE_DRIVER=overlay2" > /etc/sysconfig/docker-storage-setup echo "DEVS=/dev/sdc" >> /etc/sysconfig/docker-storage-setup echo "CONTAINER_ROOT_LV_NAME=dockerlv" >> /etc/sysconfig/docker-storage-setup echo "CONTAINER_ROOT_LV_SIZE=100%FREE" >> /etc/sysconfig/docker-storage-setup echo "CONTAINER_ROOT_LV_MOUNT_PATH=/var/lib/docker" >> /etc/sysconfig/docker-storage-setup echo "VG=docker-vg" >> /etc/sysconfig/docker-storage-setup systemctl enable docker docker-storage-setup systemctl is-active docker systemctl restart docker docker info | grep Filesystem

安装剩余的必要软件包。

yum -y install atomic atomic trust show yum -y install docker-novolume-plugin systemctl enable docker-novolume-plugin systemctl start docker-novolume-plugin yum -y install openshift-ansible

创建,添加用户以及键。

useradd --create-home --groups users,wheel ocp sed -i 's/# %wheel/%wheel/' /etc/sudoers mkdir -p /home/ocp/.ssh echo "ssh-rsa AAAAB3NzaC........8Ogb3Bv ocp SSH Key" >> /home/ocp/.ssh/authorized_keys

如果与已使用的子网冲突-更改容器内的默认地址。

echo '{ "bip": "172.26.0.1/16" }' > /etc/docker/daemon.json systemctl restart docker

网络管理器配置。 (DNS应该能够超越外界)

nmcli connection modify ens192 ipv4.dns 172.17.70.140 nmcli connection modify ens192 ipv4.dns-search cluster.local +ipv4.dns-search test.osh +ipv4.dns-search cpgu systemctl stop firewalld systemctl disable firewalld systemctl restart network

如有必要,以全名编辑计算机名称。

hhh=$(cat /etc/hostname) echo "$hhh".test.osh > /etc/hostname

完成步骤后,重新启动服务器。

准备dkr控制节点

控制节点与其余节点之间的区别在于,无需将docker连接到单独的磁盘。

还需要配置ntp。

yum install ntp -y systemctl enable ntpd service ntpd start service ntpd status ntpq -p chmod 777 -R /usr/share/ansible/openshift-ansible/

您还需要将私钥添加到ocp。

在所有节点上以ocp身份访问ssh。

准备库存文件并扩展集群。 host-poc.yaml [OSEv3:children] masters nodes etcd lb nfs [OSEv3:vars] ansible_ssh_user=ocp ansible_become=yes openshift_override_hostname_check=True openshift_master_cluster_method=native openshift_disable_check=memory_availability,disk_availability,package_availability openshift_deployment_type=openshift-enterprise openshift_release=v3.10 oreg_url=registry.access.redhat.com/openshift3/ose-${component}:${version} debug_level=2 os_firewall_use_firewalld=True openshift_install_examples=true openshift_clock_enabled=True openshift_router_selector='node-role.kubernetes.io/infra=true' openshift_registry_selector='node-role.kubernetes.io/infra=true' openshift_examples_modify_imagestreams=true os_sdn_network_plugin_name='redhat/openshift-ovs-multitenant' openshift_master_identity_providers=[{'name': 'htpasswd_auth', 'login': 'true', 'challenge': 'true', 'kind': 'HTPasswdPasswordIdentityProvider'}] openshift_master_htpasswd_users={'admin': '$apr1$pQ3QPByH$5BDkrp0m5iclRske.M0m.0'} openshift_master_default_subdomain=apps.openshift.test.osh openshift_master_cluster_hostname=api-openshift.test.osh openshift_master_cluster_public_hostname=openshift.test.osh openshift_enable_unsupported_configurations=true openshift_use_crio=true openshift_crio_enable_docker_gc=true # registry openshift_hosted_registry_storage_kind=nfs openshift_hosted_registry_storage_access_modes=['ReadWriteMany'] openshift_hosted_registry_storage_nfs_directory=/exports openshift_hosted_registry_storage_nfs_options='*(rw,root_squash)' openshift_hosted_registry_storage_volume_name=registry openshift_hosted_registry_storage_volume_size=30Gi # cluster monitoring openshift_cluster_monitoring_operator_install=true openshift_cluster_monitoring_operator_node_selector={'node-role.kubernetes.io/master': 'true'} #metrics openshift_metrics_install_metrics=true openshift_metrics_hawkular_nodeselector={"node-role.kubernetes.io/infra": "true"} openshift_metrics_cassandra_nodeselector={"node-role.kubernetes.io/infra": "true"} openshift_metrics_heapster_nodeselector={"node-role.kubernetes.io/infra": "true"} openshift_metrics_storage_kind=nfs openshift_metrics_storage_access_modes=['ReadWriteOnce'] openshift_metrics_storage_nfs_directory=/exports openshift_metrics_storage_nfs_options='*(rw,root_squash)' openshift_metrics_storage_volume_name=metrics openshift_metrics_storage_volume_size=20Gi #logging openshift_logging_kibana_nodeselector={"node-role.kubernetes.io/infra": "true"} openshift_logging_curator_nodeselector={"node-role.kubernetes.io/infra": "true"} openshift_logging_es_nodeselector={"node-role.kubernetes.io/infra": "true"} openshift_logging_install_logging=true openshift_logging_es_cluster_size=1 openshift_logging_storage_kind=nfs openshift_logging_storage_access_modes=['ReadWriteOnce'] openshift_logging_storage_nfs_directory=/exports openshift_logging_storage_nfs_options='*(rw,root_squash)' openshift_logging_storage_volume_name=logging openshift_logging_storage_volume_size=20Gi #ASB ansible_service_broker_install=true openshift_hosted_etcd_storage_kind=nfs openshift_hosted_etcd_storage_nfs_options="*(rw,root_squash,sync,no_wdelay)" openshift_hosted_etcd_storage_nfs_directory=/opt/osev3-etcd openshift_hosted_etcd_storage_volume_name=etcd-vol2 openshift_hosted_etcd_storage_access_modes=["ReadWriteOnce"] openshift_hosted_etcd_storage_volume_size=30G openshift_hosted_etcd_storage_labels={'storage': 'etcd'} ansible_service_broker_local_registry_whitelist=['.*-apb$'] #cloudforms #openshift_management_install_management=true #openshift_management_app_template=cfme-template # host group for masters [masters] rnd-osh-dkm-t0[1:3].test.osh # host group for etcd [etcd] rnd-osh-dkm-t0[1:3].test.osh [lb] rnd-osh-bln-t01.test.osh containerized=False [nfs] rnd-osh-shd-t01.test.osh [nodes] rnd-osh-dkm-t0[1:3].test.osh openshift_node_group_name='node-config-master' rnd-osh-ifr-t0[1:3].test.osh openshift_node_group_name='node-config-infra' rnd-osh-dk0-t0[1:3].test.osh openshift_node_group_name='node-config-compute'

交替运行剧本。

ansible-playbook -i host-poc.yaml /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml ansible-playbook -i host-poc.yaml /usr/share/ansible/openshift-ansible/playbooks/openshift-checks/pre-install.yml ansible-playbook -i host-poc.yaml /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

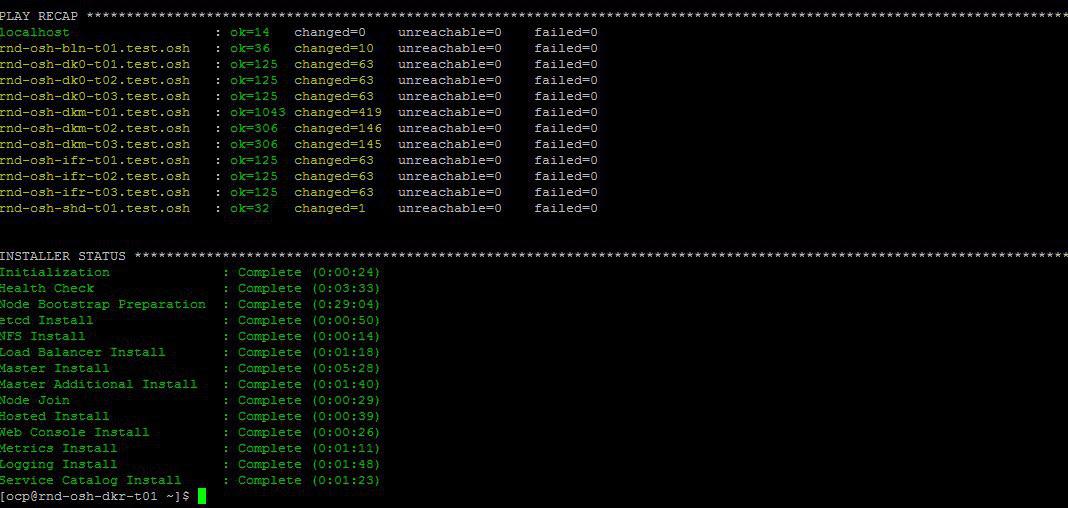

如果一切顺利,大约将是以下情况。

通过Web界面安装oprenshift操作后,编辑本地主机文件以进行验证。

10.19.86.18 rnd-osh-dk0-t01.test.osh 10.19.86.19 rnd-osh-dk0-t02.test.osh 10.19.86.20 rnd-osh-dk0-t03.test.osh 10.19.86.21 rnd-osh-dkm-t01.test.osh 10.19.86.22 rnd-osh-dkm-t02.test.osh 10.19.86.23 rnd-osh-dkm-t03.test.osh 10.19.86.24 rnd-osh-ifr-t01.test.osh 10.19.86.25 rnd-osh-ifr-t02.test.osh 10.19.86.26 rnd-osh-ifr-t03.test.osh 10.19.86.27 rnd-osh-bln-t01.test.osh openshift.test.osh 10.19.86.28 rnd-osh-shd-t01.test.osh 10.19.86.29 rnd-osh-dkr-t01.test.osh

验证网址

openshift.test.osh :8443

2.安装后的配置

输入dkm。

oc login -u system:admin oc adm policy add-cluster-role-to-user cluster-admin admin --rolebinding-name=cluster-admin

检查是否可以在Web界面中看到以前隐藏的项目(例如,openshift)。

3.创建并连接PV

在nfs服务器上创建持久卷。

mkdir -p /exports/examplpv chmod -R 777 /exports/examplpv chown nfsnobody:nfsnobody -R /exports/examplpv echo '"/exports/examplpv" *(rw,root_squash)' >> /etc/exports.d/openshift-ansible.exports exportfs -ar restorecon -RvF

将PV添加到openshift。

您必须创建一个oc new-projectexamplpv-project项目。

如果已经创建了项目,请转到项目examplpv-project。 使用以下内容创建yaml。

apiVersion: v1 kind: PersistentVolume metadata: name: examplpv-ts1 spec: capacity: storage: 20Gi accessModes: - ReadWriteOnce nfs: path: /exports/examplpv server: rnd-osh-shd-t01 persistentVolumeReclaimPolicy: Recycle

并申请。 oc apply -f filename.yaml

做完之后

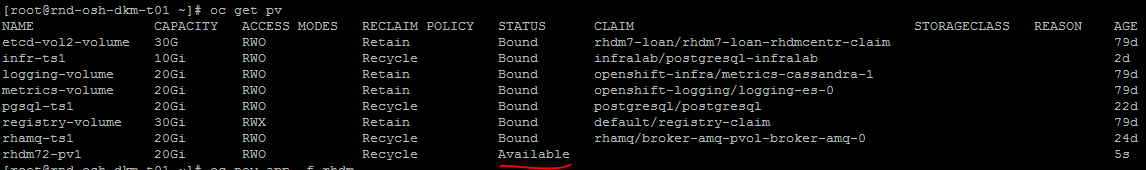

oc get pv

创建的PV将在列表中可见。

4.创建和部署Red Hat Decision Manager项目(与kie-workbench相似的企业)

检查模板。

oc get imagestreamtag -n openshift | grep rhdm

添加模板-可以找到链接和更完整的描述

unzip rhdm-7.2.1-openshift-templates.zip -d ./rhdm-7.2.1-openshift-templates

创建一个新项目:

oc new-project rhdm72

向docker Registry.redhat.io服务器添加授权:

docker login registry.redhat.io cat ~/.docker/config.json oc create secret generic pull-secret-name --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson oc secrets link default pull-secret-name --for=pull oc secrets link builder pull-secret-name

导入imagetream,密钥创建Decision Server,Decision Central。

keytool -genkeypair -alias jboss -keyalg RSA -keystore keystore.jks -storepass mykeystorepass --dname "CN=STP,OU=Engineering,O=POC.mos,L=Raleigh,S=NC,C=RU" oc create -f rhdm72-image-streams.yaml oc create secret generic kieserver-app-secret --from-file=keystore.jks oc create secret generic decisioncentral-app-secret --from-file=keystore.jks

在nfs服务器上创建持久卷。

mkdir -p /exports/rhdm72 chmod -R 777 /exports/rhdm72 chown nfsnobody:nfsnobody -R /exports/rhdm72 echo '"/exports/rhamq72" *(rw,root_squash)' >> /etc/exports.d/openshift-ansible.exports exportfs -ar restorecon -RvF

将pv添加到项目中:

apiVersion: v1 kind: PersistentVolume metadata: name: rhdm72-pv1 spec: capacity: storage: 20Gi accessModes: - ReadWriteMany nfs: path: /exports/rhdm72 server: rnd-osh-shd-t01 persistentVolumeReclaimPolicy: Recycle

Rhdm70所需的PV参数

accessModes:

-ReadWriteOnce

但是7.2已经需要

accessModes:

-ReadWriteMany

套用-oc套用-f filename.yaml

+检查创建的PV是否可用。

根据官方文档从模板创建应用程序。

oc new-app -f rhdm-7.2.1-openshift-templates/templates/rhdm72-authoring.yaml -p DECISION_CENTRAL_HTTPS_SECRET=decisioncentral-app-secret -p KIE_SERVER_HTTPS_SECRET=kieserver-app-secret

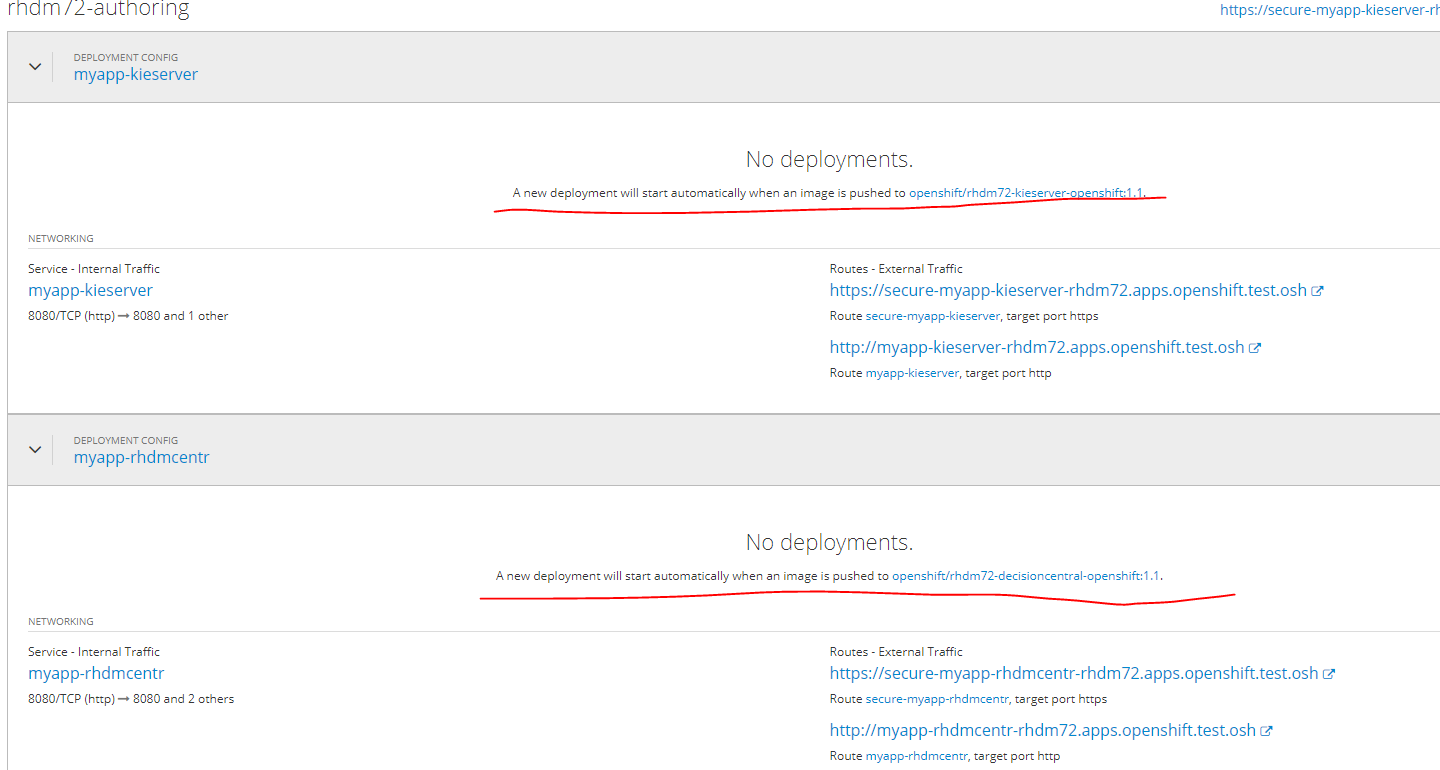

该应用程序将在docker-registry中的Pull映像完成后自动部署。

在这一刻之前,状态将是这样。

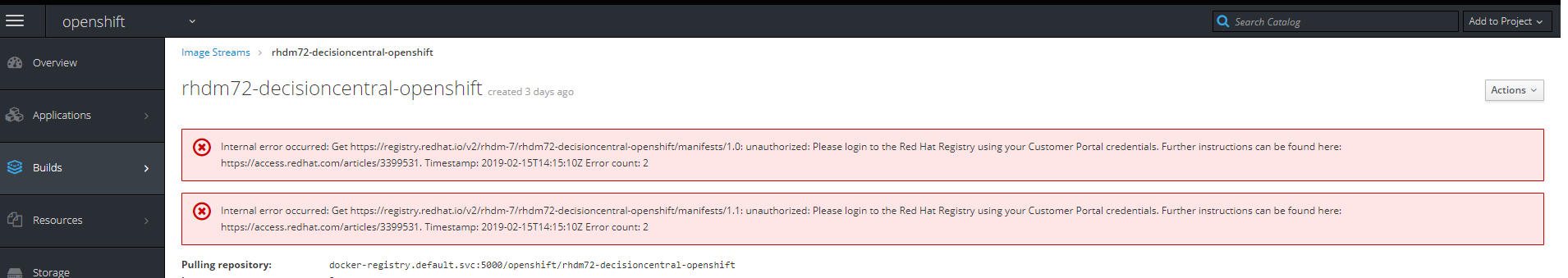

如果单击图像的链接,将出现以下错误

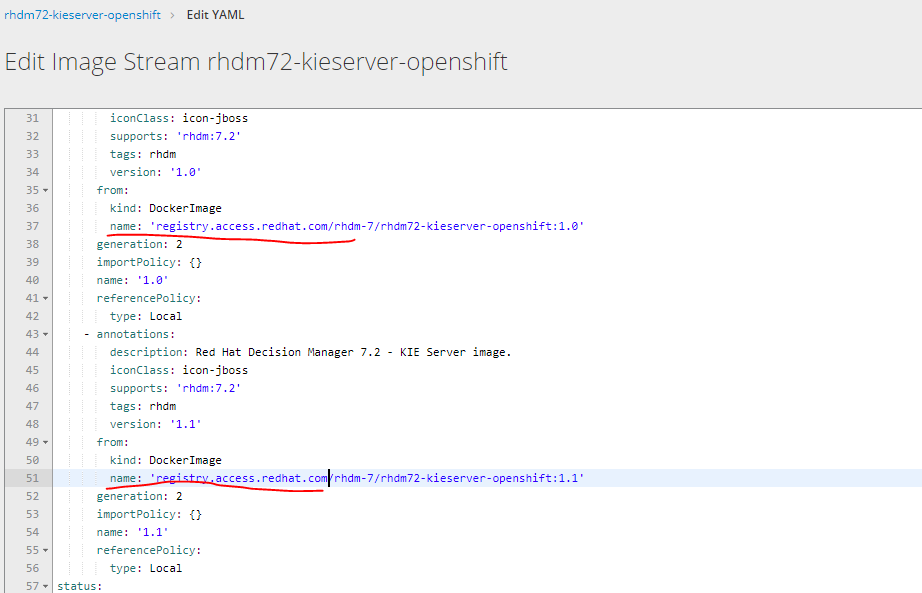

您必须通过选择将yaml从Registry.redhat.io更改为Registry.access.redhat.com来更改图片上传网址

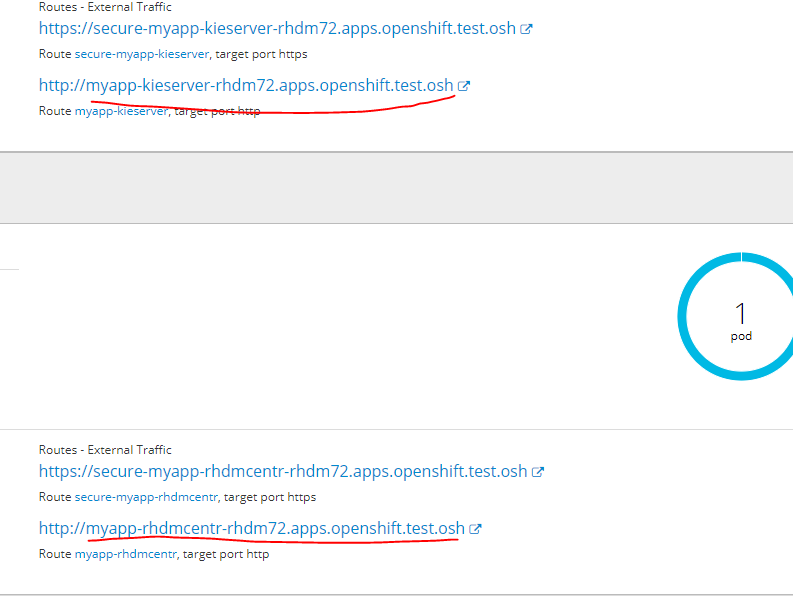

要在其Web界面中转到已部署的服务,请将以下url添加到hosts文件

在任何基础节点上

10.19.86.25 rnd-osh-ifr-t02.test.osh myapp-rhdmcentr-rhdm72.apps.openshift.test.osh

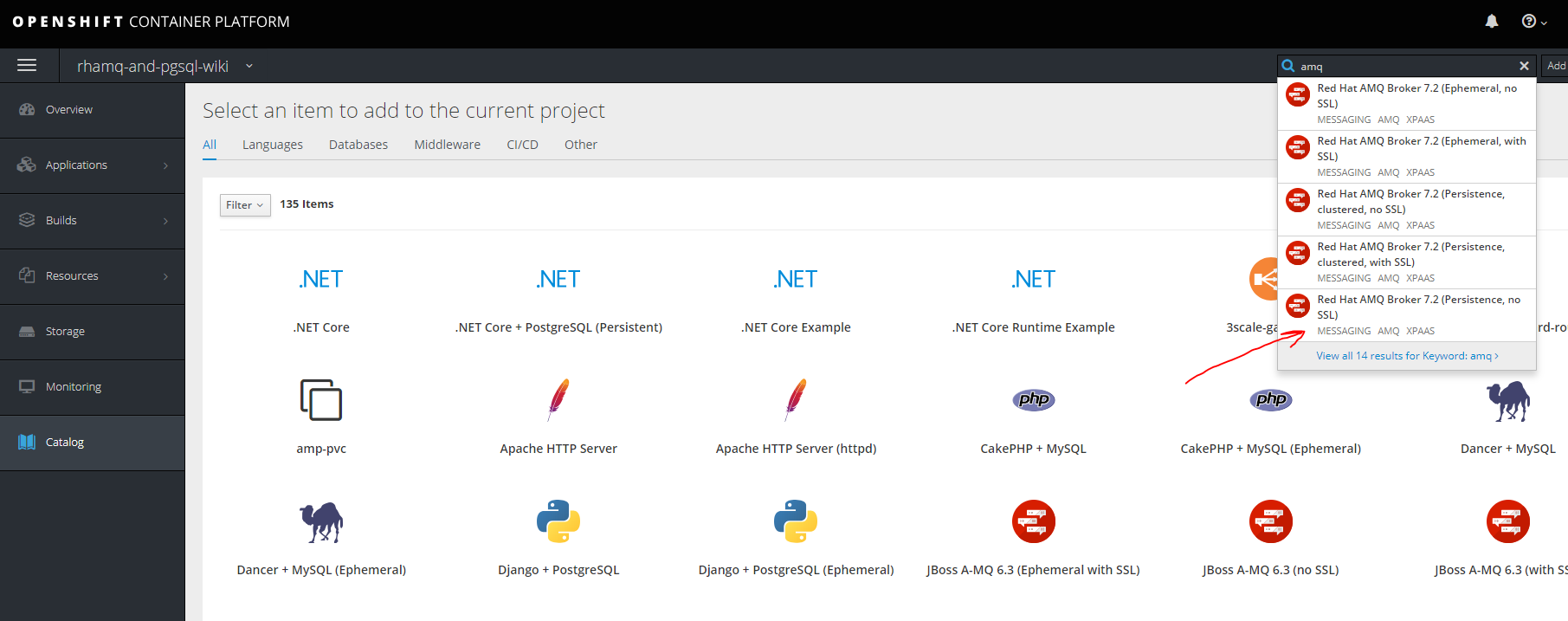

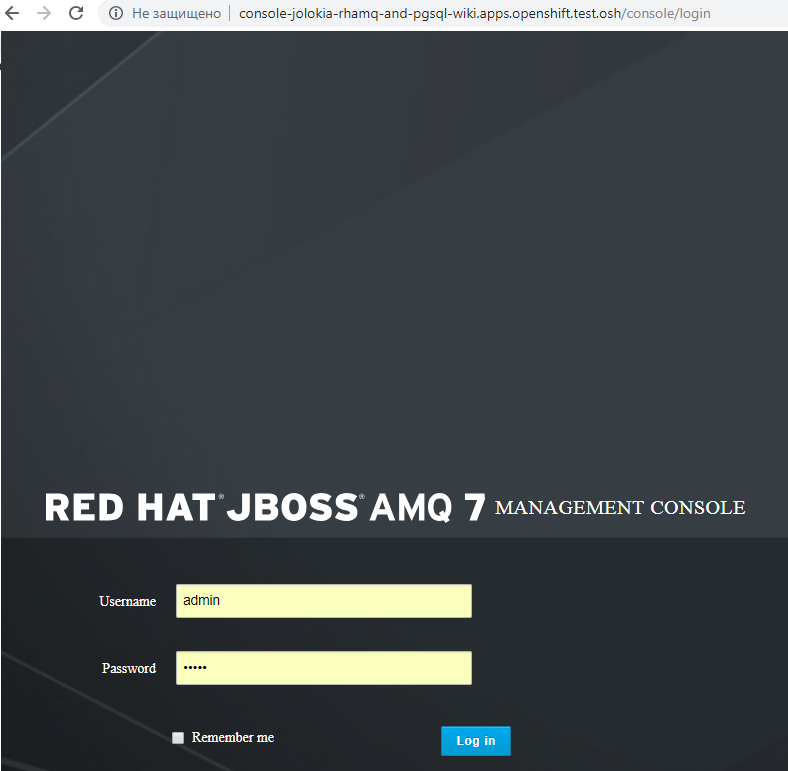

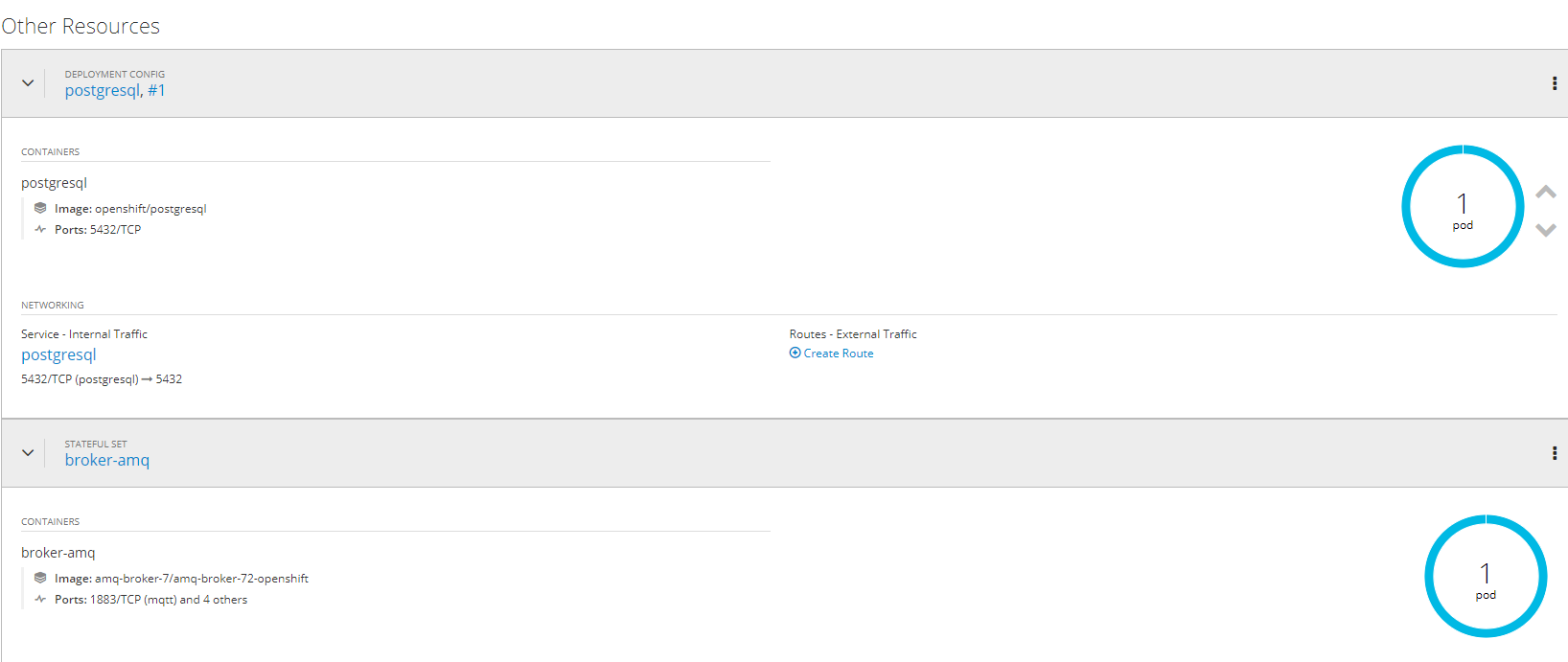

5.使用持久性存储库创建和部署AMQ(red hat active mq)和postgressql项目

拉姆克创建一个新项目

oc new-project rhamq-and-pgsql

如果没有图像,我们会导入图像。

oc replace --force -f https://raw.githubusercontent.com/jboss-container-images/jboss-amq-7-broker-openshift-image/72-1.1.GA/amq-broker-7-image-streams.yaml oc replace --force -f https://raw.githubusercontent.com/jboss-container-images/jboss-amq-7-broker-openshift-image/72-1.1.GA/amq-broker-7-scaledown-controller-image-streams.yaml oc import-image amq-broker-72-openshift:1.1 oc import-image amq-broker-72-scaledown-controller-openshift:1.0

模板安装

for template in amq-broker-72-basic.yaml \ amq-broker-72-ssl.yaml \ amq-broker-72-custom.yaml \ amq-broker-72-persistence.yaml \ amq-broker-72-persistence-ssl.yaml \ amq-broker-72-persistence-clustered.yaml \ amq-broker-72-persistence-clustered-ssl.yaml; do oc replace --force -f \ https://raw.githubusercontent.com/jboss-container-images/jboss-amq-7-broker-openshift-image/72-1.1.GA/templates/${template} done

向服务帐户添加角色。

oc policy add-role-to-user view -z default

在NFS服务器上创建PV

mkdir -p /exports/pgmq chmod -R 777 /exports/pgmq chown nfsnobody:nfsnobody -R /exports/pgmq echo '"/exports/pgmq" *(rw,root_squash)' >> /etc/exports.d/openshift-ansible.exports exportfs -ar restorecon -RvF

创建YAML

pgmq_storage.yaml

apiVersion: v1 kind: PersistentVolume metadata: name: pgmq-ts1 spec: capacity: storage: 20Gi accessModes: - ReadWriteOnce nfs: path: /exports/pgmq server: rnd-osh-shd-t01 persistentVolumeReclaimPolicy: Recycle

申请光伏

oc apply -f pgmq_storage.yaml

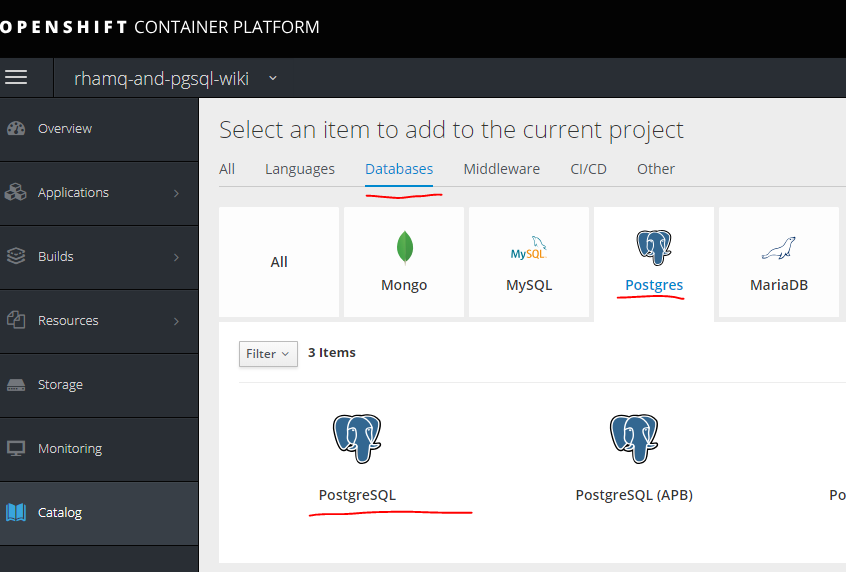

从模板创建

做完了

对于带有ssl群集等的其他选项。 您可以参考文档

access.redhat.com/documentation/en-us/red_hat_amq/7.2/html/deploying_amq_broker_on_openshift_container_platformPostgreSQL

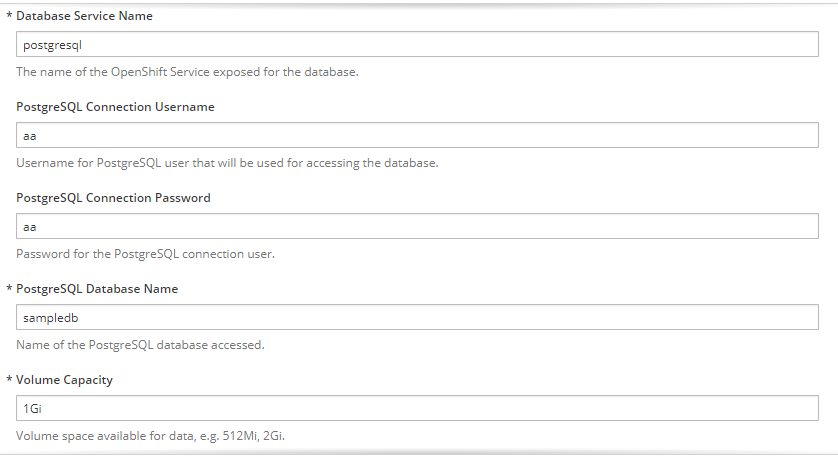

我们以与MQ相同的方式创建另一个PV。

mkdir -p /exports/pgmq2 chmod -R 777 /exports/pgmq2 chown nfsnobody:nfsnobody -R /exports/pgmq2 echo '"/exports/pgmq2" *(rw,root_squash)' >> /etc/exports.d/openshift-ansible.exports exportfs -ar restorecon -RvF

pgmq_storage.yaml

apiVersion: v1 kind: PersistentVolume metadata: name: pgmq-ts2 spec: capacity: storage: 20Gi accessModes: - ReadWriteOnce nfs: path: /exports/pgmq2 server: rnd-osh-shd-t01 persistentVolumeReclaimPolicy: Recycle

我们填写必要的参数

完成。

6.创建服务的单独项目,服务模板,管道,与gitlab集成,gitlab收录

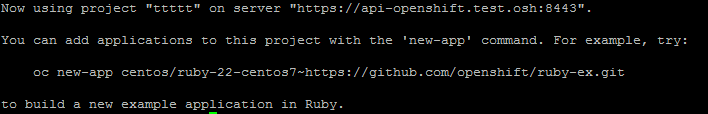

第一步是创建一个项目。 oc new-project ttttt

最初没有模板,您可以在手动应用程序中创建它。

有两种方法。首先是简单地使用现成的图像,但是图像的版本等将不可用,但在某些情况下将是相关的。

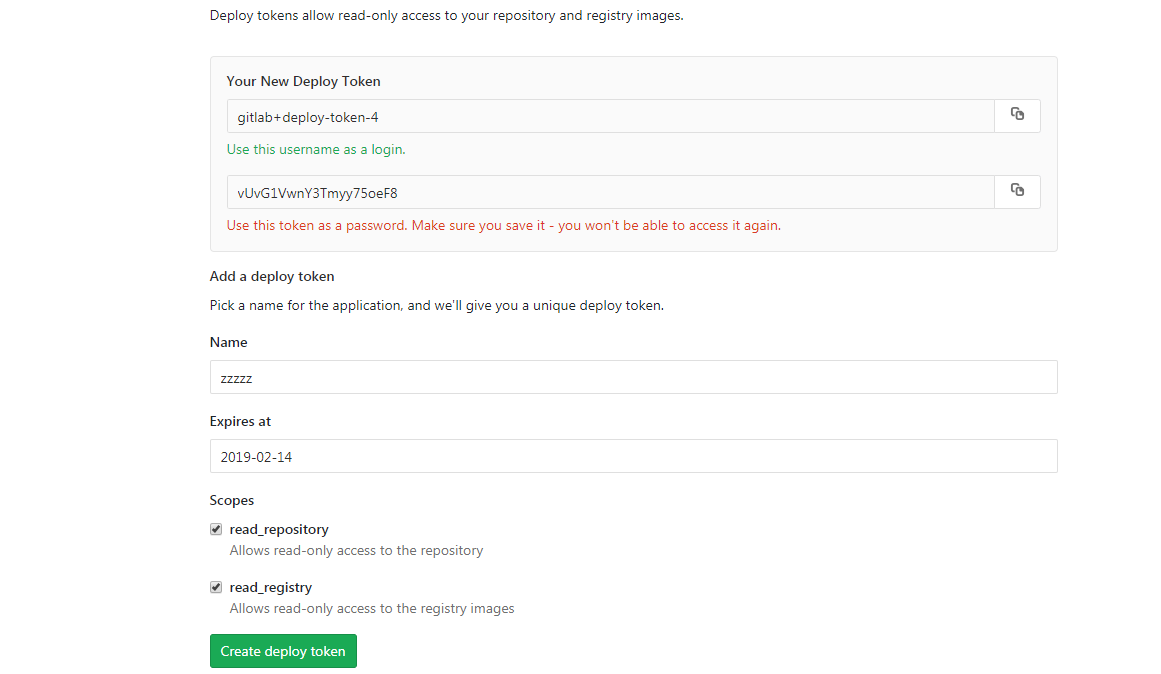

首先,您需要获取用于注册表验证的数据。 以Gitlab中的组装图像为例,这样做是这样的。

首先,您需要创建用于访问Docker注册表的机密-请参阅选项和语法。

oc create secret docker-registry

然后创建一个秘密

oc create secret docker-registry gitlabreg --docker-server='gitlab.xxx.com:4567' --docker-username='gitlab+deploy-token-1' --docker-password='syqTBSMjHtT_t-X5fiSY' --docker-email='email'

然后创建我们的应用程序。

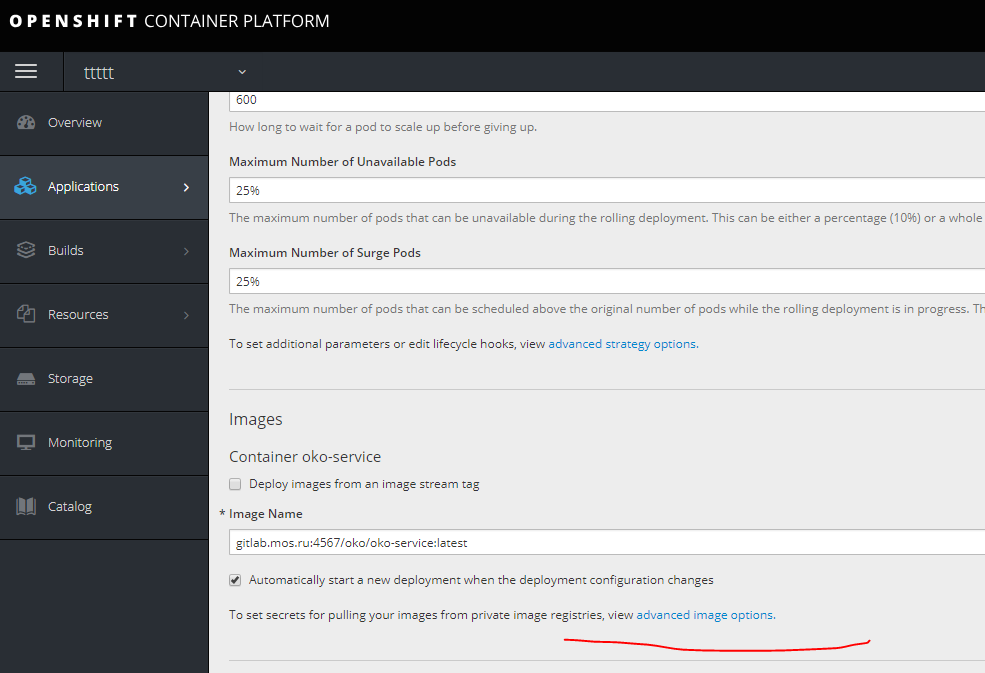

oc new-app --docker-image='gitlab.xxx.com:4567/oko/oko-service:latest'

如果出现问题,并且图像在应用程序设置中没有拉伸,请指定我们重试的秘诀。

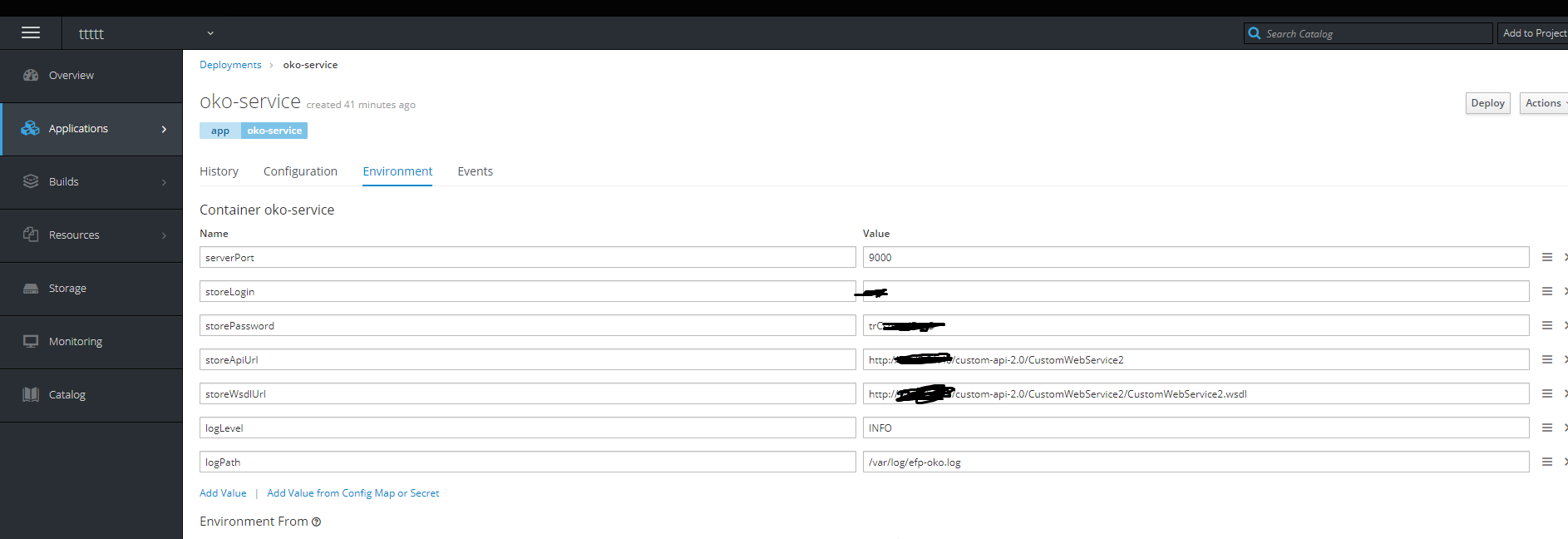

然后我们添加必要的环境变量。

完成-容器还活着。

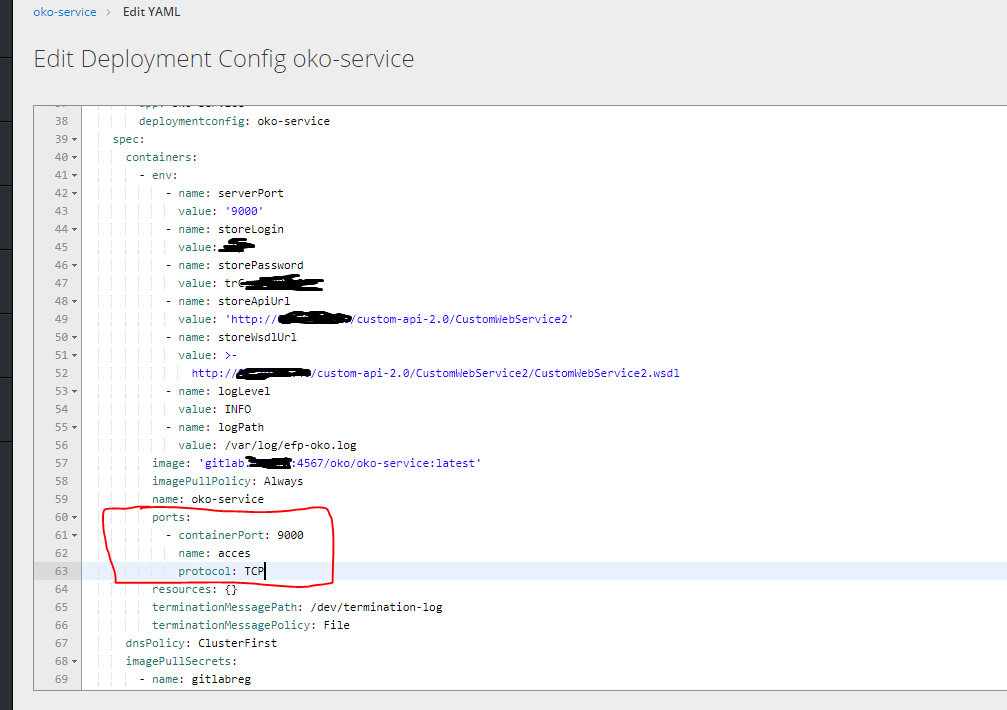

然后单击右侧的编辑yaml并指定端口。

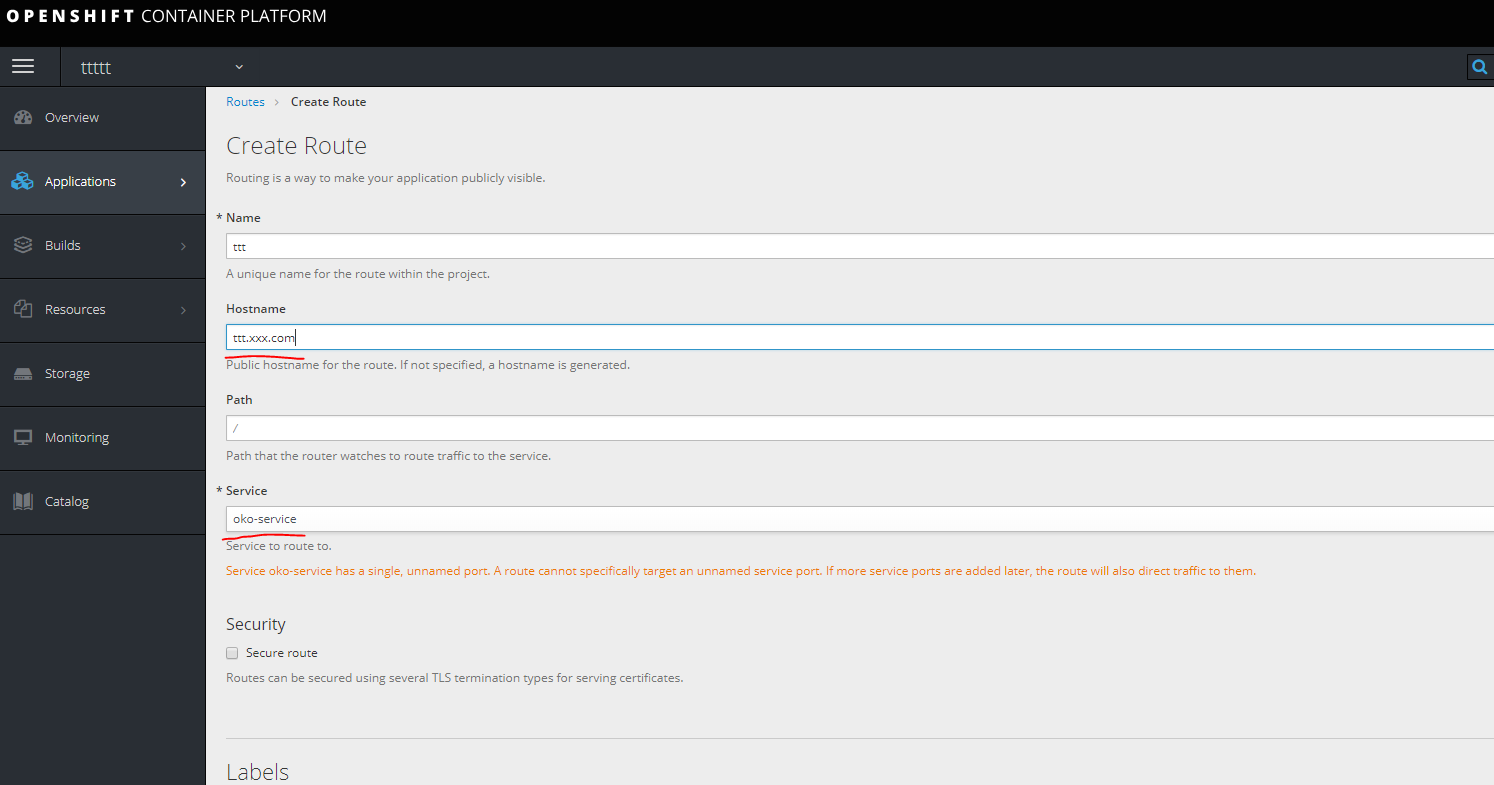

然后,要访问我们的容器,您需要创建一条路线,但是没有服务就无法创建它,因此首先需要创建服务。

service.yaml

kind: Service apiVersion: v1 metadata: name: oko-service spec: type: ClusterIP ports: - port: 9000 protocol: TCP targetPort: 9000 selector: app: oko-service sessionAffinity: None status: loadBalancer: {}

oc apply -f service.yaml

创建一条路线。

在查看以下节点之一的计算机上的主机中注册url。

在查看以下节点之一的计算机上的主机中注册url。

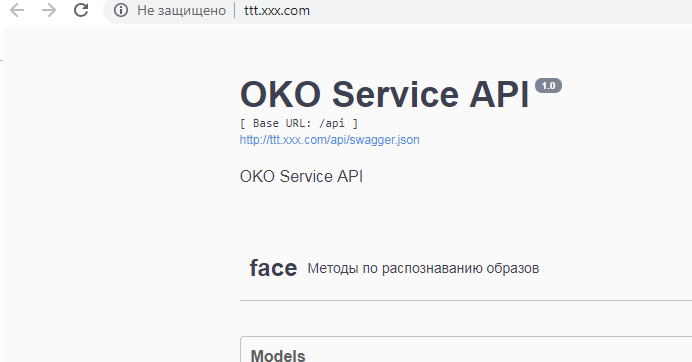

做完了

模板。通过分别在yaml中卸载与该服务相关的所有组件来创建模板。

即,在这种情况下,它是秘密dc服务路由。

您可以看到特定项目中已完成的所有操作

oc get all

卸载有趣

oc get deploymentconfig.apps.openshift.io oko-service -o yaml

或

oc get d oko-service -o yaml

然后,您可以将opensihft的任何模板作为基础,并整合收到的内容以获得模板。

在这种情况下,结果将如下所示:

template.yaml

kind: "Template" apiVersion: "v1" metadata: name: oko-service-template objects: - kind: DeploymentConfig apiVersion: v1 metadata: name: oko-service annotations: description: "ImageStream Defines how to build the application oko-service" labels: app: oko-service spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: app: oko-service deploymentconfig: oko-service template: metadata: labels: app: oko-service spec: selector: app: oko-service deploymentconfig: oko-service containers: - env: - name: serverPort value: "9000" - name: storeLogin value: "iii" - name: storePassword value: "trCsm5" - name: storeApiUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2" - name: storeWsdlUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2/CustomWebService2.wsdl" - name: logLevel value: "INFO" - name: logPath value: "/var/log/efp-oko.log" ports: - containerPort: 9000 name: acces protocol: TCP readinessProbe: failureThreshold: 3 httpGet: path: / port: 9000 scheme: HTTP initialDelaySeconds: 5 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 image: gitlab.xxx.com:4567/oko/oko-service imagePullPolicy: Always name: oko-service imagePullSecrets: - name: gitlab.xxx.com type: ImageChange strategy: activeDeadlineSeconds: 21600 resources: {} rollingParams: intervalSeconds: 1 maxSurge: 25% maxUnavailable: 25% timeoutSeconds: 600 updatePeriodSeconds: 5 type: Rolling triggers: - type: "ImageChange" imageChangeParams: automatic: true containerNames: - "oko-service" from: kind: ImageStream name: 'oko-service:latest' - kind: ImageStream apiVersion: v1 metadata: name: oko-service annotations: openshift.io/generated-by: OpenShiftNewApp labels: app: oko-service deploymentconfig: oko-service spec: dockerImageRepository: gitlab.xxx.com:4567/oko/oko-service tags: - annotations: openshift.io/imported-from: gitlab.xxx.com:4567/oko/oko-service from: kind: DockerImage name: gitlab.xxx.com:4567/oko/oko-service importPolicy: insecure: "true" name: latest referencePolicy: type: Source forcePull: true - kind: Service apiVersion: v1 metadata: name: oko-service spec: type: ClusterIP ports: - port: 9000 protocol: TCP targetPort: 9000 selector: app: oko-service sessionAffinity: None status: loadBalancer: {} - kind: Route apiVersion: route.openshift.io/v1 metadata: name: oko-service spec: host: oko-service.moxs.ru to: kind: Service name: oko-service weight: 100 wildcardPolicy: None status: ingress: - conditions: host: oko-service.xxx.com routerName: router wildcardPolicy: None

您可以在此处添加机密,在以下示例中,我们将研究在openshift端构建的服务选项,该机密将在模板中。

第二种方式通过Push创建一个具有图像组装的完整阶段,简单的管道和组装的项目。

首先,创建一个新项目。

首先,您需要从git创建一个Buildconfig(在这种情况下,项目具有三个docker文件,一个使用两个FROMs为上面的Docker版本1.17设计的常规docker文件,以及两个用于构建基础映像和目标dockerfile的单独的dockerfile。)

要访问git(如果它是私有的),您需要授权。 创建具有以下内容的机密。

oc create secret generic sinc-git --from-literal=username=gitsinc --from-literal=password=Paaasssword123

让我们为服务帐户构建者授予访问我们秘密的权限

oc secrets link builder sinc-git

将秘密绑定到网址git

oc annotate secret sinc-git 'build.openshift.io/source-secret-match-uri-1=https://gitlab.xxx.com/*'

最后,让我们尝试使用密钥--allow-missing-images从Gita创建一个应用程序,因为我们还没有组装基本的图像。

oc new-app

gitlab.xxx.com/OKO/oko-service.git --strategy = docker --allow-missing-images

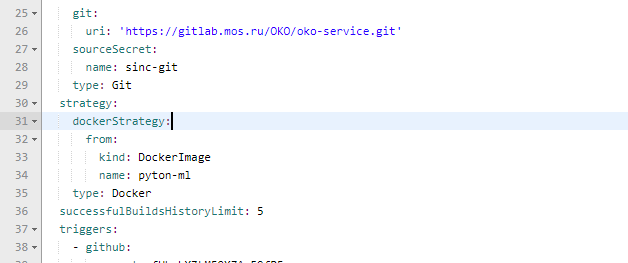

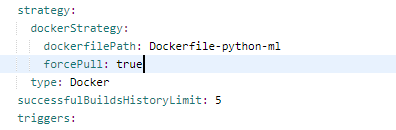

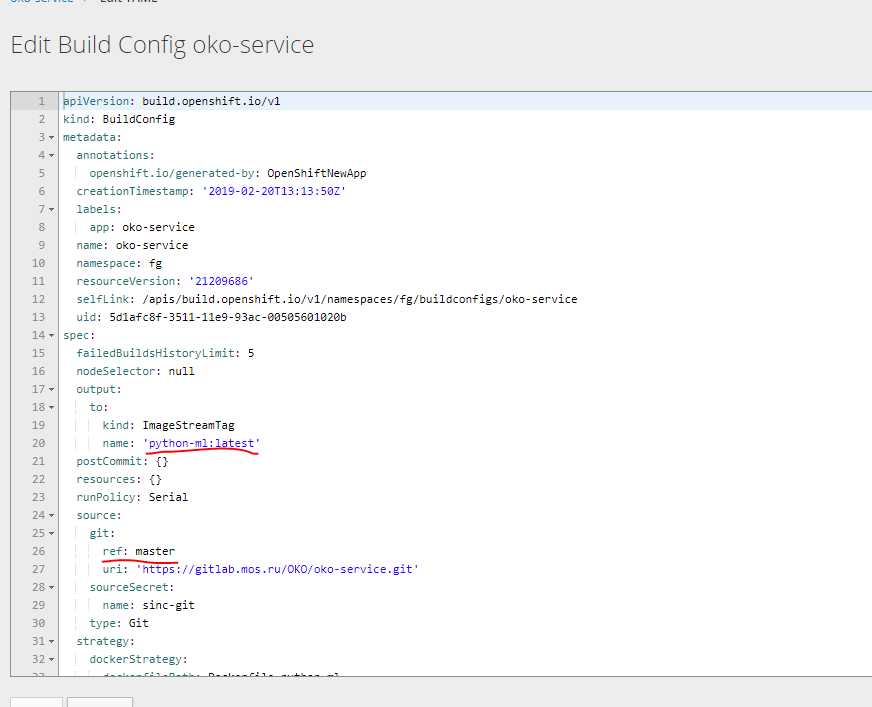

然后,在创建的buildconfig中,您需要为所需的dockerfile修复程序集。

正确的

我们还更改了参数以制作基本容器。

让我们尝试从该目录创建两个Buildcconfig,在基本映像下,卸载yaml并获取必要的。

您可以在输出中获得两个这样的模式。

公元前

kind: "BuildConfig" apiVersion: "v1" metadata: name: "oko-service-build-pyton-ml" labels: app: oko-service spec: completionDeadlineSeconds: 2400 triggers: - type: "ImageChange" source: type: git git: uri: "https://gitlab.xxx.com/OKO/oko-service.git" ref: "master" sourceSecret: name: git-oko strategy: type: Docker dockerStrategy: dockerfilePath: Dockerfile-python-ml forcePull: true output: to: kind: "ImageStreamTag" name: "python-ml:latest"

公元前

kind: "BuildConfig" apiVersion: "v1" metadata: name: "oko-service-build" labels: app: oko-service spec: completionDeadlineSeconds: 2400 triggers: - type: "ImageChange" source: type: git git: uri: "https://gitlab.xxx.xom/OKO/oko-service.git" ref: "master" sourceSecret: name: git-oko strategy: type: Docker dockerStrategy: dockerfilePath: Dockerfile-oko-service from: kind: ImageStreamTag name: "python-ml:latest" forcePull: true env: - name: serverPort value: "9000" - name: storeLogin value: "iii" - name: storePassword value: "trCsn5" - name: storeApiUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2" - name: storeWsdlUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2/CustomWebService2.wsdl" - name: logLevel value: "INFO" - name: logPath value: "/var/log/efp-oko.log" output: to: kind: "ImageStreamTag" name: "oko-service:latest"

我们还需要创建一个deployconfig两个映像流,并完成服务和路由的部署。

我不希望不单独产生所有配置,而是立即创建一个包含该服务所有组件的模板。 基于先前版本的模板,无需汇编。

template kind: "Template" apiVersion: "v1" metadata: name: oko-service-template objects: - kind: Secret apiVersion: v1 type: kubernetes.io/basic-auth metadata: name: git-oko annotations: build.openshift.io/source-secret-match-uri-1: https://gitlab.xxx.com/* data: password: R21ZFSw== username: Z2l0cec== - kind: "BuildConfig" apiVersion: "v1" metadata: name: "oko-service-build-pyton-ml" labels: app: oko-service spec: completionDeadlineSeconds: 2400 triggers: - type: "ImageChange" source: type: git git: uri: "https://gitlab.xxx.com/OKO/oko-service.git" ref: "master" sourceSecret: name: git-oko strategy: type: Docker dockerStrategy: dockerfilePath: Dockerfile-python-ml forcePull: true output: to: kind: "ImageStreamTag" name: "python-ml:latest" - kind: "BuildConfig" apiVersion: "v1" metadata: name: "oko-service-build" labels: app: oko-service spec: completionDeadlineSeconds: 2400 triggers: - type: "ImageChange" source: type: git git: uri: "https://gitlab.xxx.com/OKO/oko-service.git" ref: "master" sourceSecret: name: git-oko strategy: type: Docker dockerStrategy: dockerfilePath: Dockerfile-oko-service from: kind: ImageStreamTag name: "python-ml:latest" forcePull: true env: - name: serverPort value: "9000" - name: storeLogin value: "iii" - name: storePassword value: "trC" - name: storeApiUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2" - name: storeWsdlUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2/CustomWebService2.wsdl" - name: logLevel value: "INFO" - name: logPath value: "/var/log/efp-oko.log" output: to: kind: "ImageStreamTag" name: "oko-service:latest" - kind: DeploymentConfig apiVersion: v1 metadata: name: oko-service annotations: description: "ImageStream Defines how to build the application oko-service" labels: app: oko-service spec: replicas: 1 revisionHistoryLimit: 10 selector: matchLabels: app: oko-service deploymentconfig: oko-service template: metadata: labels: app: oko-service spec: selector: app: oko-service deploymentconfig: oko-service containers: - env: - name: serverPort value: "9000" - name: storeLogin value: "iii" - name: storePassword value: "trCsn5" - name: storeApiUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2" - name: storeWsdlUrl value: "http://14.75.41.20/custom-api-2.0/CustomWebService2/CustomWebService2.wsdl" - name: logLevel value: "INFO" - name: logPath value: "/var/log/efp-oko.log" ports: - containerPort: 9000 name: acces protocol: TCP readinessProbe: failureThreshold: 3 httpGet: path: / port: 9000 scheme: HTTP initialDelaySeconds: 5 periodSeconds: 10 successThreshold: 1 timeoutSeconds: 1 image: docker-registry.default.svc:5000/oko-service-p/oko-service imagePullPolicy: Always name: oko-service type: ImageChange strategy: activeDeadlineSeconds: 21600 resources: {} rollingParams: intervalSeconds: 1 maxSurge: 25% maxUnavailable: 25% timeoutSeconds: 600 updatePeriodSeconds: 5 type: Rolling triggers: - type: "ImageChange" imageChangeParams: automatic: true containerNames: - "oko-service" from: kind: ImageStreamTag name: 'oko-service:latest' - kind: ImageStream apiVersion: v1 metadata: name: oko-service annotations: openshift.io/generated-by: OpenShiftNewApp labels: app: oko-service deploymentconfig: oko-service spec: dockerImageRepository: "" tags: - annotations: openshift.io/imported-from: oko-service from: kind: DockerImage name: oko-service importPolicy: insecure: "true" name: latest referencePolicy: type: Source - kind: ImageStream apiVersion: v1 metadata: name: python-ml spec: dockerImageRepository: "" tags: - annotations: openshift.io/imported-from: oko-service-build from: kind: DockerImage name: python-ml importPolicy: insecure: "true" name: latest referencePolicy: type: Source - kind: Service apiVersion: v1 metadata: name: oko-service spec: type: ClusterIP ports: - port: 9000 protocol: TCP targetPort: 9000 selector: app: oko-service sessionAffinity: None status: loadBalancer: {} - kind: Route apiVersion: route.openshift.io/v1 metadata: name: oko-service spec: host: oko-service.xxx.com to: kind: Service name: oko-service weight: 100 wildcardPolicy: None status: ingress: - conditions: host: oko-service.xxx.com routerName: router wildcardPolicy: None

该模板是为项目oko-service-p创建的,因此您需要考虑这一点。

您可以使用变量自动替换所需的值。

我再重复一次,可以使用oc get ... -o yaml上传数据来获取基本Yaml。

您可以按以下方式使用此模板进行扫描

oc process -f oko-service-templatebuild.yaml | oc create -f -

然后创建管道

oko-service-pipeline.yaml

kind: "BuildConfig" apiVersion: "v1" type: "GitLab" gitlab: secret: "secret101" metadata: name: "oko-service-sample-pipeline" spec: strategy: jenkinsPipelineStrategy: jenkinsfile: |- // path of the template to use // def templatePath = 'https://raw.githubusercontent.com/openshift/nodejs-ex/master/openshift/templates/nodejs-mongodb.json' // name of the template that will be created def templateName = 'oko-service-template' // NOTE, the "pipeline" directive/closure from the declarative pipeline syntax needs to include, or be nested outside, pipeline { agent any environment { DEV_PROJECT = "oko-service"; } stages { stage('deploy') { steps { script { openshift.withCluster() { openshift.withProject() { echo "Hello from project ${openshift.project()} in cluster ${openshift.cluster()}" def dc = openshift.selector('dc', "${DEV_PROJECT}") openshiftBuild(buildConfig: 'oko-service-build', waitTime: '3000000') openshiftDeploy(deploymentConfig: 'oko-service', waitTime: '3000000') } } } } } } // stages } // pipeline type: JenkinsPipeline triggers: - type: GitLab gitlab: secret: ffffffffk

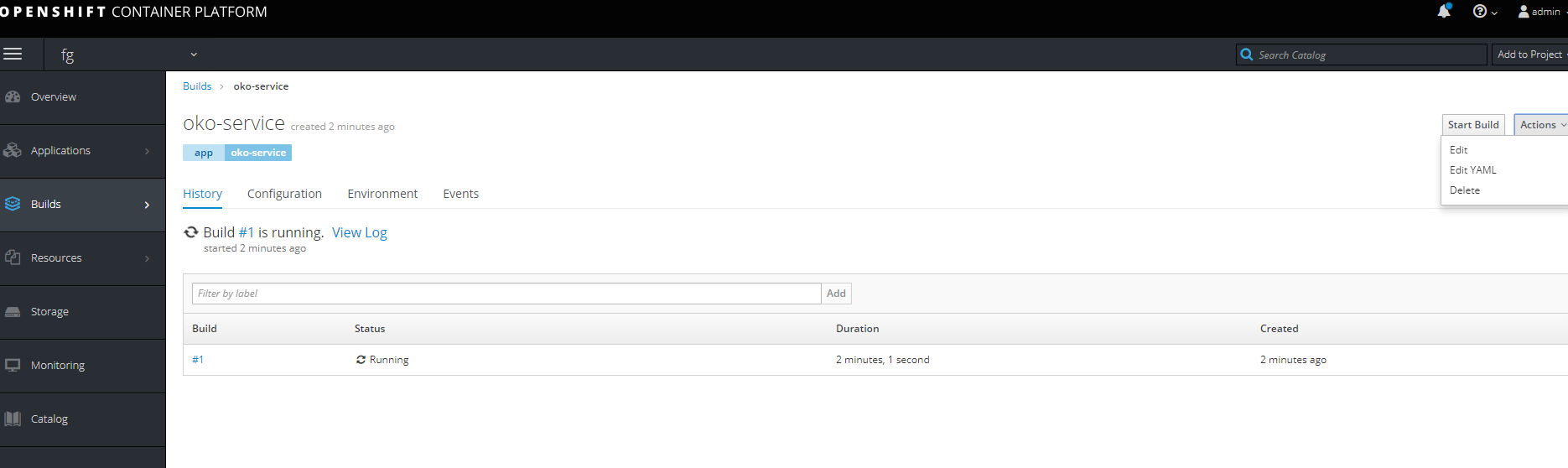

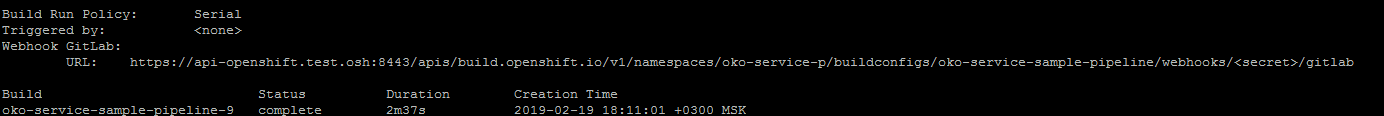

通过运行应用管道配置后

oc describe buildconfig oko-service-sample-pipeline

可以在gitlab中获取Webhook的网址。

用配置中指定的密码替换密码。

同样,在应用Pipeline之后,openshift本身将开始在项目中安装jenkins以启动Pipeline。 初始启动时间很长,因此您需要等待一段时间。

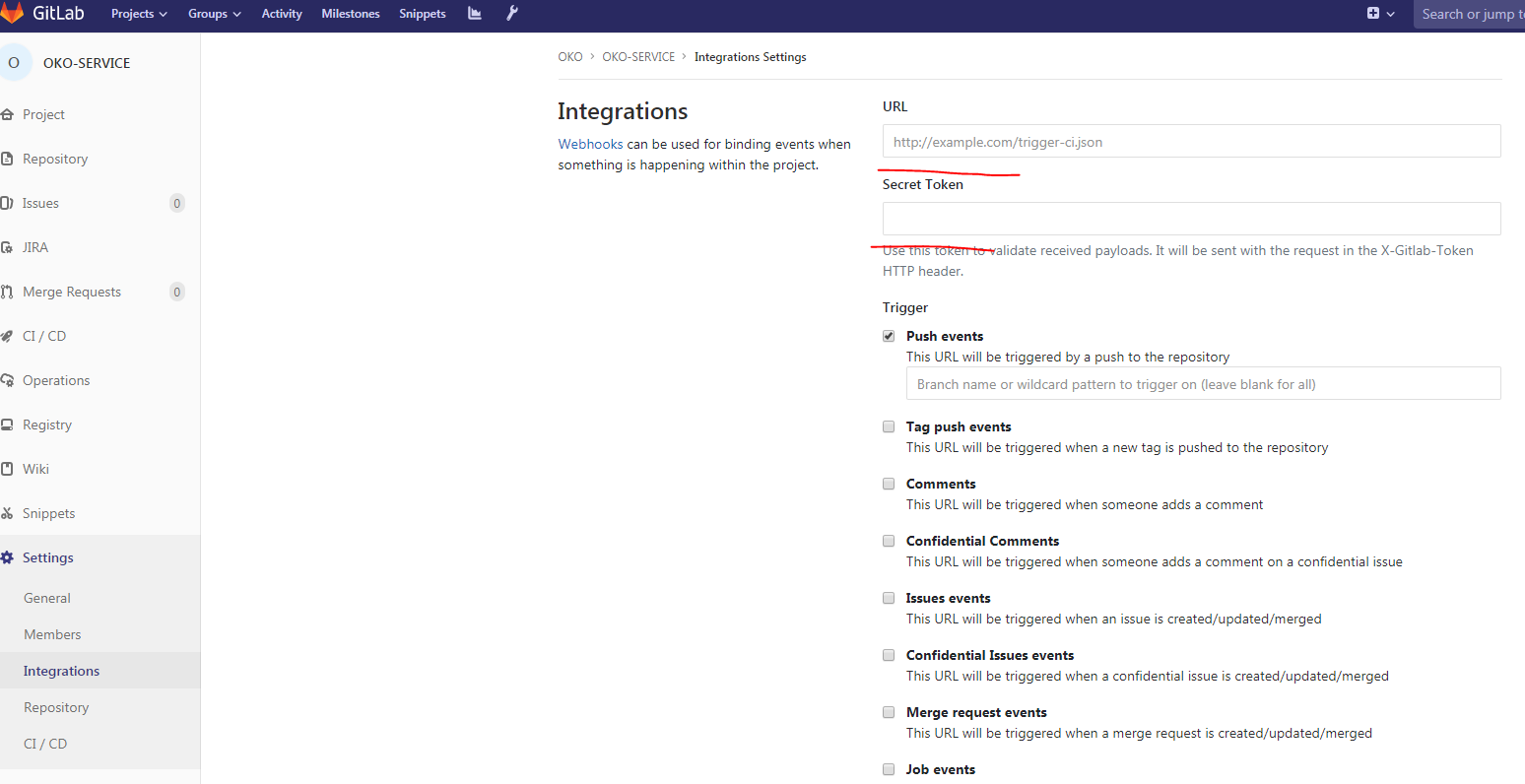

然后在我们的项目的Gitlab中:

填写网址,秘密,删除启用SSL验证,我们的网络挂钩已准备就绪。

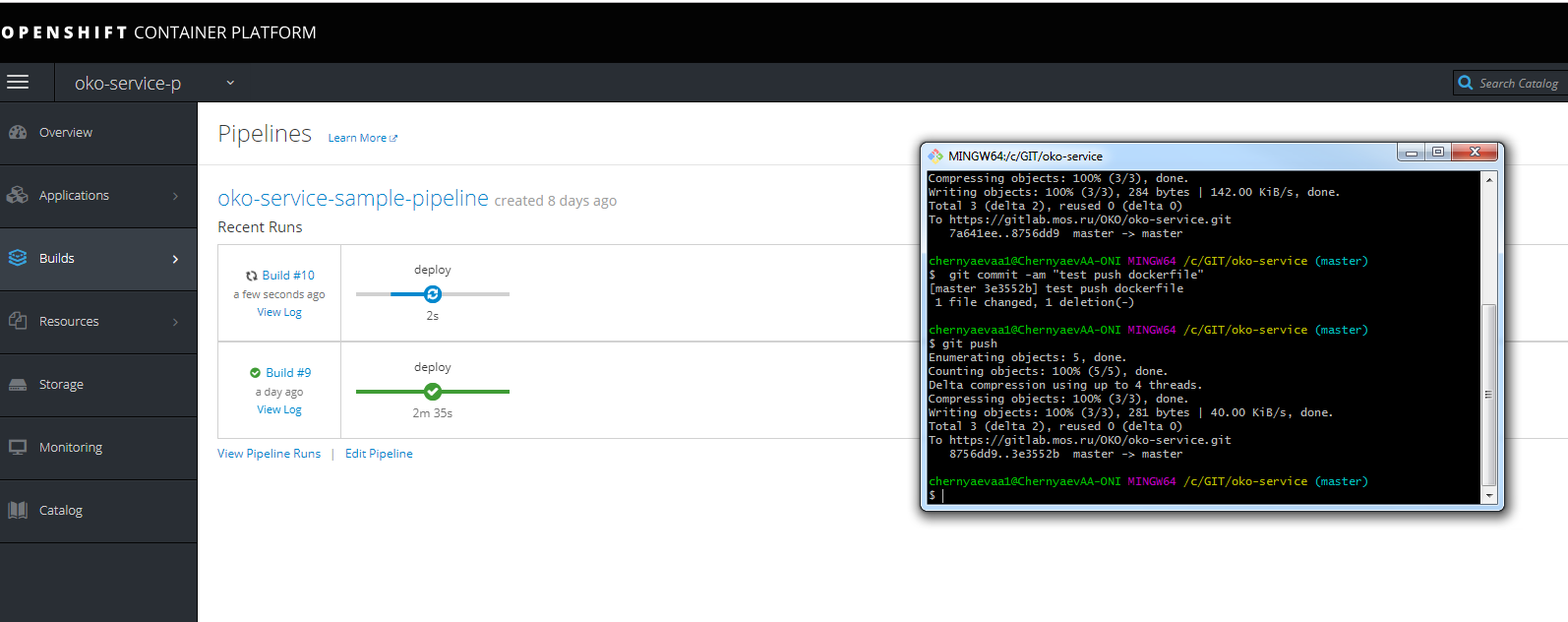

您可以进行测试推送并查看组装进度

不要忘记在主机URL中注册以获取基础节点上相同的詹金斯。

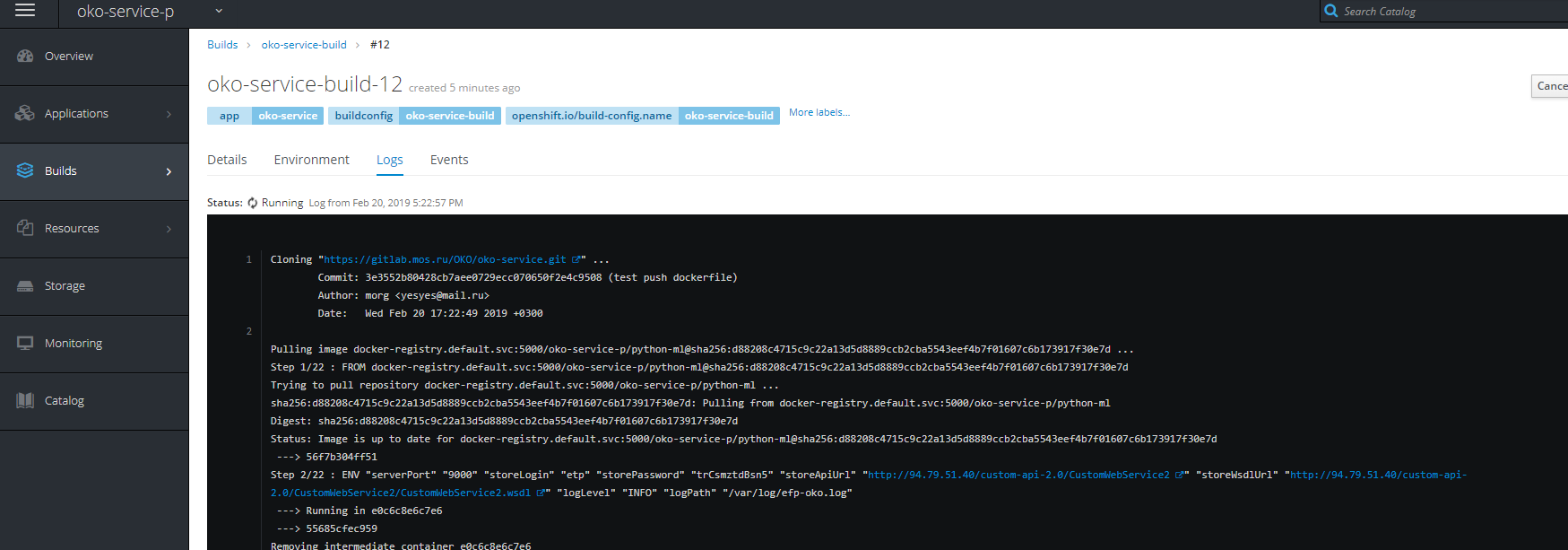

您还可以查看装配进度。

PS:我希望本文能帮助许多人了解openshift的吃法和方法,并阐明许多乍一看并不明显的观点。

PSS一些解决方案,解决一些问题

启动构建时出现问题,等等。

-为项目创建一个服务帐户

oc create serviceaccount oko-serviceaccount oc adm policy add-scc-to-user privileged system:serviceaccount:__:oko-serviceaccount oc adm policy add-scc-to-group anyuid system:authenticated oc adm policy add-scc-to-user anyuid system:serviceaccount:__:oko-serviceaccount

项目冻结而不是不删除的问题-强制完成脚本(先天性疾病) for i in $(oc get projects | grep Terminating| awk '{print $1}'); do echo $i; oc get serviceinstance -n $i -o yaml | sed "/kubernetes-incubator/d"| oc apply -f - ; done

下载图像时出现问题。 oc adm policy add-role-to-group system:image-puller system:serviceaccounts:__ oc adm policy add-role-to-user system:image-puller system:serviceaccount:__::default oc adm policy add-role-to-group system:image-puller system:serviceaccounts:__ oc policy add-role-to-user system:image-puller system:serviceaccount:__::default oc policy add-role-to-group system:image-puller system:serviceaccounts:__

还要覆盖nfs上注册表的文件夹权限。(注册表日志中存在写入错误,该构建挂起了推送)。

还要覆盖nfs上注册表的文件夹权限。(注册表日志中存在写入错误,该构建挂起了推送)。 chmod 777 -r /exports/registry/docker/registry/ chmod -R 777 /exports/registry/docker/registry/ chown nfsnobody:nfsnobody -R /exports/registry/ hown -R 1001 /exports/registry/ restorecon -RvF exportfs -ar