您是否想知道劳动力市场的情况,尤其是数据科学领域的情况?

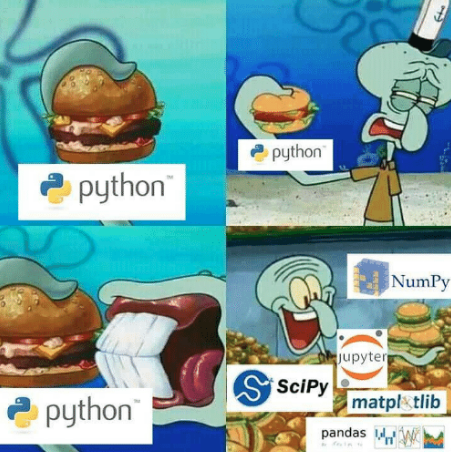

如果您了解Python和Pandas,猎头解析似乎是最可靠,最简单的方法之一。

代码适用于Python3.6和Pandas 0.24.2

Ipython可以在这里下载。

要检查Pandas(Linux / MacOS)控制台的版本,请执行以下操作:

ipython

然后在命令行上

#ipython import pandas as pd pd.__version__

# () pip install pandas==0.24.2

已经配置了所有内容? 走吧

Python中的Parsim

HH可让您在俄罗斯找工作。 该招聘资源具有最大的职位空缺数据库。 HH共享一个方便的api。

谷歌了一下,原来是写一个解析器。

# https://gist.github.com/fuwiak/9c695b51c33b2e052c5a721383705a9c # (BASH) python3 hh_parser.py import requests import pandas as pd number_of_pages = 100 #number_of_ads = number_of_pages * per_page job_title = ["'Data Analyst' and 'data scientist'"] for job in job_title: data=[] for i in range(number_of_pages): url = 'https://api.hh.ru/vacancies' par = {'text': job, 'area':'113','per_page':'10', 'page':i} r = requests.get(url, params=par) e=r.json() data.append(e) vacancy_details = data[0]['items'][0].keys() df = pd.DataFrame(columns= list(vacancy_details)) ind = 0 for i in range(len(data)): for j in range(len(data[i]['items'])): df.loc[ind] = data[i]['items'][j] ind+=1 csv_name = job+".csv" df.to_csv(csv_name)

结果,我们得到了一个在job_title中指定名称的csv文件。

在指定的一个带有空缺短语的文件中,将下载该文件

“数据分析师”和“数据科学家”。 如果要单独将行更改为

job_title=['Data Analyst', 'Data Scientist']

然后,您将获得2个具有这些名称的文件。

有趣的是,除“和”外还有其他运算符。 在他们的帮助下,您可以搜索完全匹配。 此处有更多详细信息。

https://hh.ru/article/309400

现在几点 熊猫时间!

以这种方式收集的广告将根据其中包含的信息或对元数据的描述而分为几组。 例如:城市; 位置 付费插头 工作类别。 在这种情况下,一个广告可能属于几个类别。

我将使用jupyter-notebook处理与“数据科学家”职位相关的数据。 https://jupyter.org/

如何更改“未命名”列的名称?

最重要的问题-ZP

import ast # run code from string for example ast.literal_eval("1+1") salaries = df.salary.dropna() # remove all NA's from dataframe currencies = [ast.literal_eval(salaries.iloc[i])['currency'] for i in range(len(salaries))] curr = set(currencies) #{'EUR', 'RUR', 'USD'} #divide dataframe salararies by currency rur = [ast.literal_eval(salaries.iloc[i]) for i in range(len(salaries)) if ast.literal_eval(salaries.iloc[i])['currency']=='RUR'] eur = [ast.literal_eval(salaries.iloc[i]) for i in range(len(salaries)) if ast.literal_eval(salaries.iloc[i])['currency']=='EUR'] usd = [ast.literal_eval(salaries.iloc[i]) for i in range(len(salaries)) if ast.literal_eval(salaries.iloc[i])['currency']=='USD']

原来是将薪金划分为货币,您可以尝试自己进行分析,例如仅针对欧元。 我现在只处理卢布的薪水

fr = [x['from'] for x in rur] # lower range of salary fr = list(filter(lambda x: x is not None, fr)) # remove NA's from lower range [0, 100, 200,...] to = [x['to'] for x in rur] #upper range of salary to = list(filter(lambda x: x is not None, to)) #remove NA's from upper range [100, 200, 300,...] import numpy as np salary_range = list(zip(fr, to)) # concatenate upper and lower range [(0,100), (100, 200), (200, 300)...] av = map(np.mean, salary_range) # convert [(0,100), (100, 200), (200, 300)...] to [50, 150, 250,...] av = round(np.mean(list(av)),1) # average value from [50, 150, 250,...] print("average salary as Data Scientist ", av, "rubles")

最终,我们如期获得了约15万卢布的收入。

对于平均薪水,我有以下条件:

- 没有考虑没有指定工资的职位空缺(df.salary.dropna)

- 只拿卢布的工资

- 如果有塞子,则取平均值(例如,塞子从10,000卢布到20,000卢布→15,000卢布)。

我会向巨魔,虚弱的人和业余爱好者说以下话,以寻求秘密含义:我不是hh.ru的雇员; 本文不是广告; 我没得到她一分钱。 祝大家好运。

红利

数据科学领域对6月的需求如何?

from collections import Counter vacancy_names = df.name # change here to change source of data/words etc cloud = Counter(vacancy_names) from wordcloud import WordCloud, STOPWORDS stopwords = set(STOPWORDS) cloud = '' for x in list(vacancy_names): cloud+=x+' ' wordcloud = WordCloud(width = 800, height = 800, stopwords = stopwords, min_font_size = 8,background_color='white' ).generate(cloud) import matplotlib.pylab as plt plt.figure(figsize = (16, 16)) plt.imshow(wordcloud) plt.savefig('vacancy_cloud.png')

[REPO]( https://github.com/fuwiak/HH )

编辑:

Zoldaten用户版本

解析器……

除某些拐杖外,该代码无版权。

# !/usr/bin/python3 # -*- coding: utf-8 -*- import sys import xlsxwriter # pip install XlsxWriter import requests # pip install requests from bs4 import BeautifulSoup as bs # pip install beautifulsoup4 headers = {'accept': '*/*', 'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36'} vacancy = input(' : ') base_url = f'https://hh.ru/search/vacancy?area=1&search_period=30&text={vacancy}&page=' # area=1 - , search_period=3 - 30 pages = int(input(' - : ')) #+ jobs =[] def hh_parse(base_url, headers): zero = 0 while pages > zero: zero = str(zero) session = requests.Session() request = session.get(base_url + zero, headers = headers) if request.status_code == 200: soup = bs(request.content, 'html.parser') divs = soup.find_all('div', attrs = {'data-qa': 'vacancy-serp__vacancy'}) for div in divs: title = div.find('a', attrs = {'data-qa': 'vacancy-serp__vacancy-title'}).text compensation = div.find('div', attrs={'data-qa': 'vacancy-serp__vacancy-compensation'}) if compensation == None: # compensation = 'None' else: compensation = div.find('div', attrs={'data-qa': 'vacancy-serp__vacancy-compensation'}).text href = div.find('a', attrs = {'data-qa': 'vacancy-serp__vacancy-title'})['href'] try: company = div.find('a', attrs = {'data-qa': 'vacancy-serp__vacancy-employer'}).text except: company = 'None' text1 = div.find('div', attrs = {'data-qa': 'vacancy-serp__vacancy_snippet_responsibility'}).text text2 = div.find('div', attrs = {'data-qa': 'vacancy-serp__vacancy_snippet_requirement'}).text content = text1 + ' ' + text2 all_txt = [title, compensation, company, content, href] jobs.append(all_txt) zero = int(zero) zero += 1 else: print('error') # Excel workbook = xlsxwriter.Workbook('Vacancy.xlsx') worksheet = workbook.add_worksheet() # bold = workbook.add_format({'bold': 1}) bold.set_align('center') center_H_V = workbook.add_format() center_H_V.set_align('center') center_H_V.set_align('vcenter') center_V = workbook.add_format() center_V.set_align('vcenter') cell_wrap = workbook.add_format() cell_wrap.set_text_wrap() # worksheet.set_column(0, 0, 35) # A https://xlsxwriter.readthedocs.io/worksheet.html#set_column worksheet.set_column(1, 1, 20) # B worksheet.set_column(2, 2, 40) # C worksheet.set_column(3, 3, 135) # D worksheet.set_column(4, 4, 45) # E worksheet.write('A1', '', bold) worksheet.write('B1', '', bold) worksheet.write('C1', '', bold) worksheet.write('D1', '', bold) worksheet.write('E1', '', bold) row = 1 col = 0 for i in jobs: worksheet.write_string (row, col, i[0], center_V) worksheet.write_string (row, col + 1, i[1], center_H_V) worksheet.write_string (row, col + 2, i[2], center_H_V) worksheet.write_string (row, col + 3, i[3], cell_wrap) # worksheet.write_url (row, col + 4, i[4], center_H_V) worksheet.write_url (row, col + 4, i[4]) row += 1 print('OK') workbook.close() hh_parse(base_url, headers)