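Translator's note: the author of this article, Erkan Erol, an engineer at SAP, shares his research into how the kubectl exec command works, a command familiar to everyone who works with Kubernetes. He accompanies the whole algorithm with listings of the Kubernetes source code (and related projects), which let you dig into the topic as deeply as you need.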

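One Friday, a colleague came up to me and asked how to execute a command in a pod using client-go. I couldn't answer him, and I suddenly realized that I knew nothing about how kubectl exec works. Yes, I had some ideas about its internals, but I wasn't 100% sure they were correct, so I decided to fix that. After studying blogs, documentation and source code, I learned a lot of new things, and in this article I want to share my findings and understanding. If something is wrong, please contact me on Twitter.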

Preparation

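To create a cluster on my MacBook, I cloned ecomm-integration-ballerina/kubernetes-cluster. Then I corrected the nodes' IP addresses in the kubelet configuration, because the default settings did not allow kubectl exec to work. You can read more about the reason here.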

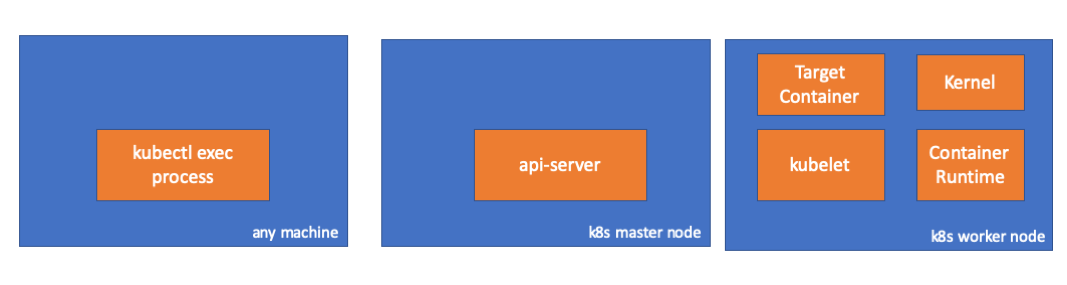

- Any machine = my MacBook

- Master IP = 192.168.205.10

- Worker node IP = 192.168.205.11

- API server port = 6443

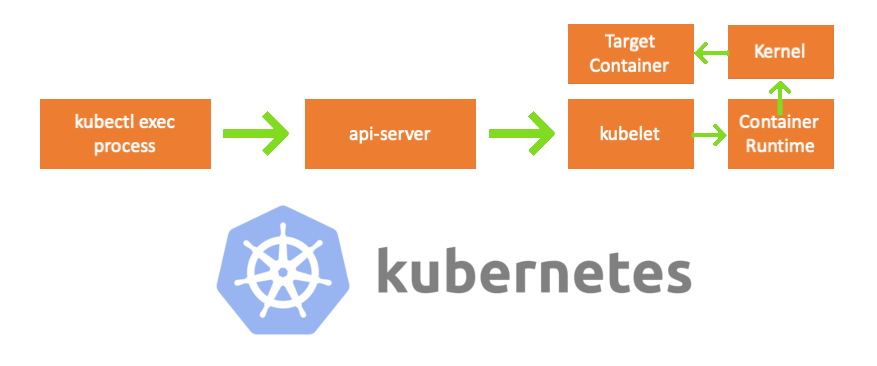

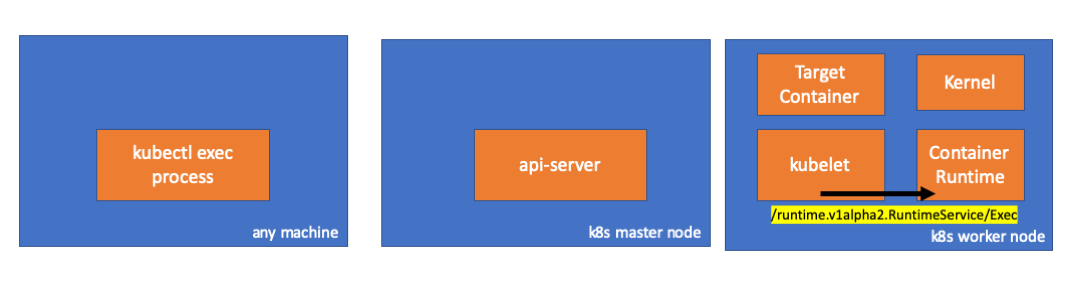

Components

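- kubectl exec process: when we run "kubectl exec ...", this process starts. You can run it on any machine that has access to the K8s API server. Translator's note: in the console listings below, the author uses the comment "any machine", meaning that the commands that follow can be run on any machine with access to Kubernetes.

- api server: a component on the master node that exposes the Kubernetes API. It is the front end of the Kubernetes control plane.

- kubelet: an agent that runs on every node in the cluster. It makes sure that containers are running in a pod.

- container runtime: the software responsible for running containers. Examples: Docker, CRI-O, containerd...

- kernel: the OS kernel on the worker node; it is responsible for process management.

- target container: a container that is part of a pod and runs on one of the worker nodes.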

What I found

1. Client-side activity

Create a pod in the default namespace:

```
// any machine
$ kubectl run exec-test-nginx --image=nginx
```

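Then we run the exec command and, inside the shell, sleep for 5000 seconds so that we have time to observe what is going on:

```
// any machine
$ kubectl exec -it exec-test-nginx-6558988d5-fgxgg -- sh
# sleep 5000
```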

A kubectl process appears (pid=8507 in our case):

```
// any machine
$ ps -ef |grep kubectl
501 8507 8409 0 7:19PM ttys000 0:00.13 kubectl exec -it exec-test-nginx-6558988d5-fgxgg -- sh
```

If we check the network activity of this process, we find that it has connections to the api-server (192.168.205.10, port 6443):

```
// any machine
$ netstat -atnv |grep 8507
tcp4 0 0 192.168.205.1.51673 192.168.205.10.6443 ESTABLISHED 131072 131768 8507 0 0x0102 0x00000020
tcp4 0 0 192.168.205.1.51672 192.168.205.10.6443 ESTABLISHED 131072 131768 8507 0 0x0102 0x00000028
```

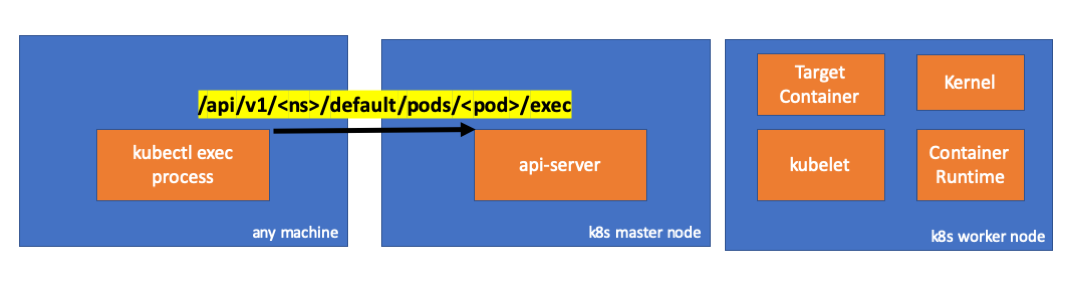

Let's look at the code. Kubectl builds a POST request for the exec subresource and sends it as a REST request:

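```go
// Excerpt: build a POST request for the "exec" subresource of the pod.
req := restClient.Post().
	Resource("pods").
	Name(pod.Name).
	Namespace(pod.Namespace).
	SubResource("exec")
// Encode the exec options (container, command, which streams to attach)
// as query parameters.
req.VersionedParams(&corev1.PodExecOptions{
	Container: containerName,
	Command:   p.Command,
	Stdin:     p.Stdin,
	Stdout:    p.Out != nil,
	Stderr:    p.ErrOut != nil,
	TTY:       t.Raw,
}, scheme.ParameterCodec)

// Upgrade the connection and stream stdin/stdout/stderr over it.
return p.Executor.Execute("POST", req.URL(), p.Config, p.In, p.Out, p.ErrOut, t.Raw, sizeQueue)
```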

(kubectl/pkg/cmd/exec/exec.go)
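To see roughly what such a request looks like on the wire, here is a standard-library-only Go sketch that assembles a comparable URL by hand. This is not how kubectl does it (kubectl relies on client-go's REST client and `scheme.ParameterCodec`); the `execOptions` struct and `buildExecURL` helper are hypothetical, and only the query parameter names (`container`, `command`, `stdin`, `stdout`, `stderr`, `tty`) follow the JSON names of the `PodExecOptions` fields.

```go
package main

import (
	"fmt"
	"net/url"
)

// execOptions is a hypothetical, trimmed-down stand-in for corev1.PodExecOptions.
type execOptions struct {
	Container string
	Command   []string
	Stdin     bool
	Stdout    bool
	Stderr    bool
	TTY       bool
}

// buildExecURL assembles the POST URL for the pods/exec subresource.
func buildExecURL(apiServer, namespace, pod string, o execOptions) string {
	q := url.Values{}
	if o.Container != "" {
		q.Set("container", o.Container)
	}
	for _, arg := range o.Command {
		q.Add("command", arg) // each argv element becomes its own "command" parameter
	}
	flags := map[string]bool{"stdin": o.Stdin, "stdout": o.Stdout, "stderr": o.Stderr, "tty": o.TTY}
	for name, on := range flags {
		if on {
			q.Set(name, "true")
		}
	}
	u := url.URL{
		Scheme:   "https",
		Host:     apiServer,
		Path:     fmt.Sprintf("/api/v1/namespaces/%s/pods/%s/exec", namespace, pod),
		RawQuery: q.Encode(), // Encode sorts parameters by key, so the output is stable
	}
	return u.String()
}

func main() {
	fmt.Println(buildExecURL("192.168.205.10:6443", "default", "exec-test-nginx-6558988d5-fgxgg",
		execOptions{Command: []string{"sh"}, Stdin: true, Stdout: true, TTY: true}))
}
```

It prints `https://192.168.205.10:6443/api/v1/namespaces/default/pods/exec-test-nginx-6558988d5-fgxgg/exec?command=sh&stdin=true&stdout=true&tty=true`; the path is the same one the api-server logs for our request.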

2. Activity on the master node

We can also observe the request on the api-server side:

```
handler.go:143] kube-apiserver: POST "/api/v1/namespaces/default/pods/exec-test-nginx-6558988d5-fgxgg/exec" satisfied by gorestful with webservice /api/v1
upgradeaware.go:261] Connecting to backend proxy (intercepting redirects) https://192.168.205.11:10250/exec/default/exec-test-nginx-6558988d5-fgxgg/exec-test-nginx?command=sh&input=1&output=1&tty=1
Headers: map[Connection:[Upgrade] Content-Length:[0] Upgrade:[SPDY/3.1] User-Agent:[kubectl/v1.12.10 (darwin/amd64) kubernetes/e3c1340] X-Forwarded-For:[192.168.205.1] X-Stream-Protocol-Version:[v4.channel.k8s.io v3.channel.k8s.io v2.channel.k8s.io channel.k8s.io]]
```
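The `X-Stream-Protocol-Version` header in this log lists the stream protocol versions kubectl is willing to speak, newest first, and the side answering the upgrade has to pick one. Below is a minimal Go sketch of that choice, assuming the server simply takes the first client-offered version it also supports. The function name `negotiate` and the server's supported list are hypothetical.

```go
package main

import "fmt"

// negotiate returns the first protocol in the client's preference order that
// the server also supports, or "" if there is no overlap.
func negotiate(client, server []string) string {
	supported := make(map[string]bool, len(server))
	for _, p := range server {
		supported[p] = true
	}
	for _, p := range client {
		if supported[p] {
			return p
		}
	}
	return ""
}

func main() {
	// Versions offered by kubectl, copied from the log above.
	client := []string{"v4.channel.k8s.io", "v3.channel.k8s.io", "v2.channel.k8s.io", "channel.k8s.io"}
	// Hypothetical server that does not know v4 yet.
	server := []string{"channel.k8s.io", "v2.channel.k8s.io", "v3.channel.k8s.io"}
	fmt.Println(negotiate(client, server)) // v3.channel.k8s.io
}
```

An empty result would mean the two sides share no protocol version, and the upgrade cannot proceed.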

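Note that the HTTP request includes a request to upgrade the protocol. SPDY makes it possible to multiplex the individual stdin/stdout/stderr/spdy-error streams over a single TCP connection.

The API server receives the request and parses it into PodExecOptions: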

(pkg/apis/core/types.go)

To perform the requested action, the api-server needs to know which pod to contact:

(pkg/registry/core/pod/strategy.go)

The endpoint data, of course, is taken from the node information:

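```go
nodeName := types.NodeName(pod.Spec.NodeName)
if len(nodeName) == 0 {
	// The pod has not been scheduled to a node yet, so there is no kubelet to connect to.
	// ...
}
```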

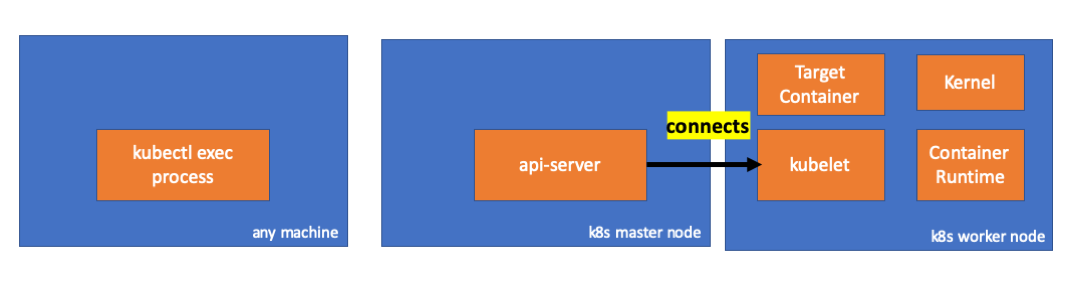

(pkg/registry/core/pod/strategy.go)

Hooray! The kubelet now has a port (node.Status.DaemonEndpoints.KubeletEndpoint.Port) that the API server can connect to:

(pkg/kubelet/client/kubelet_client.go)

From the documentation, Master-Node Communication > Master to Cluster > apiserver to kubelet:

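These connections terminate at the kubelet's HTTPS endpoint. By default, the apiserver does not verify the kubelet's serving certificate, which makes the connection subject to man-in-the-middle (MITM) attacks and unsafe to run over untrusted and/or public networks.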
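The quoted passage is about whether the apiserver checks the certificate the kubelet presents on its HTTPS port. In Go's `crypto/tls`, that choice comes down to two fields of `tls.Config`. The sketch below is only an illustration built around a hypothetical helper, `kubeletTLSConfig`; the real apiserver builds its kubelet client from its own flags (the same documentation points to `--kubelet-certificate-authority` for supplying a CA bundle).

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
)

// kubeletTLSConfig returns a client TLS configuration for talking to a kubelet.
// Without a CA bundle it mirrors the insecure default described above: the
// kubelet's serving certificate is accepted without any verification.
// With a PEM-encoded CA bundle, the certificate must chain to one of those CAs.
func kubeletTLSConfig(caPEM []byte) (*tls.Config, error) {
	if len(caPEM) == 0 {
		return &tls.Config{InsecureSkipVerify: true}, nil // open to MITM
	}
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no valid certificates in CA bundle")
	}
	return &tls.Config{RootCAs: pool}, nil
}

func main() {
	cfg, _ := kubeletTLSConfig(nil)
	fmt.Println("verification skipped:", cfg.InsecureSkipVerify) // verification skipped: true
}
```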

Now the API server knows the endpoint and establishes a connection:

(pkg/registry/core/pod/rest/subresources.go)

Let's see what is happening on the master node.

First, let's find the worker node's IP. In our case it is 192.168.205.11:

```
// any machine
$ kubectl get nodes k8s-node-1 -o wide
NAME         STATUS   ROLES    AGE   VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
k8s-node-1   Ready    <none>   9h    v1.15.3   192.168.205.11   <none>        Ubuntu 16.04.6 LTS   4.4.0-159-generic   docker://17.3.3
```

Then let's find the kubelet port (10250 in our case):

```
// any machine
$ kubectl get nodes k8s-node-1 -o jsonpath='{.status.daemonEndpoints.kubeletEndpoint}'
map[Port:10250]
```
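With the node's internal IP and the kubelet port in hand, the api-server has everything it needs for the backend URL in the upgradeaware.go log line. The Go sketch below rebuilds that URL from simplified, hypothetical `pod` and `node` structs (not the real API types) and refuses pods that have no node, in the spirit of the `nodeName` check in strategy.go. The parameter names `command`, `input`, `output` and `tty` are taken from the logged URL; for simplicity the sketch always requests input and output.

```go
package main

import (
	"errors"
	"fmt"
	"net"
	"net/url"
	"strconv"
)

// pod and node are hypothetical, heavily simplified stand-ins for the API types.
type pod struct {
	Namespace, Name, NodeName string
}

type node struct {
	InternalIP  string
	KubeletPort int // corresponds to node.Status.DaemonEndpoints.KubeletEndpoint.Port
}

// kubeletExecURL builds the kubelet exec URL for one container of a pod.
func kubeletExecURL(p pod, nodes map[string]node, container string, cmd []string, tty bool) (string, error) {
	if p.NodeName == "" {
		return "", errors.New("pod has not been scheduled to a node yet")
	}
	n, ok := nodes[p.NodeName]
	if !ok {
		return "", fmt.Errorf("node %q not found", p.NodeName)
	}
	q := url.Values{}
	for _, arg := range cmd {
		q.Add("command", arg)
	}
	q.Set("input", "1")  // always attach stdin in this sketch
	q.Set("output", "1") // always attach stdout in this sketch
	if tty {
		q.Set("tty", "1")
	}
	u := url.URL{
		Scheme:   "https",
		Host:     net.JoinHostPort(n.InternalIP, strconv.Itoa(n.KubeletPort)),
		Path:     fmt.Sprintf("/exec/%s/%s/%s", p.Namespace, p.Name, container),
		RawQuery: q.Encode(),
	}
	return u.String(), nil
}

func main() {
	nodes := map[string]node{"k8s-node-1": {InternalIP: "192.168.205.11", KubeletPort: 10250}}
	p := pod{Namespace: "default", Name: "exec-test-nginx-6558988d5-fgxgg", NodeName: "k8s-node-1"}
	u, err := kubeletExecURL(p, nodes, "exec-test-nginx", []string{"sh"}, true)
	fmt.Println(u, err)
}
```

It prints the same URL as the api-server log: `https://192.168.205.11:10250/exec/default/exec-test-nginx-6558988d5-fgxgg/exec-test-nginx?command=sh&input=1&output=1&tty=1`.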

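Now it's time to check the network. Is there a connection to the worker node (192.168.205.11)? There is! If you kill the exec process, it disappears, so I know the connection was established by the api-server as a result of the exec command.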

```
// master node
$ netstat -atn |grep 192.168.205.11
tcp 0 0 192.168.205.10:37870 192.168.205.11:10250 ESTABLISHED
…
```

The connection between kubectl and the api-server is still open. In addition, there is another connection, between the api-server and the kubelet.

3. Activity on the worker node

Now let's connect to the worker node and see what is happening there.

First, we can see that the connection to it has been established too (second line); 192.168.205.10 is the master node's IP:

```
// worker node
$ netstat -atn |grep 10250
tcp6 0 0 :::10250 :::* LISTEN
tcp6 0 0 192.168.205.11:10250 192.168.205.10:37870 ESTABLISHED
```

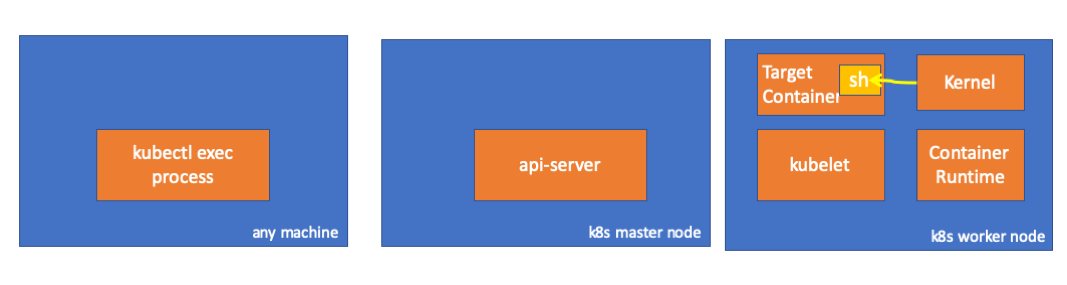

What about our sleep command? Hooray, it's there too!

```
// worker node
$ ps -afx
...
31463 ?        Sl     0:00      \_ docker-containerd-shim 7d974065bbb3107074ce31c51f5ef40aea8dcd535ae11a7b8f2dd180b8ed583a /var/run/docker/libcontainerd/7d974065bbb3107074ce31c51
31478 pts/0    Ss     0:00          \_ sh
31485 pts/0    S+     0:00              \_ sleep 5000
…
```

But wait: how did the kubelet do that? The kubelet runs a daemon that serves an API on a port, through which it accepts requests from the api-server:

(pkg/kubelet/server/streaming/server.go)

The kubelet computes a response endpoint for exec requests:

```go
func (s *server) GetExec(req *runtimeapi.ExecRequest) (*runtimeapi.ExecResponse, error) {
	if err := validateExecRequest(req); err != nil {
		return nil, err
	}
	// Store the request and get a token that identifies it.
	token, err := s.cache.Insert(req)
	if err != nil {
		return nil, err
	}
	// Return only the URL the caller should connect to for streaming.
	return &runtimeapi.ExecResponse{
		Url: s.buildURL("exec", token),
	}, nil
}
```

(pkg/kubelet/server/streaming/server.go)

Don't be confused: it does not return the result of the command, but an endpoint for communication:

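```go
type ExecResponse struct {
	// URL of the exec streaming server (the value GetExec fills in above).
	Url string
	// ... (protobuf struct tags and generated fields omitted)
}
```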

(cri-api/pkg/apis/runtime/v1alpha2/api.pb.go)

The kubelet implements the RuntimeServiceClient interface, which is part of the Container Runtime Interface (we have written more about CRI, for example, here; translator's note):

(Long listing from cri-api in kubernetes/kubernetes)

It simply uses gRPC to call methods through the Container Runtime Interface:

```go
type runtimeServiceClient struct {
	cc *grpc.ClientConn
}
```

(cri-api/pkg/apis/runtime/v1alpha2/api.pb.go)

```go
func (c *runtimeServiceClient) Exec(ctx context.Context, in *ExecRequest, opts ...grpc.CallOption) (*ExecResponse, error) {
	out := new(ExecResponse)
	// Unary gRPC call to the Exec method of the container runtime's RuntimeService.
	err := c.cc.Invoke(ctx, "/runtime.v1alpha2.RuntimeService/Exec", in, out, opts...)
	if err != nil {
		return nil, err
	}
	return out, nil
}
```

(cri-api/pkg/apis/runtime/v1alpha2/api.pb.go)

The container runtime is responsible for implementing RuntimeServiceServer:

(Long listing from cri-api in kubernetes/kubernetes)
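The division of labour described above (the kubelet holds a client, the runtime implements the server, and Exec returns a URL rather than command output) can be modelled in-process without gRPC. In the Go sketch below, `RuntimeService`, `fakeRuntime`, `ExecRequest` and `ExecResponse` are simplified hypothetical types, not the generated cri-api ones. The token cache imitates the `s.cache.Insert(req)` and `s.buildURL("exec", token)` steps of `GetExec`, with a one-time lookup when the caller connects; the streaming address is made up.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"errors"
	"fmt"
	"strings"
	"sync"
)

// ExecRequest and ExecResponse are simplified stand-ins for the CRI messages.
type ExecRequest struct {
	ContainerID string
	Cmd         []string
	TTY         bool
}

type ExecResponse struct {
	URL string // where the caller connects to stream stdin/stdout/stderr
}

// RuntimeService is the only part of the interface that matters here.
type RuntimeService interface {
	Exec(req *ExecRequest) (*ExecResponse, error)
}

// fakeRuntime plays the role of the container runtime's streaming server.
type fakeRuntime struct {
	baseURL string
	mu      sync.Mutex
	pending map[string]*ExecRequest // token -> request waiting for a stream
}

func (r *fakeRuntime) Exec(req *ExecRequest) (*ExecResponse, error) {
	if len(req.Cmd) == 0 { // basic validation
		return nil, errors.New("cmd must not be empty")
	}
	b := make([]byte, 8)
	if _, err := rand.Read(b); err != nil {
		return nil, err
	}
	token := hex.EncodeToString(b)
	r.mu.Lock()
	r.pending[token] = req
	r.mu.Unlock()
	// The command has not run yet: only a URL is returned.
	return &ExecResponse{URL: r.baseURL + "/exec/" + token}, nil
}

// consume is what the streaming endpoint would do when the caller connects:
// look up the request by token, and allow that only once.
func (r *fakeRuntime) consume(u string) (*ExecRequest, bool) {
	token := u[strings.LastIndex(u, "/")+1:]
	r.mu.Lock()
	defer r.mu.Unlock()
	req, ok := r.pending[token]
	delete(r.pending, token)
	return req, ok
}

func main() {
	rt := &fakeRuntime{baseURL: "http://127.0.0.1:12345", pending: map[string]*ExecRequest{}}
	var svc RuntimeService = rt // the kubelet only sees the interface
	resp, err := svc.Exec(&ExecRequest{ContainerID: "7d974065bbb3", Cmd: []string{"sh"}, TTY: true})
	if err != nil {
		panic(err)
	}
	fmt.Println("connect to:", resp.URL)
	req, ok := rt.consume(resp.URL)
	fmt.Println(req.Cmd, ok) // [sh] true
	_, ok = rt.consume(resp.URL)
	fmt.Println("second use allowed:", ok) // second use allowed: false
}
```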

If so, we should see a connection between the kubelet and the container runtime, right? Let's check.

Run this command before and after the exec command and compare the output. In my case, the difference is:

```
// worker node
$ ss -a -p |grep kubelet
...
u_str ESTAB 0 0 * 157937 * 157387 users:(("kubelet",pid=5714,fd=33))
...
```

Hmm... a new connection over a UNIX socket between the kubelet (pid=5714) and something unknown. What could it be? That's right, it's Docker (pid=1186)!

```
// worker node
$ ss -a -p |grep 157387
...
u_str ESTAB 0 0 * 157937 * 157387 users:(("kubelet",pid=5714,fd=33))
u_str ESTAB 0 0 /var/run/docker.sock 157387 * 157937 users:(("dockerd",pid=1186,fd=14))
...
```

As you may remember, this is the docker daemon process (pid=1186) that runs our command:

```
// worker node
$ ps -afx
...
 1186 ?        Ssl    0:55 /usr/bin/dockerd -H fd://
17784 ?        Sl     0:00  \_ docker-containerd-shim 53a0a08547b2f95986402d7f3b3e78702516244df049ba6c5aa012e81264aa3c /var/run/docker/libcontainerd/53a0a08547b2f95986402d7f3
17801 pts/2    Ss     0:00      \_ sh
17827 pts/2    S+     0:00          \_ sleep 5000
...
```

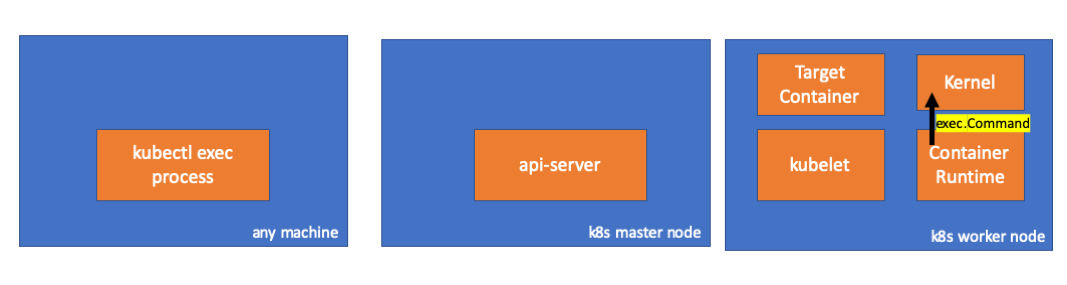

4. Activity in the container runtime

Let's examine the CRI-O source code to understand what is going on. The logic in Docker is similar.

There is a server responsible for implementing RuntimeServiceServer:

(cri-o/server/server.go)

(cri-o/server/container_exec.go)

At the end of the chain, the container runtime executes the command on the worker node:

(cri-o/internal/oci/runtime_oci.go)

Finally, the kernel executes the commands:

Recap

- The API server can also initiate a connection to the kubelet.

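- The following connections stay open until the interactive exec session ends:

  - between kubectl and the api-server;

  - between the api-server and the kubelet;

  - between the kubelet and the container runtime.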

- Neither kubectl nor the api-server can run anything on the worker nodes. The kubelet can, but for these operations it, too, interacts with the container runtime.


P.S. from the translator

Read also on our blog: