In this article we will assemble a dashboard for SEO traffic analysis. We will export the data with Python scripts and .csv files.

What will we export?

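To analyze how search phrase positions change over time, you need exports from Yandex.Webmaster and Google Search Console. To judge how "useful" it is to improve the position of a given search phrase, frequency data helps; it can be obtained from Yandex.Direct and Google Ads. Finally, to analyze how the site behaves on the technical side, we will use PageSpeed Insights.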

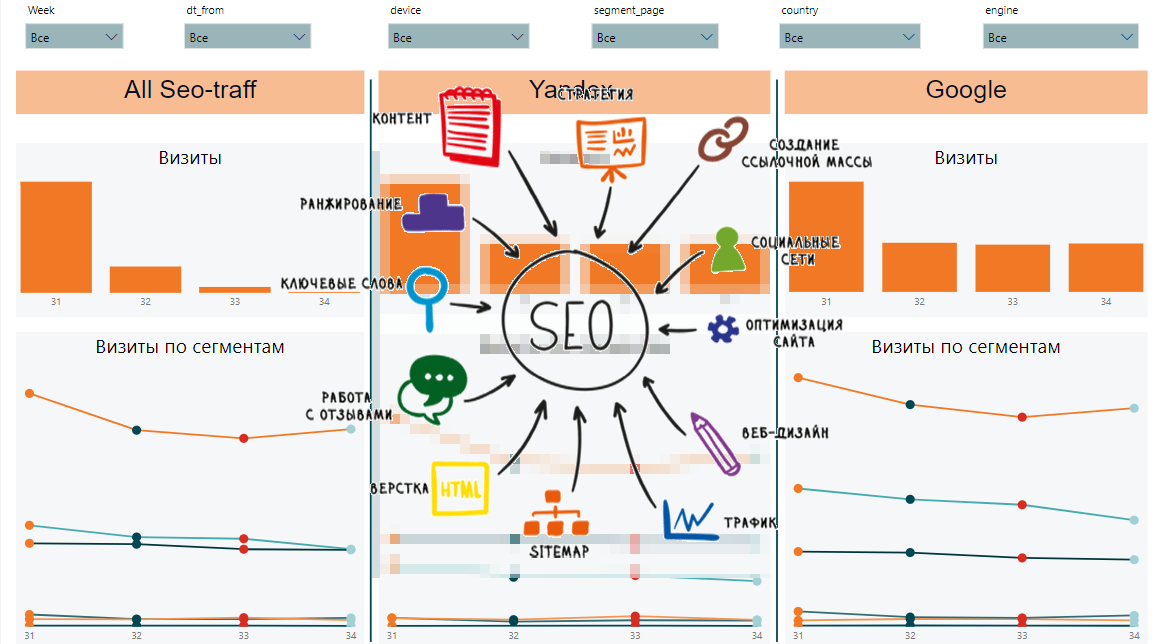

SEO traffic dynamics

Google Search Console

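To interact with the API we will use the searchconsole library. Its GitHub page describes in detail how to obtain the tokens needed to log in. The procedure for exporting the data and loading it into an MS SQL database looks like this: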

def google_reports():
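The body of `google_reports()` is not reproduced here. As a rough sketch of the step between the API and the database, the snippet below flattens a Search Analytics response into table-ready records. It assumes the raw response shape of the Search Console API (a `rows` list whose items carry `keys`, `clicks`, `impressions`, `ctr` and `position`); the function name `flatten_search_rows` and the sample data are hypothetical and not taken from the original script.

```python
from typing import Any, Dict, List


def flatten_search_rows(response: Dict[str, Any],
                        dimensions: List[str]) -> List[Dict[str, Any]]:
    """Turn a Search Analytics response into flat, table-ready records."""
    records = []
    for row in response.get("rows", []):
        # "keys" holds one value per requested dimension, in request order.
        record = dict(zip(dimensions, row["keys"]))
        record["clicks"] = row["clicks"]
        record["impressions"] = row["impressions"]
        record["ctr"] = row["ctr"]
        record["position"] = row["position"]
        records.append(record)
    return records


# Hand-written sample in the shape of a Search Analytics response (not real data).
sample = {
    "rows": [
        {"keys": ["buy shoes", "https://example.com/shoes"],
         "clicks": 12, "impressions": 340, "ctr": 0.035, "position": 6.4},
    ]
}
records = flatten_search_rows(sample, ["query", "page"])
print(records[0]["query"], records[0]["position"])
```

Each record can then be appended to a DataFrame or passed to whatever loader writes to the database.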

Yandex.Webmaster

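Unfortunately, Webmaster lets you export only 500 search phrases. It also cannot export breakdowns by country, device type and so on. Because of these limits, in addition to exporting positions for 500 phrases from Webmaster, we will export landing-page data from Yandex.Metrica. For sites that do not have many search phrases, 500 will be enough. If your semantic core for Yandex is large, you will have to export positions from other sources or write your own position parser.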

    def yandex_reports():
        token = "..."

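PageSpeed Insights

This tool lets you evaluate how quickly a site's content loads. If the site starts to load more slowly, its positions in search results can drop significantly.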

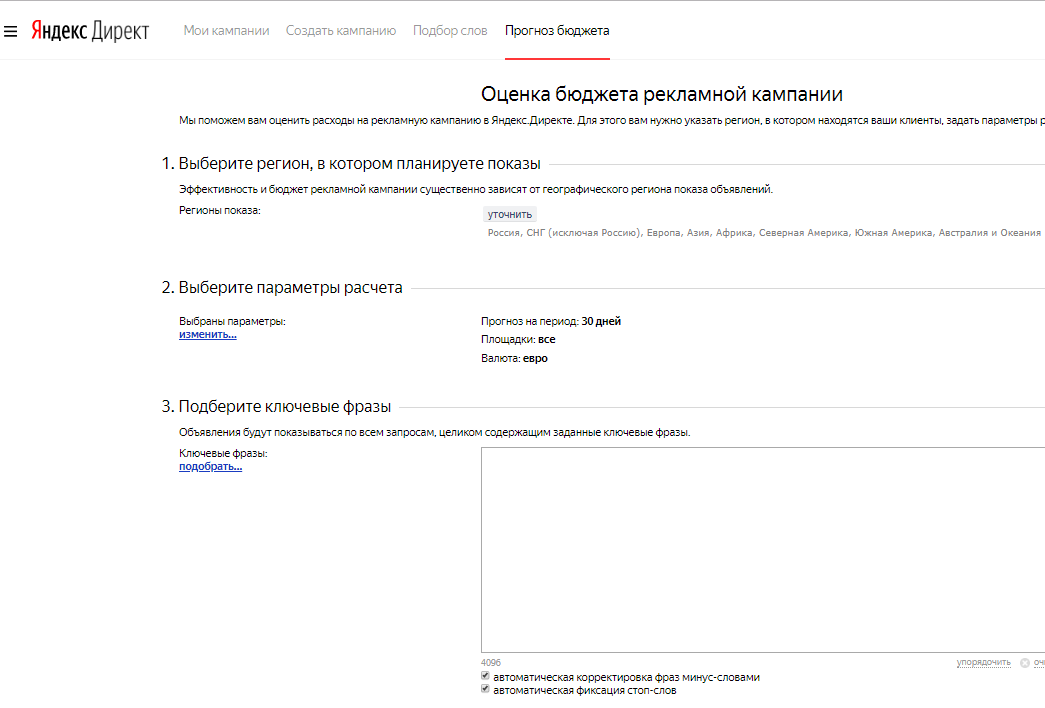

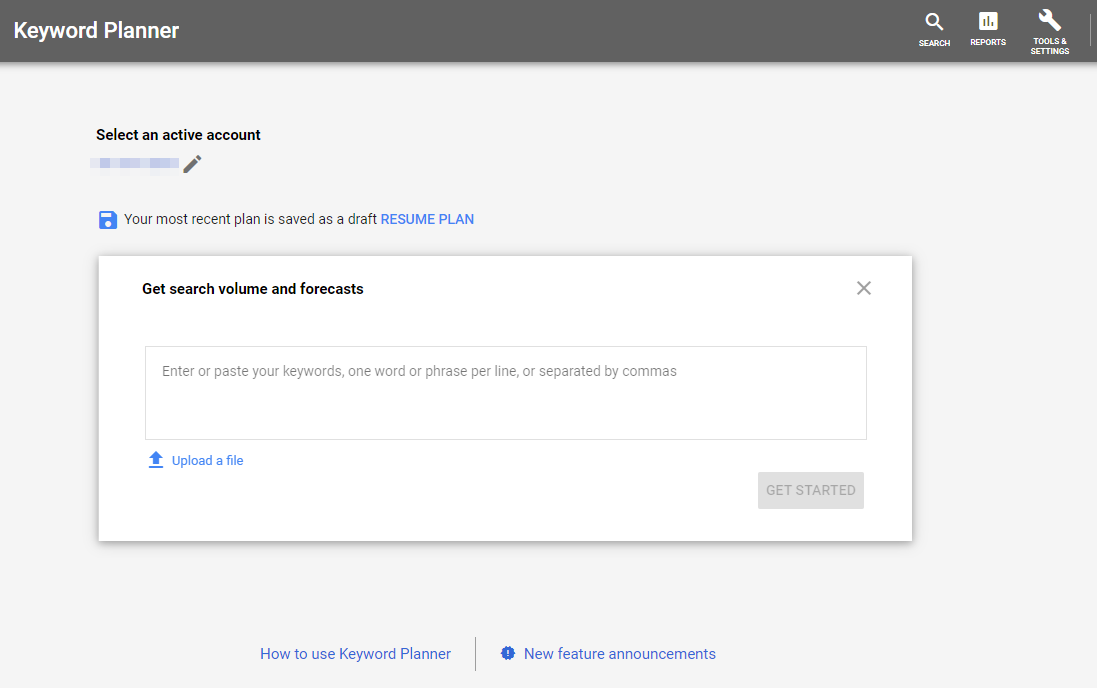
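The article does not show its speed export, so the following is only a sketch of how such a check could be scripted. It assumes the public PageSpeed Insights v5 endpoint and reads two fields from the Lighthouse part of the response; the helper names `build_psi_url` and `extract_speed`, and the sample payload, are hypothetical.

```python
from typing import Any, Dict
from urllib.parse import urlencode

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the request URL; strategy is "mobile" or "desktop"."""
    return PSI_ENDPOINT + "?" + urlencode({"url": page_url, "strategy": strategy})


def extract_speed(payload: Dict[str, Any]) -> Dict[str, float]:
    """Pick the performance score (0..1) and first contentful paint (ms)."""
    lighthouse = payload["lighthouseResult"]
    return {
        "performance_score": lighthouse["categories"]["performance"]["score"],
        "fcp_ms": lighthouse["audits"]["first-contentful-paint"]["numericValue"],
    }


# Hand-written payload containing only the fields read above (not real data).
sample_payload = {
    "lighthouseResult": {
        "categories": {"performance": {"score": 0.82}},
        "audits": {"first-contentful-paint": {"numericValue": 1450.0}},
    }
}
speed = extract_speed(sample_payload)
print(build_psi_url("https://example.com/", "desktop"))
print(speed)
```

Fetching the URL with `requests.get(...).json()` once per page and per strategy would yield rows that fit a speed table.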

Google Ads and Yandex.Direct

To estimate how often search queries are made, we export frequencies for the phrases in our SEO core.
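Since the frequencies arrive as .csv exports, a small loader is enough to bring them into one shape. The sketch below is an assumption about how that could look: the column names and delimiter are passed in as parameters because the real export headers are not given in the article, and `load_frequencies` is a hypothetical helper.

```python
import csv
import io
from typing import Dict, TextIO


def load_frequencies(handle: TextIO, phrase_col: str, freq_col: str,
                     delimiter: str = ",") -> Dict[str, int]:
    """Read a frequency export into a {phrase: frequency} mapping."""
    frequencies = {}
    for row in csv.DictReader(handle, delimiter=delimiter):
        phrase = row[phrase_col].strip().lower()
        # Drop thousands separators such as "1 200" or "1,200".
        raw = row[freq_col].replace(" ", "").replace(",", "")
        if raw.isdigit():  # skip rows without a numeric frequency
            frequencies[phrase] = int(raw)
    return frequencies


# Invented sample with hypothetical column names "phrase" and "shows".
sample_csv = io.StringIO("phrase;shows\nBuy shoes;1 200\nred boots;n/a\n")
freq = load_frequencies(sample_csv, "phrase", "shows", delimiter=";")
print(freq)
```

Lower-casing the phrase makes it easier to join frequencies with the position tables later.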

Yandex budget forecast

Google Keyword Planner

Yandex.Metrica

We export pageview and visit data for SEO traffic, broken down by landing page.

    import requests
    import pandas as pd
    from datetime import datetime, timedelta

    token = "..."  # OAuth token for the Yandex.Metrica API
    headers = {"Authorization": "OAuth " + token}

    # Report window: from 9 days ago to 3 days ago
    now = datetime.now()
    fr = (now - timedelta(days=9)).strftime("%Y-%m-%d")
    to = (now - timedelta(days=3)).strftime("%Y-%m-%d")

    # Passing params as a dict lets requests URL-encode the filter expression
    params = {
        "ids": "ids",  # placeholder: put your Metrica counter ID here
        "metrics": "ym:s:pageviews,ym:s:visits",
        "dimensions": "ym:s:startURL,ym:s:lastsignSearchEngine,"
                      "ym:s:regionCountry,ym:s:deviceCategory",
        "date1": fr,
        "date2": to,
        "group": "all",
        "filters": "ym:s:lastsignTrafficSource=='organic'",
        "limit": 50000,
    }
    res = requests.get("https://api-metrika.yandex.net/stat/v1/data/",
                       params=params, headers=headers)
    res.raise_for_status()
    a = res.json()

    # Collect rows in a list: DataFrame.append was removed in pandas 2.0
    rows = []
    for i in a["data"]:
        rows.append({
            "page": i["dimensions"][0]["name"],
            "engine": i["dimensions"][1]["name"],
            "country": i["dimensions"][2]["name"],
            "device": i["dimensions"][3]["name"],
            "view": i["metrics"][0],
            "visits": i["metrics"][1],
            "pageviews": i["metrics"][0],  # same metric as "view"
            "dt_from": fr,
            "dt_to": to,
        })

    df = pd.DataFrame(rows, columns=["page", "device", "view", "dt_from", "dt_to",
                                     "engine", "visits", "country", "pageviews"])
    to_sql_server(df, "yandex_pages")  # the author's MS SQL loader, not shown here
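The request above covers the range from 9 days ago to 3 days ago, which is seven days inclusive with a three-day lag. A minimal sketch of that date arithmetic as a reusable function follows; `report_window` and its parameter names are hypothetical, not part of the original script.

```python
from datetime import date, timedelta
from typing import Tuple


def report_window(today: date, lag_days: int = 3,
                  length_days: int = 7) -> Tuple[str, str]:
    """Return (date1, date2) strings for a window ending lag_days before today."""
    end = today - timedelta(days=lag_days)
    # Both ends are inclusive, so a 7-day window spans end - 6 .. end.
    start = end - timedelta(days=length_days - 1)
    return start.strftime("%Y-%m-%d"), end.strftime("%Y-%m-%d")


fr, to = report_window(date(2024, 1, 10))
print(fr, to)  # 2024-01-01 2024-01-07
```

Passing the date in explicitly, rather than calling `datetime.now()` inside, makes it easy to re-run the export for a past week.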

Assembling the data in Power BI

Let's look at what we have exported:

- google_positions and yandex_positions

- google_frequency and yandex_frequency

- google_speed and yandex_speed

- yandex_metrika

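From this data we can build dynamics by week and by segment, summary data by segment and by query, dynamics and summary data by page, and the dynamics of content loading speed. This is what the final report looks like:

On the one hand, there are many different tables, and it is hard to see the overall trend. On the other hand, each table shows important data on positions, impressions, clicks, CTR and page load speed.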
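The weekly roll-up is done in Power BI in this article, but the same idea can be prototyped in Python to sanity-check the numbers. The sketch below groups daily rows by the Monday of their week and computes an impression-weighted average position. The weighting is an assumption made here, not something the article specifies, and the function names and sample rows are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict, Iterable, Tuple


def week_start(day: date) -> date:
    """Return the Monday of the week that contains `day`."""
    return day - timedelta(days=day.weekday())


def weekly_positions(rows: Iterable[Tuple[date, float, int]]) -> Dict[date, float]:
    """Average position per week, weighted by impressions.

    Each row is (day, average position, impressions).
    """
    weighted = defaultdict(float)
    impressions = defaultdict(int)
    for day, position, shows in rows:
        week = week_start(day)
        weighted[week] += position * shows
        impressions[week] += shows
    # Weeks with zero impressions are left out to avoid dividing by zero.
    return {week: weighted[week] / impressions[week]
            for week in impressions if impressions[week] > 0}


# Invented sample rows.
rows = [
    (date(2024, 1, 1), 4.0, 100),  # Monday
    (date(2024, 1, 3), 8.0, 300),  # Wednesday of the same week
    (date(2024, 1, 8), 5.0, 50),   # Monday of the next week
]
weekly = weekly_positions(rows)
print(weekly)
```

Weighting by impressions keeps a rarely shown phrase from skewing the weekly figure as much as a frequently shown one.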

Articles in this series: